A law of adversarial risk, interpolation, and label noise


Jul 08, 2022

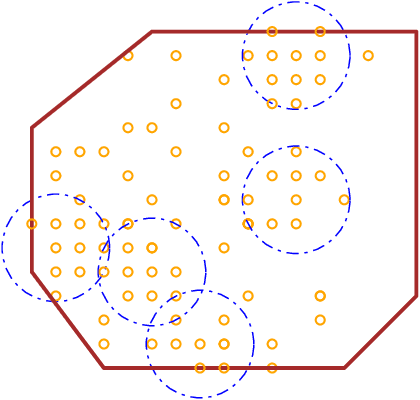

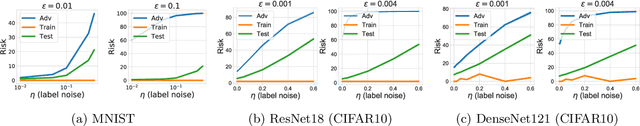

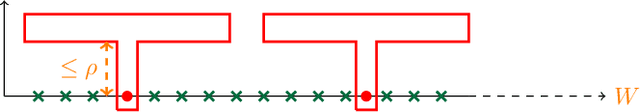

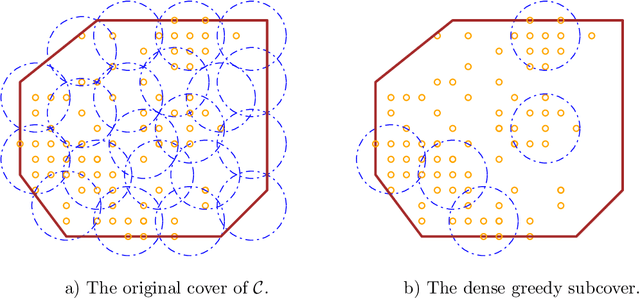

In supervised learning, it has been shown that label noise in the data can be interpolated without penalties on test accuracy under many circumstances. We show that interpolating label noise induces adversarial vulnerability, and prove the first theorem showing the dependence of label noise and adversarial risk in terms of the data distribution. Our results are almost sharp without accounting for the inductive bias of the learning algorithm. We also show that inductive bias makes the effect of label noise much stronger.
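The gap between noise rate and adversarial risk can be seen in a small toy sketch. This is not the paper's construction or its theorem. It assumes a constant true label on the interval [0, 1], a 1-nearest-neighbour classifier as a stand-in for an interpolating learner, and a deterministic 10% pattern of flipped labels. All function names and parameters here are hypothetical and chosen for illustration only.

```python
# Toy illustration (an assumption-laden sketch, not the paper's method):
# a 1-NN classifier that interpolates noisy labels on [0, 1].

def nearest_label(x, train_x, train_y):
    """Predict with 1-NN: return the label of the closest training point."""
    idx = min(range(len(train_x)), key=lambda i: abs(train_x[i] - x))
    return train_y[idx]

def standard_risk(test_x, train_x, train_y, true_label=0):
    """Fraction of test points that the classifier labels incorrectly."""
    errors = sum(nearest_label(x, train_x, train_y) != true_label for x in test_x)
    return errors / len(test_x)

def attack_succeeds(x, train_x, train_y, eps, true_label=0):
    """Simple attack: move x by at most eps toward each mislabelled training point."""
    for t, y in zip(train_x, train_y):
        if y == true_label:
            continue
        step = max(-eps, min(eps, t - x))  # clip the move to the eps-ball
        if nearest_label(x + step, train_x, train_y) != true_label:
            return True
    return False

def adversarial_risk(test_x, train_x, train_y, eps, true_label=0):
    """Fraction of test points this attack breaks (a lower bound on the true adversarial risk)."""
    hits = sum(attack_succeeds(x, train_x, train_y, eps, true_label) for x in test_x)
    return hits / len(test_x)

# 100 training points on a regular grid; the true label is 0 everywhere.
train_x = [(i + 0.5) / 100 for i in range(100)]
# Label noise: every 10th training label is flipped to 1 (10% noise rate).
train_y = [1 if i % 10 == 0 else 0 for i in range(100)]
# 1000 test points on a finer grid, offset so no distance ties occur.
test_x = [(j + 0.5) / 1000 for j in range(1000)]

std_risk = standard_risk(test_x, train_x, train_y)
adv_risk = adversarial_risk(test_x, train_x, train_y, eps=0.05)
print("standard risk:", std_risk)
print("adversarial risk at eps=0.05:", adv_risk)
```

In this setup the standard risk matches the 10% noise rate, while the adversarial risk at radius 0.05 exceeds 90%, because almost every test point lies within reach of some mislabelled training point. The numbers are specific to this toy configuration and say nothing about the constants in the paper's bound.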

* 14 pages, 4 figures. ICML 2022 Workshop on Responsible Decision Making in Dynamic Environments

View paper on OpenReview
