A Lagrangian Duality Approach to Active Learning


Feb 08, 2022

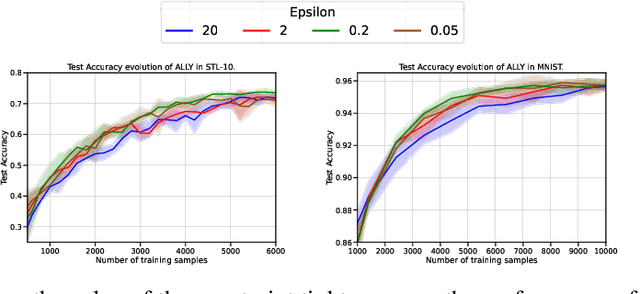

We consider the batch active learning problem, where only a subset of the training data is labeled, and the goal is to query a batch of unlabeled samples to be labeled so as to maximally improve model performance. We formulate the learning problem using constrained optimization, where each constraint bounds the performance of the model on labeled samples. Considering a primal-dual approach, we optimize the primal variables, corresponding to the model parameters, as well as the dual variables, corresponding to the constraints. As each dual variable indicates how significantly the perturbation of the respective constraint affects the optimal value of the objective function, we use it as a proxy of the informativeness of the corresponding training sample. Our approach, which we refer to as Active Learning via Lagrangian dualitY, or ALLY, leverages this fact to select a diverse set of unlabeled samples with the highest estimated dual variables as our query set. We show, via numerical experiments, that our proposed approach performs similarly to or better than state-of-the-art active learning methods in a variety of classification and regression tasks. We also demonstrate how ALLY can be used in a generative mode to create novel, maximally-informative samples. The implementation code for ALLY can be found at https://github.com/juanelenter/ALLY.
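The primal-dual idea in the abstract can be illustrated with a small sketch. It is not the authors' implementation (that lives in the linked repository) and it rests on several assumptions that the abstract does not state: a logistic-regression model, a per-sample constraint of the form loss_i <= eps, plain gradient descent/ascent updates, and a ridge regressor as a hypothetical stand-in for estimating dual variables on unlabeled samples. The names `primal_dual_train`, `select_queries`, `eps`, and the step sizes are all illustrative. The diversity step mentioned in the abstract is omitted here.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def per_sample_loss(w, X, y):
    # Logistic loss of each sample; labels y are in {0, 1}.
    p = np.clip(sigmoid(X @ w), 1e-12, 1 - 1e-12)
    return -(y * np.log(p) + (1 - y) * np.log(1 - p))

def primal_dual_train(X, y, eps=0.2, lr_w=0.1, lr_dual=0.05, steps=300):
    """Alternate primal descent and dual ascent on the (assumed) Lagrangian
    L(w, lam) = (1/n) * sum_i [loss_i(w) + lam_i * (loss_i(w) - eps)]."""
    n, d = X.shape
    w = np.zeros(d)      # primal variables: model parameters
    lam = np.zeros(n)    # dual variables: one per labeled sample
    for _ in range(steps):
        # Primal step: each sample's gradient is weighted by (1 + lam_i).
        weights = (1.0 + lam) / n
        grad = X.T @ (weights * (sigmoid(X @ w) - y))
        w -= lr_w * grad
        # Dual step: ascent on the constraint slack, projected onto lam >= 0.
        slack = per_sample_loss(w, X, y) - eps
        lam = np.maximum(0.0, lam + lr_dual * slack)
    return w, lam

def select_queries(X_lab, lam, X_pool, k=5, ridge=1.0):
    # Hypothetical estimator: ridge regression from features to dual variables,
    # used only to score unlabeled samples; no diversity criterion is applied.
    d = X_lab.shape[1]
    beta = np.linalg.solve(X_lab.T @ X_lab + ridge * np.eye(d), X_lab.T @ lam)
    lam_hat = X_pool @ beta
    return np.argsort(-lam_hat)[:k], lam_hat

# Toy data (synthetic, for illustration only).
rng = np.random.default_rng(0)
X_lab = rng.normal(size=(40, 3))
y_lab = (X_lab @ np.array([1.5, -1.0, 0.5]) + 0.5 * rng.normal(size=40) > 0).astype(float)
X_pool = rng.normal(size=(100, 3))

w, lam = primal_dual_train(X_lab, y_lab)
query, lam_hat = select_queries(X_lab, lam, X_pool, k=5)
print("labeled samples with active constraints:", int((lam > 0).sum()))
print("indices of pool samples to query:", query)
```

Samples whose constraint is hard to satisfy accumulate large dual variables, while easy samples stay at zero, which is the sense in which the dual variable acts as an informativeness score in this sketch.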
