A Hypergraph-Partitioned Vertex Programming Approach for Large-scale Consensus Optimization

Paper and Code

Aug 30, 2013

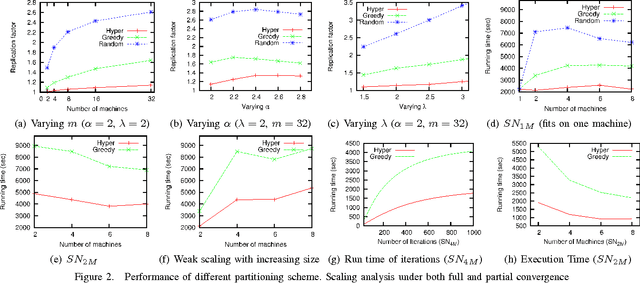

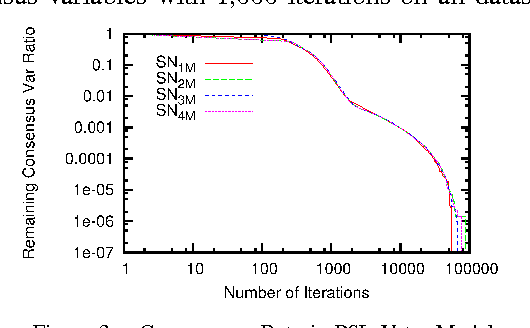

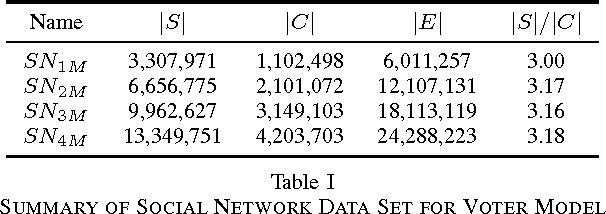

In modern data science problems, techniques for extracting value from big data require performing large-scale optimization over heterogenous, irregularly structured data. Much of this data is best represented as multi-relational graphs, making vertex programming abstractions such as those of Pregel and GraphLab ideal fits for modern large-scale data analysis. In this paper, we describe a vertex-programming implementation of a popular consensus optimization technique known as the alternating direction of multipliers (ADMM). ADMM consensus optimization allows elegant solution of complex objectives such as inference in rich probabilistic models. We also introduce a novel hypergraph partitioning technique that improves over state-of-the-art partitioning techniques for vertex programming and significantly reduces the communication cost by reducing the number of replicated nodes up to an order of magnitude. We implemented our algorithm in GraphLab and measure scaling performance on a variety of realistic bipartite graph distributions and a large synthetic voter-opinion analysis application. In our experiments, we are able to achieve a 50% improvement in runtime over the current state-of-the-art GraphLab partitioning scheme.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge