A Homotopy-based Algorithm for Sparse Multiple Right-hand Sides Nonnegative Least Squares


Nov 24, 2020

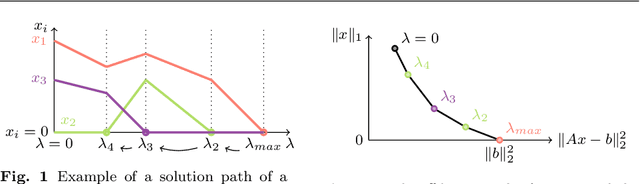

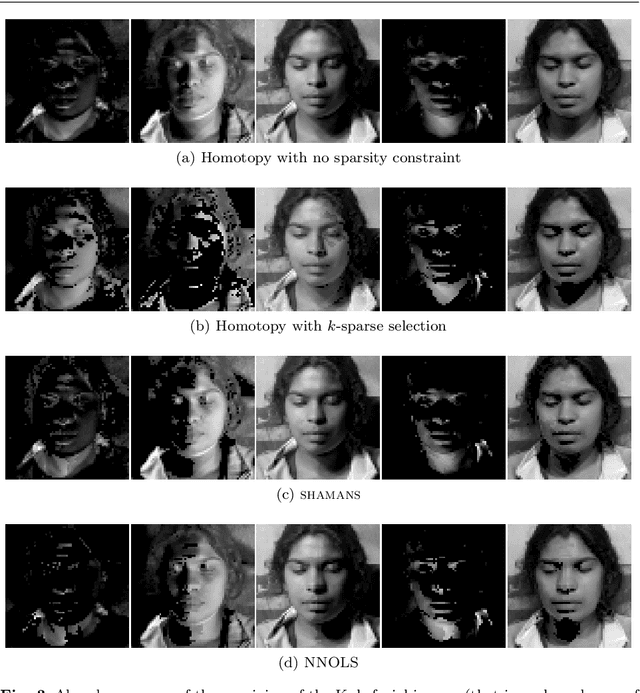

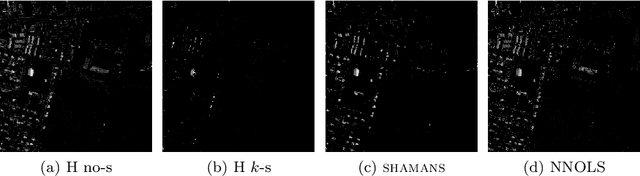

Nonnegative least squares (NNLS) problems arise in models that rely on additive linear combinations. In particular, they are at the core of nonnegative matrix factorization (NMF) algorithms. The nonnegativity constraint is known to naturally favor sparsity, that is, solutions with few nonzero entries. However, it is often useful to further enhance this sparsity, as it improves the interpretability of the results and helps reduce noise. While the $\ell_0$-"norm", equal to the number of nonzero entries in a vector, is a natural sparsity measure, its combinatorial nature makes it difficult to use in practical optimization schemes. Most existing approaches thus rely either on its convex surrogate, the $\ell_1$-norm, or on heuristics such as greedy algorithms. In the case of multiple right-hand sides NNLS (MNNLS), which are used within NMF algorithms, sparsity is often enforced column- or row-wise, and the fact that the solution is a matrix is not exploited. In this paper, we first introduce a novel formulation for sparse MNNLS, with a matrix-wise $\ell_0$ sparsity constraint. Then, we present a two-step algorithm to tackle this problem. The first step uses a homotopy algorithm to produce the whole regularization path for all the $\ell_1$-penalized NNLS problems arising in MNNLS, that is, to produce a set of solutions representing different tradeoffs between reconstruction error and sparsity. The second step selects solutions among these paths in order to build a sparsity-constrained matrix that minimizes the reconstruction error. We illustrate the advantages of our proposed algorithm for the unmixing of facial and hyperspectral images.
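
The two-step structure described above can be illustrated with a minimal sketch. It is not the authors' implementation, and it rests on several assumptions. The names `W` (dictionary), `M` (right-hand sides), `H` (solution matrix), and `q` (matrix-wise budget of nonzero entries) are hypothetical. The homotopy algorithm is replaced by a plain grid of $\ell_1$ penalties solved with projected gradient, so only a sampled approximation of each regularization path is obtained. The selection step is written here as a small dynamic program over the sampled candidates, which may differ from the selection procedure used in the paper.

```python
import numpy as np

def l1_nnls(W, m, lam, n_iter=500):
    """Approximately solve min_{h >= 0} 0.5*||W h - m||^2 + lam*sum(h)
    by projected gradient (a stand-in for the homotopy algorithm)."""
    G, b = W.T @ W, W.T @ m
    step = 1.0 / max(np.linalg.norm(G, 2), 1e-12)  # 1 / Lipschitz constant
    h = np.zeros(W.shape[1])
    for _ in range(n_iter):
        h = np.maximum(0.0, h - step * (G @ h - b + lam))
    return h

def column_paths(W, M, n_lams=20):
    """Step 1 (sampled): for each column of M, keep the best candidate
    found for each support size k, stored as {k: (squared error, h)}."""
    paths = []
    for j in range(M.shape[1]):
        m = M[:, j]
        # The zero vector is always available as the k = 0 candidate.
        cands = {0: (float(m @ m), np.zeros(W.shape[1]))}
        lam_max = max(float(np.max(W.T @ m)), 1e-12)  # solution is 0 beyond this
        for lam in np.linspace(0.0, lam_max, n_lams):
            h = l1_nnls(W, m, lam)
            h[h < 1e-8] = 0.0  # treat tiny entries as exact zeros
            k = int(np.count_nonzero(h))
            err = float(np.sum((W @ h - m) ** 2))
            if k not in cands or err < cands[k][0]:
                cands[k] = (err, h)
        paths.append(cands)
    return paths

def select(paths, q):
    """Step 2 (assumed variant): pick one candidate per column so that the
    total number of nonzeros is at most q and the total error is minimal."""
    inf = float("inf")
    cost = [0.0] + [inf] * q  # cost[b]: best error using exactly b nonzeros
    picks = []
    for cands in paths:
        new, pick = [inf] * (q + 1), [None] * (q + 1)
        for b in range(q + 1):
            if cost[b] == inf:
                continue
            for k, (err, _) in cands.items():
                if b + k <= q and cost[b] + err < new[b + k]:
                    new[b + k], pick[b + k] = cost[b] + err, k
        cost = new
        picks.append(pick)
    b = min(range(q + 1), key=lambda i: cost[i])
    ks = []
    for pick in reversed(picks):  # backtrack the chosen support sizes
        ks.append(pick[b])
        b -= pick[b]
    return ks[::-1]

def sparse_mnnls(W, M, q, n_lams=20):
    """Return a nonnegative H with at most q nonzeros approximating M by W @ H."""
    paths = column_paths(W, M, n_lams)
    ks = select(paths, q)
    return np.column_stack([paths[j][k][1] for j, k in enumerate(ks)])
```

Calling `sparse_mnnls(W, M, q)` returns a nonnegative matrix whose total number of nonzero entries does not exceed `q`. The budget is shared across columns, so a column that is harder to reconstruct can receive more nonzeros than an easier one, which is the point of a matrix-wise rather than column-wise constraint.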
