A High-Fidelity Open Embodied Avatar with Lip Syncing and Expression Capabilities


Oct 15, 2019

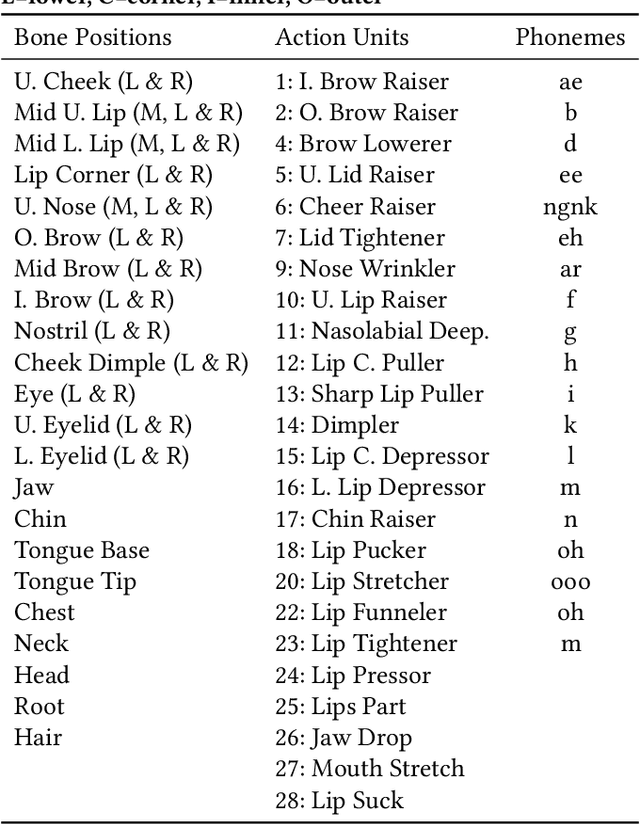

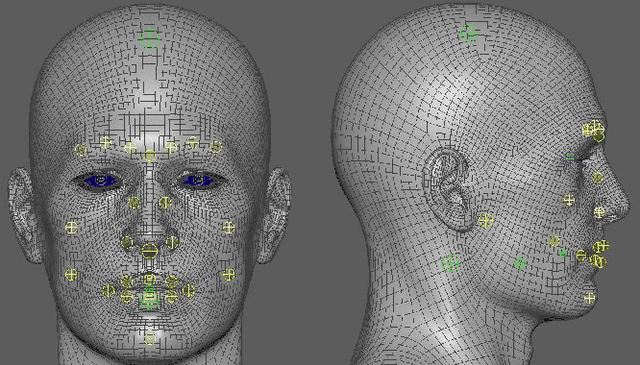

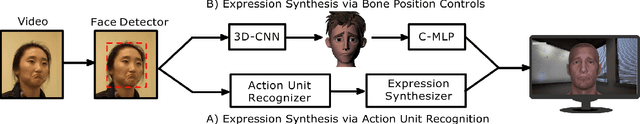

Embodied virtual agents have many applications and offer benefits over disembodied agents: they can leverage non-verbal social and interactional cues, much as humans do when interacting with each other. We present an open embodied avatar, built on the Unreal Engine, that can be controlled via a simple Python programming interface. The avatar supports lip syncing (phoneme control), head gestures, and facial expressions (using either facial action units or cardinal emotion categories). We release code and models to illustrate how the avatar can be controlled like a puppet or used to create a simple conversational agent using public application programming interfaces (APIs). GitHub link: https://github.com/danmcduff/AvatarSim
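To make the idea of puppet-style control more concrete, the following is a minimal sketch of how a single control frame combining a phoneme, head pose, and facial expression might be assembled in Python. It is not the AvatarSim interface: the function names (`build_frame`, `encode_frame`), the JSON field names, the phoneme label, and the intensity values are all assumptions made for illustration, and the repository should be consulted for the real message format. The emotion presets use commonly cited FACS action unit combinations, with made-up intensities.

```python
import json

# HYPOTHETICAL presets: emotion category -> facial action unit intensities.
# The AU combinations follow commonly cited FACS prototypes; the intensity
# values are illustrative and do not come from the AvatarSim repository.
EMOTION_PRESETS = {
    "happiness": {"AU6": 0.8, "AU12": 1.0},
    "sadness": {"AU1": 0.7, "AU4": 0.5, "AU15": 0.8},
    "surprise": {"AU1": 0.9, "AU2": 0.9, "AU5": 0.7, "AU26": 0.8},
}


def clamp(value, low=0.0, high=1.0):
    """Keep an intensity inside the assumed [0, 1] range."""
    return max(low, min(high, value))


def build_frame(action_units=None, emotion=None, phoneme=None,
                pitch=0.0, yaw=0.0, roll=0.0):
    """Assemble one control frame as a plain dict (hypothetical schema).

    An emotion category expands to its preset action units; explicitly
    supplied action units then override the preset values.
    """
    units = {}
    if emotion is not None:
        if emotion not in EMOTION_PRESETS:
            raise ValueError(f"unknown emotion category: {emotion}")
        units.update(EMOTION_PRESETS[emotion])
    if action_units:
        units.update(action_units)
    return {
        "action_units": {name: clamp(v) for name, v in units.items()},
        "phoneme": phoneme,  # label used for lip syncing, or None
        "head": {"pitch": pitch, "yaw": yaw, "roll": roll},
    }


def encode_frame(frame):
    """Serialize a frame to JSON, ready to send over whatever transport is used."""
    return json.dumps(frame, sort_keys=True)


if __name__ == "__main__":
    frame = build_frame(emotion="happiness", phoneme="AA", yaw=10.0)
    print(encode_frame(frame))
```

Keeping frame construction separate from transport means the same frames could drive the avatar interactively or be produced by a conversational agent, whichever messaging layer the real interface uses.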