A geometric perspective on functional outlier detection


Sep 14, 2021

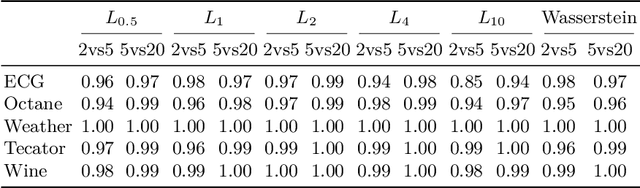

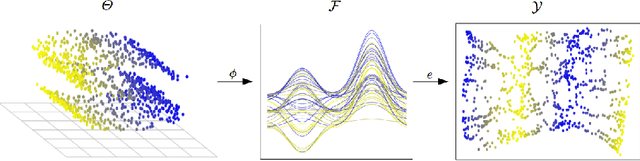

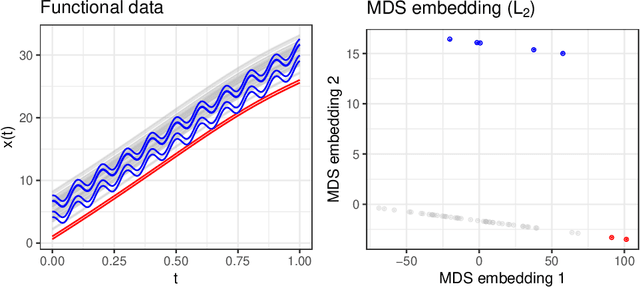

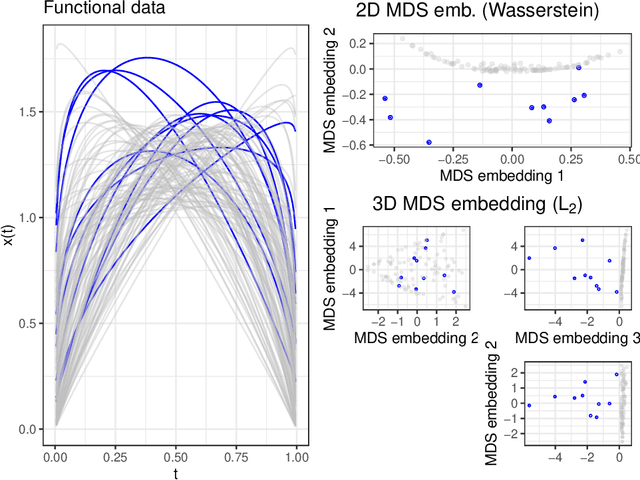

We consider functional outlier detection from a geometric perspective, specifically for functional data sets drawn from a functional manifold that is defined by the data's modes of variation in amplitude and phase. Based on this manifold, we develop a conceptualization of functional outlier detection that is more widely applicable and realistic than previously proposed ones. Our theoretical and experimental analyses demonstrate several important advantages of this perspective. It considerably improves theoretical understanding and allows complex functional outlier scenarios to be described and analysed consistently and in full generality, by differentiating between structurally anomalous outlier data that are off-manifold and distributionally outlying data that are on-manifold but at its margins. It also improves the practical feasibility of functional outlier detection: we show that simple manifold learning methods can be used to reliably infer and visualize the geometric structure of functional data sets. We also show that standard outlier detection methods requiring tabular data inputs can be applied to functional data very successfully by simply using the vector-valued representations learned by manifold learning methods as input features. Our experiments on synthetic and real data sets demonstrate that this approach leads to outlier detection performance at least on par with existing functional data-specific methods in a large variety of settings, without the highly specialized, complex methodology and narrow domain of application these methods often entail.
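The two-step idea in the abstract (learn a low-dimensional vector representation of the curves, then run a tabular outlier detector on it) can be illustrated with a minimal sketch. Everything concrete here is an assumption made for illustration and is not taken from the paper: the simulated sine curves with random amplitude and phase, the single linear curve standing in for a structural (off-manifold) outlier, classical MDS on pairwise L2 distances as the manifold learning step, and the k-th nearest-neighbour distance as the outlier score. All function names are hypothetical.

```python
import numpy as np


def simulate_curves(n_inliers=100, n_grid=50, seed=0):
    """Hypothetical data: sine curves varying in amplitude and phase, plus one line."""
    rng = np.random.default_rng(seed)
    t = np.linspace(0.0, 1.0, n_grid)
    amp = rng.uniform(0.5, 1.5, size=n_inliers)
    phase = rng.uniform(-0.1, 0.1, size=n_inliers)
    inliers = amp[:, None] * np.sin(2 * np.pi * (t[None, :] + phase[:, None]))
    # Assumed structural outlier: a linear trend, i.e. a different functional form.
    outlier = (4.0 * t - 2.0)[None, :]
    return t, np.vstack([inliers, outlier])


def l2_distance_matrix(curves, t):
    """Approximate pairwise L2 distances between curves on an equidistant grid."""
    dt = t[1] - t[0]
    diff = curves[:, None, :] - curves[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=2) * dt)


def classical_mds(dist, n_components=2):
    """Classical (Torgerson) MDS: embed points from a distance matrix."""
    n = dist.shape[0]
    centering = np.eye(n) - np.ones((n, n)) / n
    gram = -0.5 * centering @ (dist ** 2) @ centering
    eigvals, eigvecs = np.linalg.eigh(gram)
    order = np.argsort(eigvals)[::-1][:n_components]
    scale = np.sqrt(np.clip(eigvals[order], 0.0, None))
    return eigvecs[:, order] * scale


def knn_outlier_score(features, k=5):
    """Distance to the k-th nearest neighbour; larger means more outlying."""
    d = np.linalg.norm(features[:, None, :] - features[None, :, :], axis=2)
    # After sorting each row, column 0 is the zero distance of a point to itself.
    return np.sort(d, axis=1)[:, k]


t, curves = simulate_curves()
embedding = classical_mds(l2_distance_matrix(curves, t), n_components=2)
scores = knn_outlier_score(embedding, k=5)
print("index of most outlying curve:", int(np.argmax(scores)))
```

In this toy setup the linear curve is the last row of `curves`, so it should receive the largest score. Any other tabular detector could be applied to `embedding` in place of the k-nearest-neighbour score, and the embedding itself can be plotted to inspect the geometric structure of the data.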
