A General Framework for Analyzing Stochastic Dynamics in Learning Algorithms

Paper and Code

Jun 11, 2020

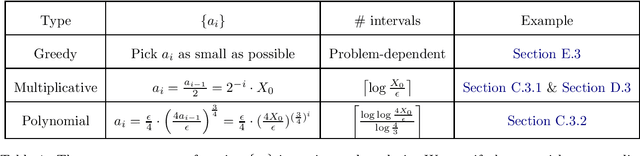

We present a general framework for deriving high-probability bounds on stochastic dynamics in learning algorithms. The framework composes standard techniques (a stopping time, a martingale concentration inequality, and a closed-form solution) into a streamlined three-step recipe, together with a general and flexible principle for implementing it. To demonstrate its power and flexibility, we apply it to three very different learning problems: stochastic gradient descent for strongly convex functions, streaming principal component analysis, and linear bandits with stochastic gradient descent updates. We improve the state-of-the-art bounds for all three dynamics.
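To make the notion of a high-probability bound concrete, the following is a minimal simulation sketch, not the paper's framework or analysis. It runs noisy SGD on the one-dimensional strongly convex function f(x) = (mu/2) x^2 and compares the average final squared error with its empirical 95th percentile across many independent runs. The function names, the Gaussian noise model, the step size 1/(mu t), and all parameter values are assumptions chosen only for illustration.

```python
import random


def sgd_final_error(steps, mu=1.0, sigma=1.0, x0=5.0, rng=None):
    """Run noisy SGD on f(x) = (mu / 2) * x**2 and return the final squared error.

    Assumptions (illustrative only): additive Gaussian gradient noise with
    standard deviation sigma, and step size 1 / (mu * t) at step t.
    """
    rng = rng or random.Random()
    x = x0
    for t in range(1, steps + 1):
        noisy_grad = mu * x + rng.gauss(0.0, sigma)  # true gradient plus noise
        x -= noisy_grad / (mu * t)                   # decaying step size
    return x * x  # the minimizer is x = 0, so this is the squared distance to it


def empirical_quantile(values, q):
    """Return the empirical q-quantile of a list of numbers."""
    ordered = sorted(values)
    index = min(len(ordered) - 1, int(q * len(ordered)))
    return ordered[index]


rng = random.Random(0)  # fixed seed so the experiment is reproducible
errors = [sgd_final_error(steps=1000, rng=rng) for _ in range(2000)]

mean_error = sum(errors) / len(errors)   # what an in-expectation bound controls
q95 = empirical_quantile(errors, 0.95)   # what a 95% high-probability bound controls

print(f"mean squared error:        {mean_error:.5f}")
print(f"95th percentile of error:  {q95:.5f}")
```

A bound in expectation controls only the first number, while a high-probability bound controls a quantile such as the second, which is the kind of guarantee the abstract refers to.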
