A Game-theoretic Understanding of Repeated Explanations in ML Models

Feb 05, 2022

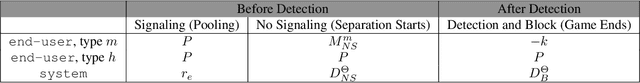

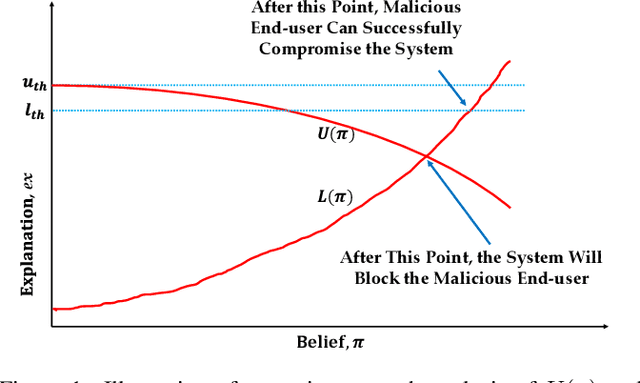

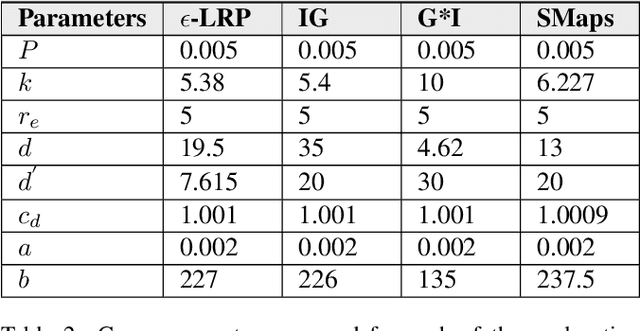

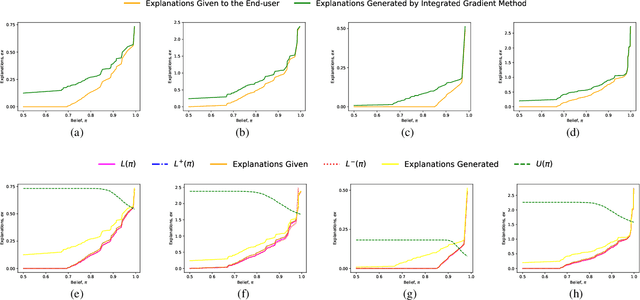

This paper uses game theory to formally model the strategic repeated interactions between a system, comprising a machine learning (ML) model and an associated explanation method, and an end-user who seeks a prediction/label and its explanation for a query/input. In this game, a malicious end-user must strategically decide when to stop querying and attempt to compromise the system, while the system must strategically decide how much information (in the form of noisy explanations) to share with the end-user and when to stop sharing, all without knowing the type (honest/malicious) of the end-user. The paper models this trade-off using a continuous-time stochastic signaling game framework and characterizes the Markov perfect equilibrium state within that framework.
