A Framework to Learn with Interpretation

Oct 19, 2020

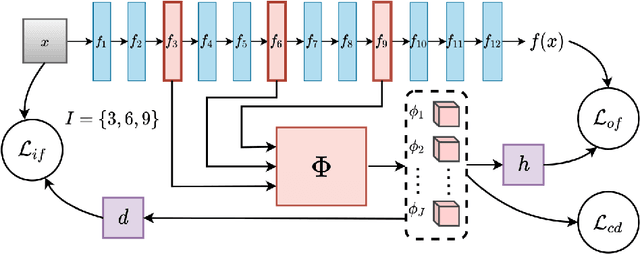

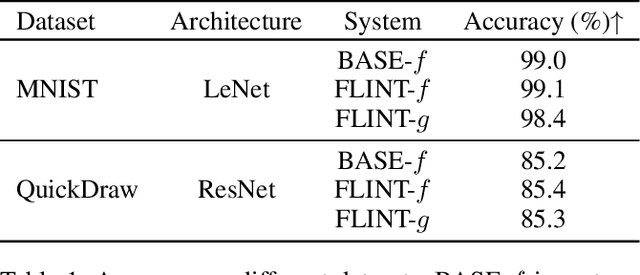

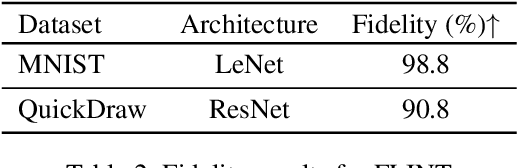

With the increasingly widespread use of deep neural networks in critical decision-making applications, interpretability of these models is becoming imperative. We consider the problem of jointly learning a predictive model and its associated interpretation model. The task of the interpreter is to provide both local and global interpretability of the predictive model in terms of human-understandable, high-level attribute functions, without any loss of accuracy. This is achieved by a dedicated architecture and well-chosen regularization penalties. We seek a small dictionary of attribute functions that take as inputs the outputs of selected hidden layers and whose outputs feed a linear classifier. We impose a high level of conciseness by constraining only a few attributes to activate for a given input, using a real-entropy-based criterion, while enforcing fidelity to both the inputs and the outputs of the predictive model. A major advantage of simultaneous learning is that the predictive neural network benefits from the interpretability constraint as well. We also develop a more detailed pipeline, based on common and novel simple tools, for understanding the learnt features. We show on two datasets, MNIST and QuickDraw, their relevance for both global and local interpretability.
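To make the ingredients named in the abstract concrete, here is a minimal NumPy sketch of an interpreter head: attribute functions computed from hidden-layer outputs, a linear classifier on top of them, an entropy-style conciseness penalty, and an output-fidelity term. This is an illustration under assumptions, not the authors' implementation: the function names, the ReLU attribute layer, the normalization used in the entropy, and the cross-entropy form of the fidelity term are all hypothetical choices, and the input-fidelity (reconstruction) term is omitted.

```python
import numpy as np

def softmax(z, axis=-1):
    """Numerically stable softmax."""
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def interpreter_forward(hidden, W_attr, W_lin):
    """Hypothetical interpreter head.

    hidden : (batch, d) outputs of a selected hidden layer of the predictor
    W_attr : (d, n_attributes) weights of the attribute dictionary (assumed linear + ReLU)
    W_lin  : (n_attributes, n_classes) weights of the linear classifier
    """
    attrs = np.maximum(hidden @ W_attr, 0.0)  # non-negative attribute activations
    probs = softmax(attrs @ W_lin)            # interpreter's class probabilities
    return attrs, probs

def conciseness_penalty(attrs, eps=1e-12):
    """Mean entropy of the normalized attribute activations.

    Low entropy means only a few attributes are active for a given input.
    The normalization is an assumption made for this sketch.
    """
    p = attrs / (attrs.sum(axis=1, keepdims=True) + eps)
    return float(np.mean(-(p * np.log(p + eps)).sum(axis=1)))

def output_fidelity(pred_probs, interp_probs, eps=1e-12):
    """Cross-entropy between predictor and interpreter outputs (assumed form)."""
    return float(np.mean(-(pred_probs * np.log(interp_probs + eps)).sum(axis=1)))
```

In a joint training setup, terms like these would be added, with weights, to the predictor's own classification loss, so that gradients from the interpretability penalties also reach the shared hidden layers.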
