A Framework for Private Matrix Analysis


Sep 06, 2020

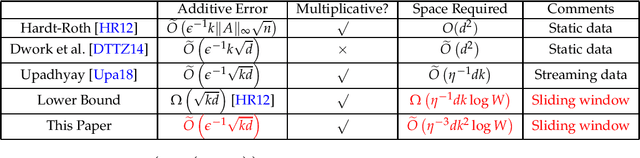

We study private matrix analysis in the sliding window model, where only the last $W$ updates to a matrix are considered useful for analysis. We give the first efficient $o(W)$-space differentially private algorithms for spectral approximation, principal component analysis, and linear regression. We also initiate the study of, and give efficient differentially private algorithms for, two important variants of principal component analysis: sparse principal component analysis and non-negative principal component analysis. Prior to our work, no such result was known for sparse or non-negative differentially private principal component analysis, even in the static data setting. These algorithms are obtained by identifying sufficient conditions on positive semidefinite matrices formed from streamed matrices. We also show a lower bound on the space required to compute a low-rank approximation, even if the algorithm is allowed a multiplicative approximation and incurs additive error. This follows via a reduction to a certain communication complexity problem.
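To make the sliding window model concrete, the following minimal Python sketch maintains the positive semidefinite Gram matrix $A^\top A$ of the last $W$ streamed rows. It is an illustrative baseline only, not the algorithm from the paper: it stores all $W$ rows (so it uses $O(W)$ space rather than $o(W)$) and adds no noise, so it is not differentially private. The class name `SlidingWindowGram` and its methods are hypothetical names chosen for this example.

```python
from collections import deque


class SlidingWindowGram:
    """Naive baseline: exact Gram matrix of the last `window` rows.

    Assumption for illustration: each update is one row of dimension `dim`.
    Uses O(window * dim) space and provides no privacy guarantee.
    """

    def __init__(self, window, dim):
        self.window = window
        self.dim = dim
        self.rows = deque()  # the rows currently inside the window
        self.gram = [[0.0] * dim for _ in range(dim)]

    def _rank_one(self, row, sign):
        # Add (sign = +1) or subtract (sign = -1) the outer product row^T row.
        for i in range(self.dim):
            for j in range(self.dim):
                self.gram[i][j] += sign * row[i] * row[j]

    def update(self, row):
        if len(row) != self.dim:
            raise ValueError("row has the wrong dimension")
        self.rows.append(list(row))
        self._rank_one(row, 1.0)
        # Expire the oldest row once the window holds more than `window` rows.
        if len(self.rows) > self.window:
            expired = self.rows.popleft()
            self._rank_one(expired, -1.0)


sw = SlidingWindowGram(window=2, dim=2)
for r in ([1.0, 0.0], [0.0, 1.0], [2.0, 0.0]):
    sw.update(r)
# After three updates, only the rows [0, 1] and [2, 0] remain in the window.
```

The difficulty the abstract points to is that expiring an old row here requires remembering it, which is what forces $O(W)$ space in this baseline.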
