A Framework for Comparing Uncertain Inference Systems to Probability


Mar 27, 2013

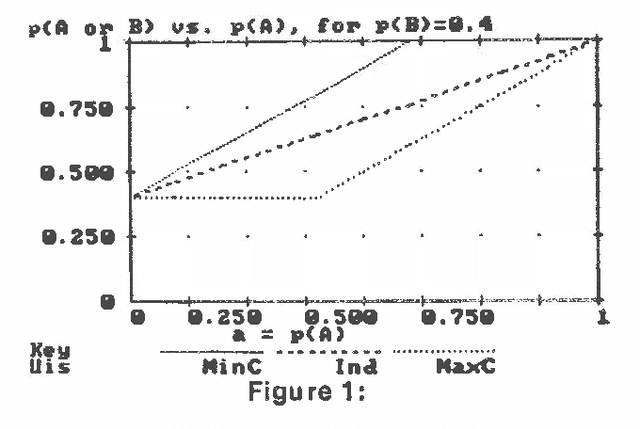

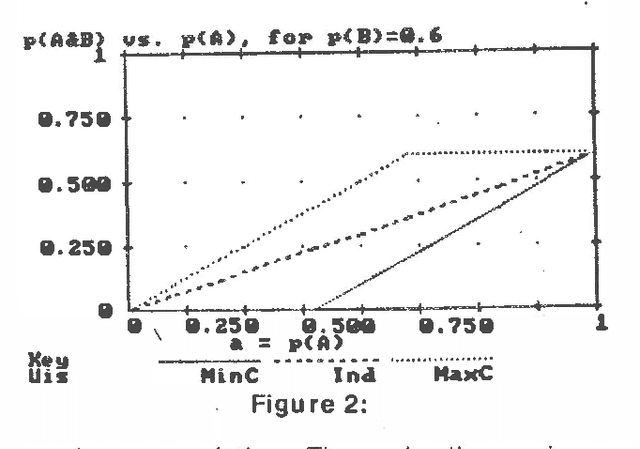

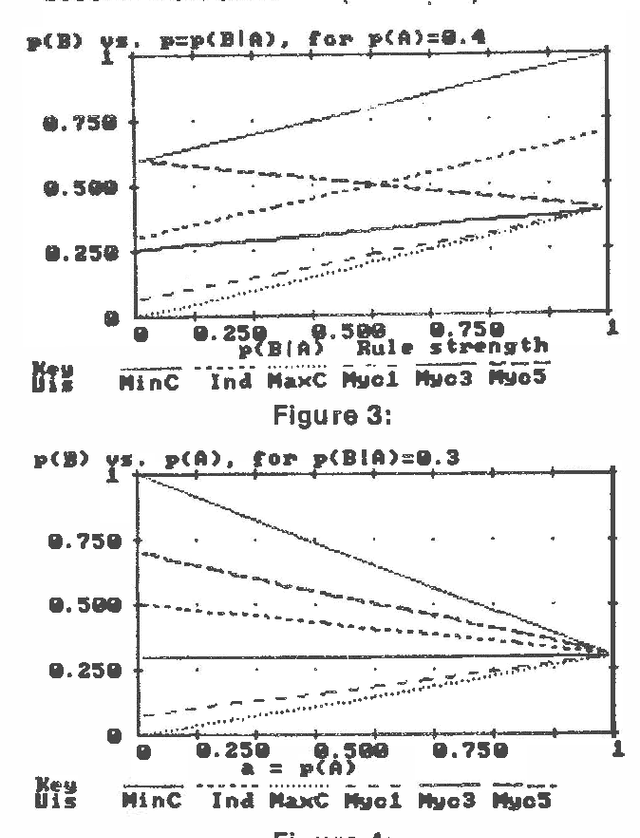

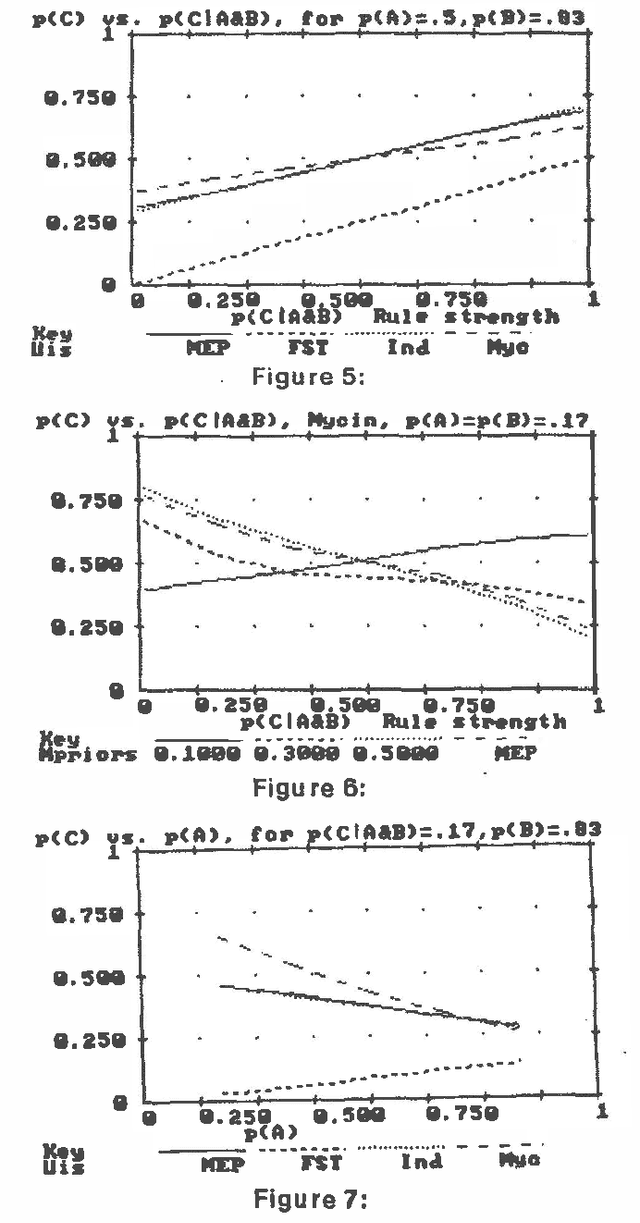

Several different uncertain inference systems (UISs) have been developed for representing uncertainty in rule-based expert systems. Some of these, such as Mycin's Certainty Factors, Prospector, and Bayes' Networks, were designed as approximations to probability; others, such as Fuzzy Set Theory and Dempster-Shafer Belief Functions, were not. How different are these UISs in practice, and does it matter which one you use? When combining and propagating uncertain information, each UIS must, at least implicitly, make assumptions about correlations that are not explicitly specified. The maximum entropy principle, together with minimum cross-entropy updating, provides a way of filling in the missing specification that minimizes the additional information assumed, and thus offers a standard against which the other UISs can be compared. We describe a framework for the experimental comparison of the performance of different UISs and provide some illustrative results.
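As a small illustration of the "missing correlation" problem, consider two binary propositions A and B for which only the marginals P(A) and P(B) are known. Any UIS that outputs a value for "A and B" is implicitly assuming something about how A and B are correlated. The sketch below is not the paper's framework; it is a hypothetical toy comparison, assuming Python and helper names (`maxent_conjunction`, `fuzzy_and`, `min_cross_entropy_update`) chosen for illustration. It finds the maximum-entropy joint distribution by a brute-force search over P(A and B) within its feasible (Fréchet) bounds, compares it with the fuzzy-set `min` conjunction, and applies minimum cross-entropy updating for a new constraint on P(B), which for a constraint on a single partition takes the form of Jeffrey's rule.

```python
import math

def entropy(cells):
    """Shannon entropy (natural log) of a discrete distribution; zero cells contribute 0."""
    return -sum(p * math.log(p) for p in cells if p > 0)

def maxent_conjunction(pa, pb, steps=10000):
    """Return the P(A and B) that maximizes joint entropy given only P(A) and P(B).

    Brute-force grid search over the feasible range (the Frechet bounds).
    Analytically the maximum is at the product pa * pb, i.e. independence.
    """
    lo = max(0.0, pa + pb - 1.0)
    hi = min(pa, pb)
    best_x, best_h = lo, -1.0
    for i in range(steps + 1):
        x = lo + (hi - lo) * i / steps
        # The four cells of the joint: AB, A~B, ~AB, ~A~B.
        cells = [x, pa - x, pb - x, 1.0 - pa - pb + x]
        h = entropy(cells)
        if h > best_h:
            best_x, best_h = x, h
    return best_x

def fuzzy_and(a, b):
    """Fuzzy Set Theory conjunction (min), which implicitly assumes maximal overlap."""
    return min(a, b)

def independent_joint(pa, pb):
    """Joint over (a, b) booleans under independence (the maxent joint given marginals)."""
    return {
        (True, True): pa * pb,
        (True, False): pa * (1 - pb),
        (False, True): (1 - pa) * pb,
        (False, False): (1 - pa) * (1 - pb),
    }

def min_cross_entropy_update(joint, q_b):
    """Update `joint` so that P'(B=True) = q_b with minimum cross-entropy (Jeffrey's rule).

    Each cell is rescaled by new/old probability of its B value, so conditional
    probabilities given B are left unchanged.
    """
    pb = sum(p for (a, b), p in joint.items() if b)
    updated = {}
    for (a, b), p in joint.items():
        new = q_b if b else 1.0 - q_b
        old = pb if b else 1.0 - pb
        updated[(a, b)] = p * new / old
    return updated

if __name__ == "__main__":
    pa, pb = 0.7, 0.6
    print("fuzzy AND:  ", fuzzy_and(pa, pb))
    print("maxent AND: ", round(maxent_conjunction(pa, pb), 3))
    posterior = min_cross_entropy_update(independent_joint(pa, pb), 0.9)
    print("P'(A) after setting P(B)=0.9:", round(posterior[(True, True)] + posterior[(True, False)], 3))
```

With P(A) = 0.7 and P(B) = 0.6, the fuzzy `min` gives 0.6 for "A and B", while the maximum-entropy value is the product, about 0.42. The gap between such answers is the kind of divergence from the maximum-entropy standard that the framework described above is meant to measure.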
