A Forest from the Trees: Generation through Neighborhoods

Paper and Code

Feb 04, 2019

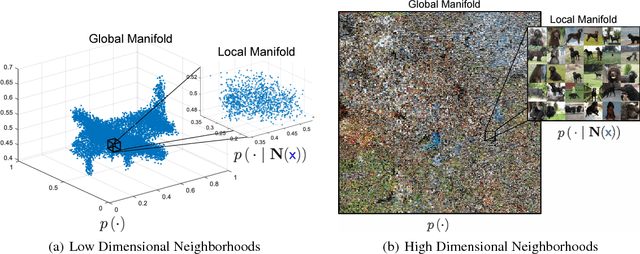

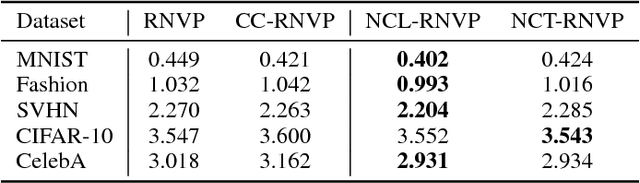

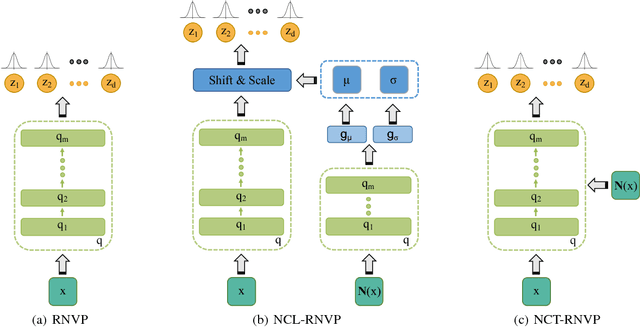

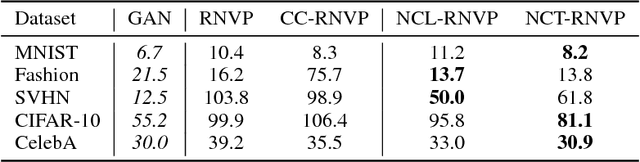

In this work, we propose to learn a generative model using both learned features (through a latent space) and memories (through neighbors). Although human learning makes seamless use of both learned perceptual features and instance recall, current generative learning paradigms only make use of one of these two components. Take, for instance, flow models, which learn a latent space of invertible features that follow a simple distribution. Conversely, kernel density techniques use instances to shift a simple distribution into an aggregate mixture model. Here we propose multiple methods to enhance the latent space of a flow model with neighborhood information. Not only does our proposed framework represent a more human-like approach by leveraging both learned features and memories, but it may also be viewed as a step forward in non-parametric methods. The efficacy of our model is shown empirically with standard image datasets. We observe compelling results and a significant improvement over baselines.
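
To make the contrast in the abstract concrete, here is a minimal one-dimensional sketch of the two density estimators it mentions. It is an illustration under stated assumptions, not the paper's model. The affine flow, the Gaussian kernel, and the names `gaussian_logpdf`, `flow_logpdf`, and `kde_logpdf` are all hypothetical choices made for this example. A flow scores a point by mapping it to a latent value and applying the change-of-variables formula. A kernel density estimate scores it by averaging simple densities centered on stored instances.

```python
import math


def gaussian_logpdf(z, mean=0.0, std=1.0):
    """Log-density of a 1-D Gaussian N(mean, std^2) evaluated at z."""
    return (-0.5 * ((z - mean) / std) ** 2
            - math.log(std)
            - 0.5 * math.log(2.0 * math.pi))


def flow_logpdf(x, shift, log_scale):
    """Log-density under a toy affine flow (an assumption for illustration).

    The invertible map is z = (x - shift) * exp(-log_scale), and z follows
    a standard normal. Change of variables adds log|dz/dx| = -log_scale.
    """
    z = (x - shift) * math.exp(-log_scale)
    return gaussian_logpdf(z) - log_scale


def kde_logpdf(x, instances, bandwidth):
    """Log-density under a Gaussian kernel density estimate.

    Each stored instance contributes one Gaussian component, and the
    estimate is their equally weighted mixture. The sum is computed with
    the log-sum-exp trick for numerical stability.
    """
    comps = [gaussian_logpdf(x, mean=xi, std=bandwidth) for xi in instances]
    m = max(comps)
    log_sum = m + math.log(sum(math.exp(c - m) for c in comps))
    return log_sum - math.log(len(instances))


if __name__ == "__main__":
    data = [-1.0, 0.2, 0.5, 2.0]  # made-up instances, for illustration only
    print(flow_logpdf(0.3, shift=0.4, log_scale=0.1))
    print(kde_logpdf(0.3, data, bandwidth=0.5))
```

In this toy setting the flow's parameters (`shift`, `log_scale`) stand in for learned features, while the KDE's `instances` stand in for memories. The abstract describes combining the two by enriching a flow's latent space with neighborhood information. How that is done is specified in the paper itself and is not reproduced here.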
