A Federated Learning Framework for Non-Intrusive Load Monitoring


Apr 04, 2021

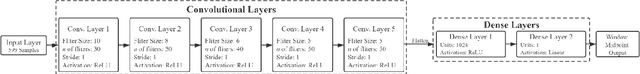

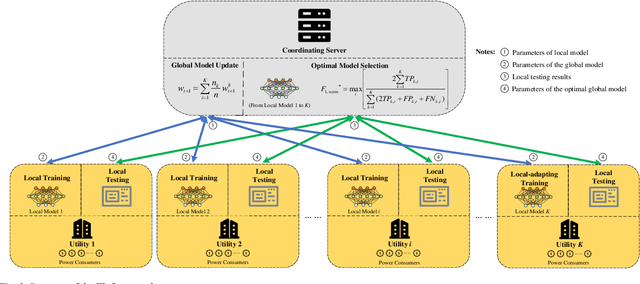

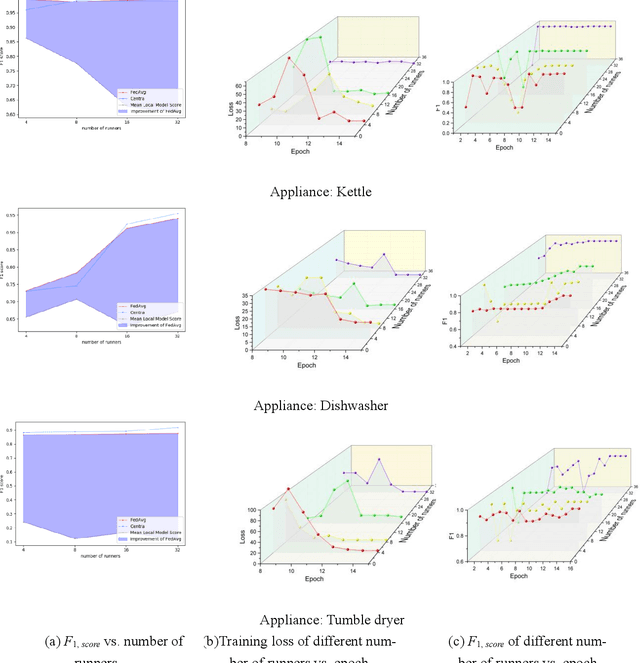

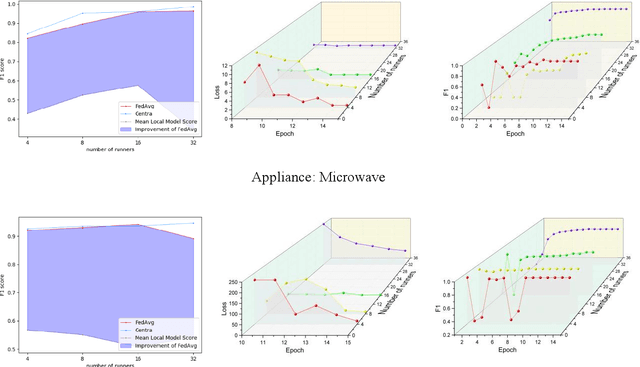

Non-intrusive load monitoring (NILM) decomposes a household's aggregate power reading into appliance-level consumption, which benefits both consumer behavior analysis and energy conservation. Deep-learning-based NILM has become a focus of research. Training a better neural network requires large amounts of data that cover a variety of appliances and reflect consumers' usage habits. Data cooperation among the utilities and DNOs (distribution network operators) that own NILM data has therefore become increasingly important. Such cooperation, however, carries risks of consumer privacy leakage and loss of control over the data. To address these problems, this work sets up a framework that improves NILM performance with federated learning (FL). In the framework, utilities share model weights rather than local data. The global model is generated by weighted averaging of the locally trained model weights, which aggregates the information learned at each site. Optimal model selection helps choose the model that adapts best to data from different domains. Experiments show that the proposal improves the performance of local NILM runners. The framework's performance is close to that of a centrally trained model obtained from the pooled data without privacy protection.
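
The weighted-averaging step described in the abstract can be illustrated with a minimal sketch. The abstract does not say how the averaging weights are chosen, so this example assumes the common federated-averaging convention of weighting each utility by its number of local training samples. The function name `federated_average`, the dictionary layout of the weights, and the sample counts are all hypothetical and are not taken from the paper's code.

```python
from typing import Dict, List

# Hypothetical layout: each model is a dict mapping a layer name to a flat
# list of parameters. A real NILM network would hold tensors instead.
Weights = Dict[str, List[float]]


def federated_average(local_weights: List[Weights],
                      sample_counts: List[int]) -> Weights:
    """Average locally trained weights, weighted by local sample count.

    Assumption: the averaging weight of each utility is proportional to
    the size of its local dataset. The paper may use a different rule.
    """
    if not local_weights or len(local_weights) != len(sample_counts):
        raise ValueError("need one sample count per local model")
    total = sum(sample_counts)
    if total <= 0:
        raise ValueError("total sample count must be positive")

    global_weights: Weights = {}
    for name, reference in local_weights[0].items():
        averaged = [0.0] * len(reference)
        for weights, count in zip(local_weights, sample_counts):
            share = count / total  # this utility's contribution
            for i, value in enumerate(weights[name]):
                averaged[i] += share * value
        global_weights[name] = averaged
    return global_weights


# Two utilities share only their weights; the raw load data stays local.
utility_a = {"dense": [1.0, 2.0]}
utility_b = {"dense": [3.0, 6.0]}
global_model = federated_average([utility_a, utility_b], [1, 3])
```

Only the weight dictionaries and the sample counts cross the boundary between a utility and the aggregator, which is the property the framework relies on for privacy protection.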
