A Fast Convoluted Story: Scaling Probabilistic Inference for Integer Arithmetic

Paper and Code

Oct 16, 2024

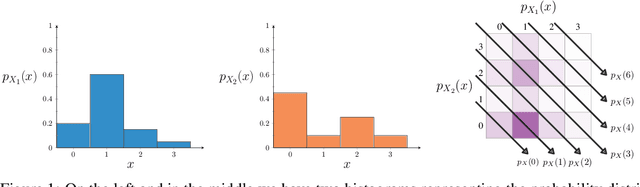

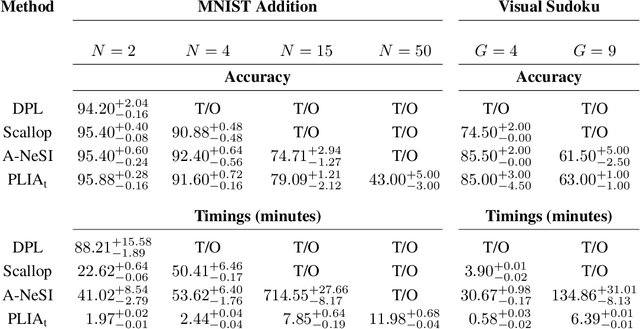

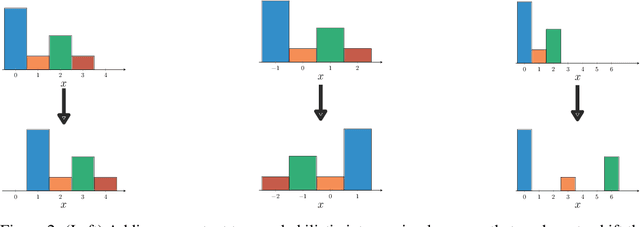

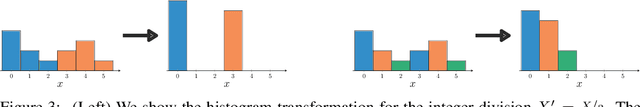

As illustrated by the success of integer linear programming, linear integer arithmetic is a powerful tool for modelling combinatorial problems. Furthermore, the probabilistic extension of linear programming has been used to formulate problems in neurosymbolic AI. However, two key problems persist that prevent the adoption of neurosymbolic techniques beyond toy problems. First, probabilistic inference is inherently hard, #P-hard to be precise. Second, the discrete nature of integers renders the construction of meaningful gradients challenging, which is problematic for learning. In order to mitigate these issues, we formulate linear arithmetic over integer-valued random variables as tensor manipulations that can be implemented in a straightforward fashion using modern deep learning libraries. At the core of our formulation lies the observation that the addition of two integer-valued random variables can be performed by adapting the fast Fourier transform to probabilities in the log-domain. By relying on tensor operations we obtain a differentiable data structure, which unlocks, virtually for free, gradient-based learning. In our experimental validation we show that tensorising probabilistic linear integer arithmetic and leveraging the fast Fourier transform allows us to push the state of the art by several orders of magnitude in terms of inference and learning times.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge