A duality connecting neural network and cosmological dynamics

Feb 22, 2022

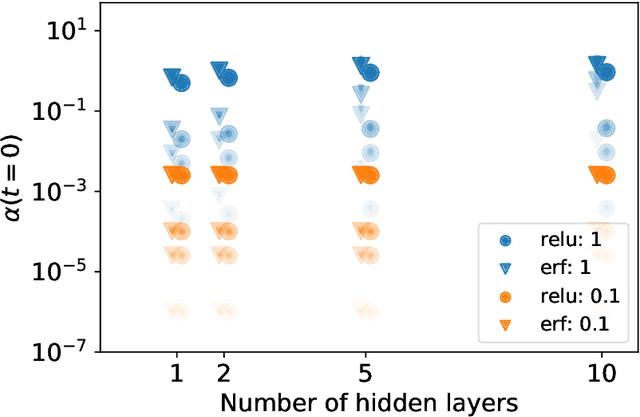

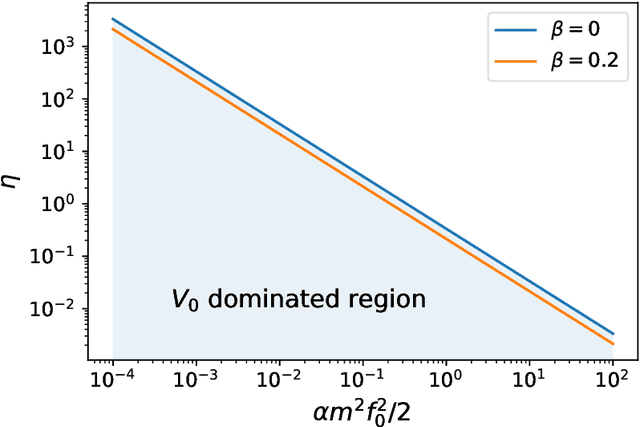

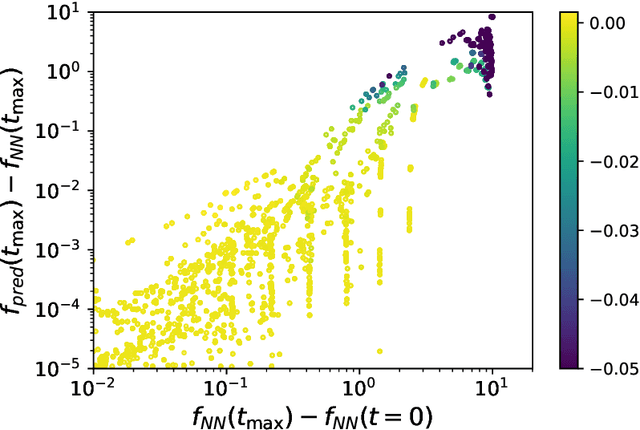

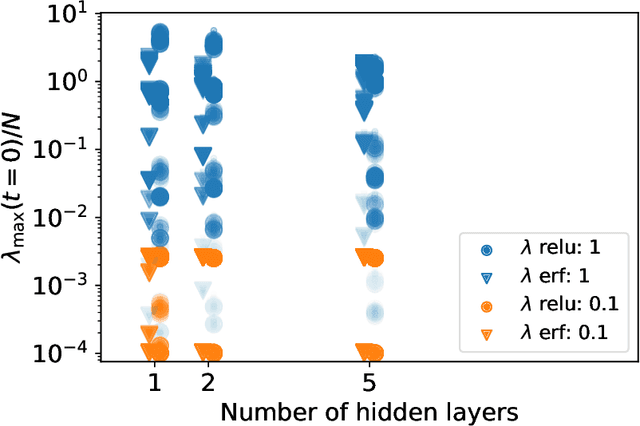

We demonstrate that the dynamics of neural networks trained with gradient descent and the dynamics of scalar fields in a flat, vacuum-energy-dominated Universe share a profound structural relationship. This duality provides a framework for synergies between the two systems: new ways to understand and explain neural network dynamics, and new ways of simulating and describing early-Universe models. Working in the continuous-time limit of neural networks, we analytically match the dynamics of the mean background and the dynamics of small perturbations around the mean field, highlighting potential differences in separate limits. We perform empirical tests of this analytic description and quantitatively show the dependence of the effective field theory parameters on the hyperparameters of the neural network. As a result of this duality, the cosmological constant is matched inversely to the learning rate in the gradient descent update.
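For orientation, the kind of correspondence the abstract describes can be illustrated with a heuristic textbook comparison. This is a sketch under stated assumptions, not the paper's derivation: the symbols $\theta$, $L$, $\eta$, $\phi$, $V$, $H$, $\Lambda$, and $\Delta t$ are introduced here for illustration, units with $c = 1$ are assumed, and only the friction-dominated (overdamped) limit of the scalar field equation is considered. The precise matching derived in the paper may take a different form.

```latex
% Gradient descent and its continuous-time limit (t = k \eta):
\theta_{k+1} = \theta_k - \eta \, \nabla_\theta L(\theta_k)
\quad \longrightarrow \quad
\frac{d\theta}{dt} = - \nabla_\theta L(\theta)

% Homogeneous scalar field in a flat, vacuum-energy-dominated Universe,
% where the Hubble rate is constant:
\ddot{\phi} + 3 H \dot{\phi} + V'(\phi) = 0,
\qquad
H^2 = \frac{\Lambda}{3}

% Overdamped limit (assumption: |\ddot{\phi}| \ll 3 H |\dot{\phi}|):
\dot{\phi} \approx - \frac{V'(\phi)}{3H}

% Discretising with a time step \Delta t gives a gradient-descent-like update:
\phi_{k+1} = \phi_k - \eta_{\mathrm{eff}} \, V'(\phi_k),
\qquad
\eta_{\mathrm{eff}} = \frac{\Delta t}{3H} = \frac{\Delta t}{\sqrt{3 \Lambda}}
```

In this simplified picture the potential $V$ plays the role of the loss $L$, the Hubble friction term sets the step size, and a larger cosmological constant corresponds to a smaller effective learning rate, which is qualitatively in line with the inverse matching stated in the abstract.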
