A developmental approach for training deep belief networks


Jul 12, 2022

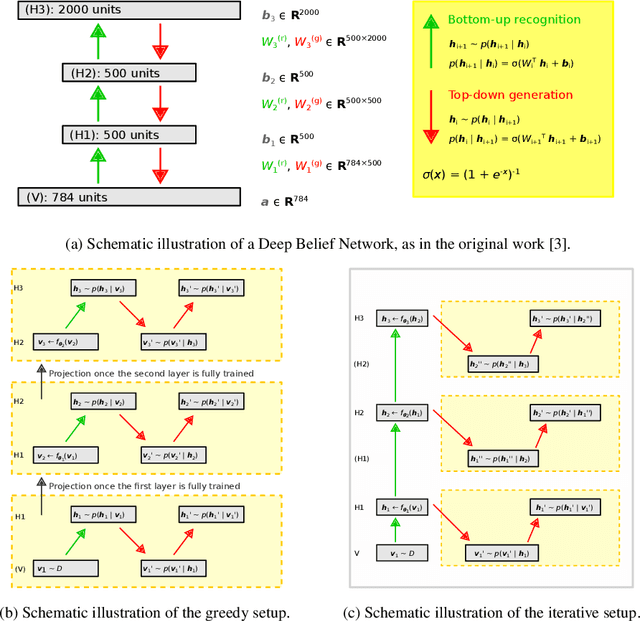

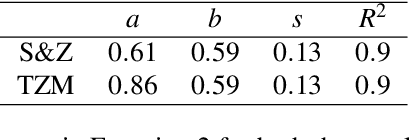

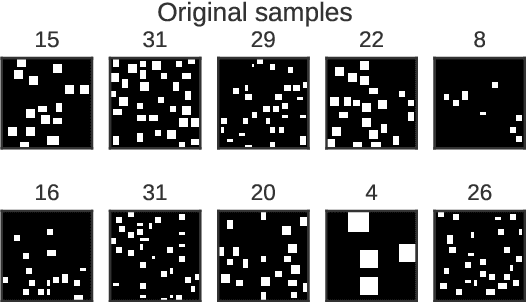

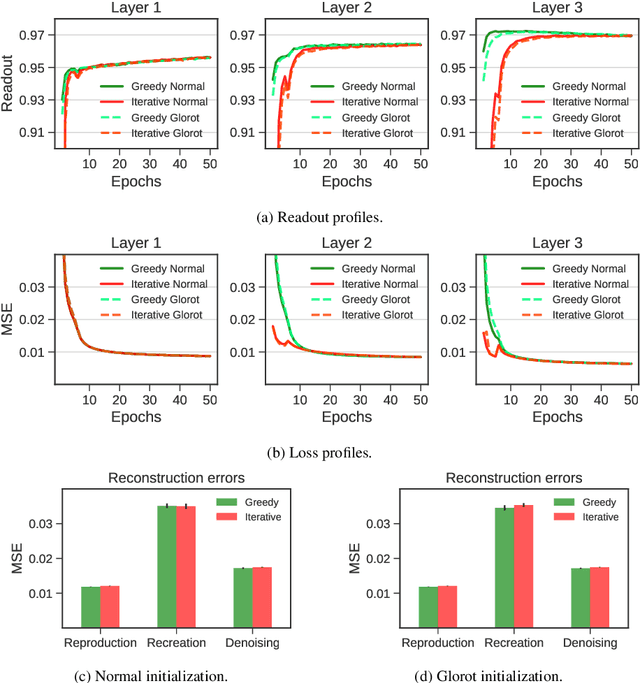

Deep belief networks (DBNs) are stochastic neural networks that can extract rich internal representations of the environment from sensory data. DBNs had a catalytic effect in triggering the deep learning revolution, demonstrating for the first time the feasibility of unsupervised learning in networks with many layers of hidden neurons. Thanks to their biological and cognitive plausibility, these hierarchical architectures have also been successfully exploited to build computational models of human perception and cognition in a variety of domains. However, learning in DBNs is usually carried out in a greedy, layer-wise fashion, which makes it impossible to simulate the holistic development of cortical circuits. Here we present iDBN, an iterative learning algorithm for DBNs that jointly updates the connection weights across all layers of the hierarchy. We test our algorithm on two different sets of visual stimuli, and we show that network development can also be tracked in terms of graph theoretical properties. DBNs trained using our iterative approach achieve a final performance comparable to that of their greedy counterparts, while also making it possible to accurately analyze the gradual development of internal representations in the generative model. Our work paves the way for the use of iDBN in modeling neurocognitive development.
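The contrast between greedy and iterative training can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: it assumes each layer is a binary restricted Boltzmann machine trained with one step of contrastive divergence (CD-1), and it assumes that "iterative" means every layer receives one update per epoch, using the current activations of the layer below. The names `RBM`, `make_dbn`, `train_greedy`, and `train_iterative`, as well as the learning rate and initialization, are hypothetical choices made for illustration.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class RBM:
    """Binary RBM trained with CD-1 (an assumed building block)."""

    def __init__(self, n_visible, n_hidden, rng):
        self.W = rng.normal(0.0, 0.01, size=(n_visible, n_hidden))
        self.b_v = np.zeros(n_visible)
        self.b_h = np.zeros(n_hidden)
        self.rng = rng

    def hidden_probs(self, v):
        return sigmoid(v @ self.W + self.b_h)

    def visible_probs(self, h):
        return sigmoid(h @ self.W.T + self.b_v)

    def cd1_step(self, v0, lr=0.05):
        # Positive phase: hidden activations driven by the data.
        h0 = self.hidden_probs(v0)
        h0_sample = (self.rng.random(h0.shape) < h0).astype(float)
        # Negative phase: one step of Gibbs sampling.
        v1 = self.visible_probs(h0_sample)
        h1 = self.hidden_probs(v1)
        n = v0.shape[0]
        self.W += lr * (v0.T @ h0 - v1.T @ h1) / n
        self.b_v += lr * (v0 - v1).mean(axis=0)
        self.b_h += lr * (h0 - h1).mean(axis=0)


def make_dbn(sizes, seed=0):
    """Stack RBMs so that layer k's hidden units feed layer k+1."""
    rng = np.random.default_rng(seed)
    return [RBM(a, b, rng) for a, b in zip(sizes[:-1], sizes[1:])]


def train_greedy(layers, data, epochs):
    # Each layer is trained to completion before the next one starts.
    x = data
    for rbm in layers:
        for _ in range(epochs):
            rbm.cd1_step(x)
        x = rbm.hidden_probs(x)


def train_iterative(layers, data, epochs):
    # Every layer is updated once per epoch, so all layers develop together.
    for _ in range(epochs):
        x = data
        for rbm in layers:
            rbm.cd1_step(x)
            x = rbm.hidden_probs(x)
```

Under these assumptions, the two schedules differ only in loop order. In `train_iterative`, upper layers start learning from immature lower-layer representations, so the state of the whole hierarchy can be inspected after every epoch, for example by calling `train_iterative(make_dbn([784, 500, 500]), data, epochs=1)` repeatedly, where `data` is a hypothetical array of binarized input patterns.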
