A Deep Learning Technique using a Sequence of Follow Up X-Rays for Disease classification


Mar 28, 2022

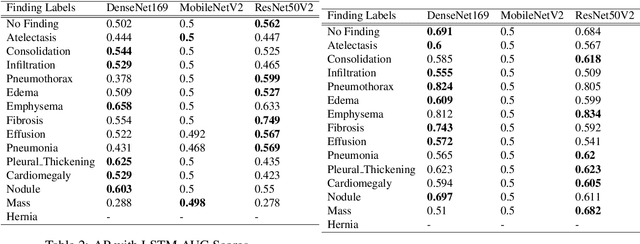

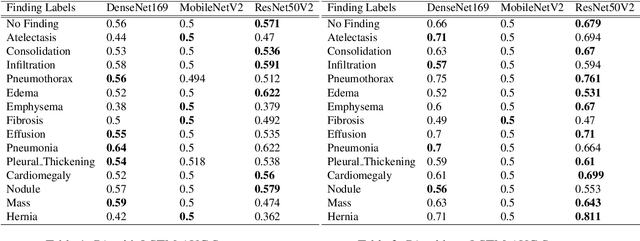

Predicting lung and heart diseases with deep learning is a central concern for many researchers around the world, particularly in the medical field. In this paper, we present a new perspective on the familiar problem of disease classification from X-rays. We hypothesize that classifying from a patient's follow-up history, namely their three most recent chest X-ray images, performs better than classifying from a single chest X-ray image when an internal CNN is used for feature extraction. We find that the generic deep learning architecture we propose for this problem performs well when three X-ray images are provided per sample for each patient. We also establish that the CNN models predict each patient's disease labels better when no additional layers are placed before the output classification. We report our results as ROC curves and AUROC scores. We define a new approach of collecting three X-ray images for training deep learning models, and we conclude that it improves the performance of the models. We show that ResNet generally gives better results than the other CNN models used in the feature extraction phase. With our approach to data pre-processing, image training, and pre-trained models, we believe this research can assist medical institutions around the world by improving the prediction of patients' symptoms and supporting more accurate diagnosis and treatment.
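Since the results are reported as AUROC scores per disease label, it may help to see how such a score can be computed. The following is a minimal sketch in plain Python, not the authors' evaluation code: it uses the pairwise definition of AUROC (the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one, with ties counted as one half). The function names `auroc` and `per_label_auroc`, the label names, and all scores are hypothetical and chosen only for illustration.

```python
from typing import Dict, List, Sequence


def auroc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """AUROC for one binary label via pairwise comparison of scores."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUROC needs at least one positive and one negative")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0   # positive ranked above negative
            elif p == n:
                wins += 0.5   # a tie counts as half a win
    return wins / (len(positives) * len(negatives))


def per_label_auroc(
    y_true: Sequence[Sequence[int]],
    y_score: Sequence[Sequence[float]],
    label_names: List[str],
) -> Dict[str, float]:
    """Compute one AUROC per disease label (column) of a multi-label task."""
    return {
        name: auroc([row[j] for row in y_true], [row[j] for row in y_score])
        for j, name in enumerate(label_names)
    }


# Illustrative data only: four patients, two hypothetical disease labels.
label_names = ["Cardiomegaly", "Effusion"]
y_true = [[1, 0], [0, 1], [1, 1], [0, 0]]
y_score = [[0.9, 0.2], [0.3, 0.8], [0.6, 0.15], [0.4, 0.1]]

results = per_label_auroc(y_true, y_score, label_names)
print(results)
```

The pairwise loop is quadratic in the number of samples, which is fine for a small illustration; for a real evaluation set, a rank-based implementation from an established library would be the more practical choice.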
