A Critical Analysis of Internal Reliability for Uncertainty Quantification of Dense Image Matching in Multi-View Stereo


Sep 17, 2023

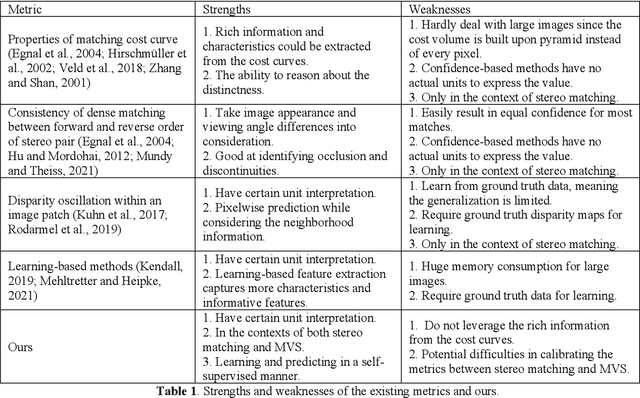

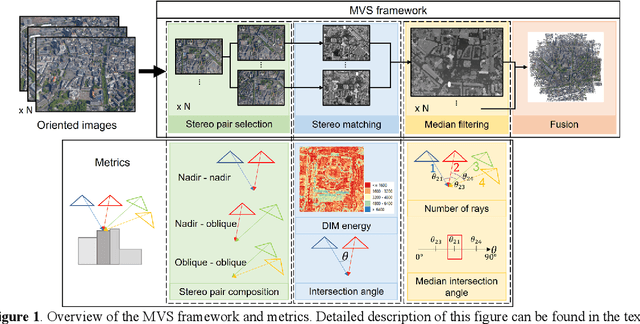

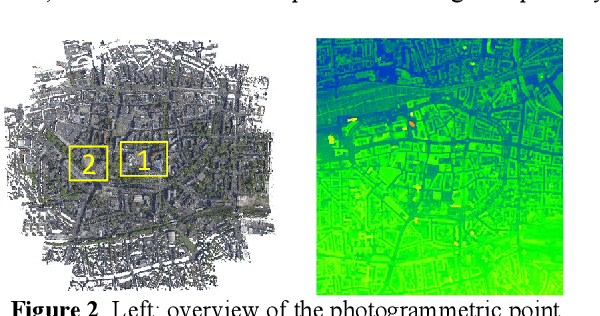

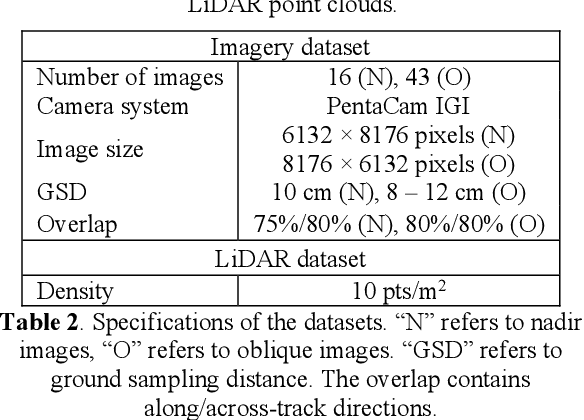

Photogrammetrically derived point clouds are widely used in civilian applications because they are inexpensive and flexible to acquire. Their quality is typically assessed against reference data such as LiDAR point clouds; when no reference data are available, the assessment becomes challenging. Because these point clouds are algorithmically derived, their accuracy and precision vary strongly with the camera network, scene complexity, and dense image matching (DIM) algorithm, and there is no standard error metric for determining per-point errors. The theory of internal reliability of camera networks has been well studied through first-order error estimation of Bundle Adjustment (BA), which describes the errors of 3D points under the assumption of known measurement errors. However, the measurement errors of DIM algorithms are so intricate that every single point may have its own error function, determined by factors such as pixel intensity, texture entropy, and surface smoothness. Despite this complexity, a few common metrics may help estimate the posterior reliability of the derived points, especially in a multi-view stereo (MVS) setup where redundancy is present. In this paper, using an aerial oblique photogrammetric block with LiDAR reference data, we analyze several internal matching metrics within a common MVS framework, including statistics of ray convergence, intersection angles, and DIM energy, among others.
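To make two of the metrics named above concrete, the following is a minimal sketch, not the paper's implementation, of how ray convergence and intersection angles could be computed for a single multi-view point. It assumes each view contributes a camera center and a ray direction toward the matched pixel; the function names `triangulate_rays` and `max_intersection_angle` are hypothetical, and the least-squares ray intersection is a generic technique chosen for illustration.

```python
import numpy as np
from itertools import combinations


def triangulate_rays(centers, directions):
    """Least-squares intersection of n rays (hypothetical helper).

    centers:    (n, 3) camera centers
    directions: (n, 3) ray directions (need not be unit length)
    Returns the 3D point closest to all rays and the per-ray
    point-to-ray distances, used here as a ray-convergence measure.
    """
    centers = np.asarray(centers, dtype=float)
    d = np.asarray(directions, dtype=float)
    d = d / np.linalg.norm(d, axis=1, keepdims=True)
    # Projector onto the plane orthogonal to each ray: I - d d^T
    P = np.eye(3)[None] - d[:, :, None] * d[:, None, :]
    A = P.sum(axis=0)
    b = np.einsum("nij,nj->i", P, centers)
    X = np.linalg.solve(A, b)
    # Perpendicular distance from X to each ray
    residuals = np.linalg.norm(np.einsum("nij,nj->ni", P, X - centers), axis=1)
    return X, residuals


def max_intersection_angle(directions):
    """Largest pairwise angle (degrees) between the rays of one point."""
    d = np.asarray(directions, dtype=float)
    d = d / np.linalg.norm(d, axis=1, keepdims=True)
    angles = [
        np.degrees(np.arccos(np.clip(np.dot(d[i], d[j]), -1.0, 1.0)))
        for i, j in combinations(range(len(d)), 2)
    ]
    return max(angles)


# Illustrative, made-up geometry: three cameras observing the point (0, 0, 1)
centers = np.array([[-1.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 0.0]])
target = np.array([0.0, 0.0, 1.0])
directions = target - centers

X, residuals = triangulate_rays(centers, directions)
angle = max_intersection_angle(directions)
```

In this sketch, small point-to-ray distances indicate well-converging rays, and a larger maximum intersection angle indicates stronger triangulation geometry; how such statistics actually relate to per-point error is the question the paper examines against LiDAR reference data.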
