A Correlation Based Feature Representation for First-Person Activity Recognition


Feb 09, 2018

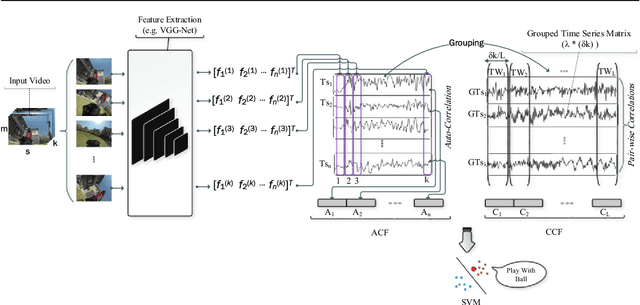

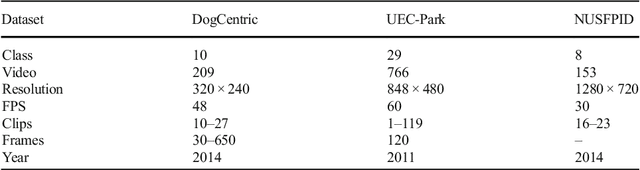

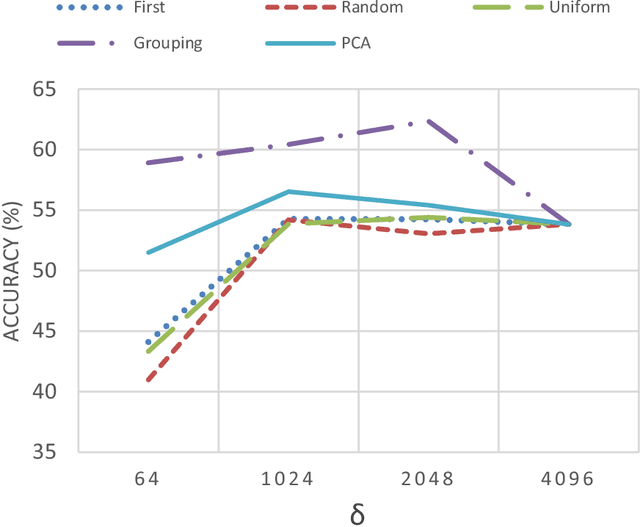

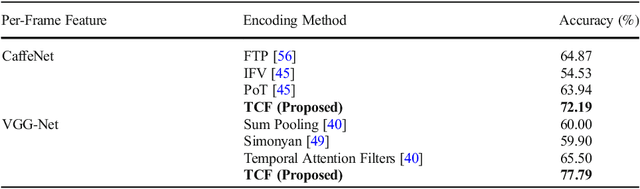

This paper introduces a simple yet efficient activity recognition method for first-person video. The proposed method is suited to representing high-dimensional features, such as those extracted from convolutional neural networks (CNNs). The features extracted per frame (or per segment) are treated as a set of time series, and inter- and intra-time-series relations are used to build the video descriptors. To find the inter-time-series relations, the series are grouped and the linear correlation between each pair of groups is calculated; these relations can represent the scene dynamics and local motions. The grouping strategy considerably reduces the computational cost. Furthermore, we split the series along the temporal direction in order to preserve long-term motions and to focus better on each local time window. To extract cyclic motion patterns, which can be considered primary components of various activities, intra-time-series correlations are exploited. The resulting representation yields highly discriminative features that can be classified linearly. Experiments confirm that our method outperforms state-of-the-art methods at recognizing first-person activities on two challenging first-person datasets.
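The pipeline described in the abstract can be illustrated with a minimal NumPy sketch. It is not the authors' implementation, and several details are assumptions made only for illustration: features are grouped by averaging contiguous blocks of dimensions, the inter-series relation is the Pearson correlation between grouped series, the intra-series relation is a normalized autocorrelation at a few fixed lags, and the names `group_series`, `inter_correlation`, `intra_correlation`, `video_descriptor`, `n_groups`, `n_segments`, and `lags` are all hypothetical.

```python
import numpy as np


def group_series(features, n_groups):
    """Average contiguous blocks of feature dimensions (assumed grouping rule).

    features: array of shape (T, D), one D-dimensional feature per frame.
    Returns an array of shape (T, n_groups).
    """
    blocks = np.array_split(features, n_groups, axis=1)
    return np.stack([block.mean(axis=1) for block in blocks], axis=1)


def inter_correlation(segment):
    """Pearson correlation between every pair of grouped series."""
    corr = np.corrcoef(segment, rowvar=False)
    corr = np.nan_to_num(corr)  # constant series would otherwise give NaN
    upper = np.triu_indices(corr.shape[0], k=1)  # each pair once, no diagonal
    return corr[upper]


def intra_correlation(segment, lags):
    """Normalized autocorrelation of each grouped series at the given lags.

    Each lag must be at least 1 and smaller than the segment length.
    """
    centered = segment - segment.mean(axis=0)
    energy = (centered ** 2).sum(axis=0)
    energy = np.where(energy == 0, 1.0, energy)  # avoid division by zero
    values = [
        (centered[lag:] * centered[:-lag]).sum(axis=0) / energy
        for lag in lags
    ]
    return np.concatenate(values)


def video_descriptor(features, n_groups=8, n_segments=2, lags=(1, 2, 4)):
    """Concatenate inter- and intra-series correlations per temporal segment."""
    grouped = group_series(features, n_groups)
    parts = []
    for segment in np.array_split(grouped, n_segments, axis=0):
        parts.append(inter_correlation(segment))
        parts.append(intra_correlation(segment, lags))
    return np.concatenate(parts)
```

With `G` groups, `S` temporal segments, and `K` lags, this sketch produces a descriptor of length `S * (G * (G - 1) / 2 + G * K)`, which is independent of both the video length and the original feature dimension. Grouping is what keeps the pairwise term small: correlating `G` grouped series rather than all `D` raw dimensions reduces the number of pairs from the order of `D²` to the order of `G²`. The resulting fixed-length vector could then be passed to any linear classifier.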
