A Convolutional Architecture for 3D Model Embedding


Mar 05, 2021

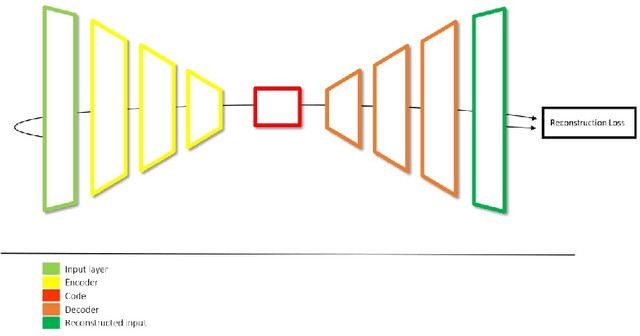

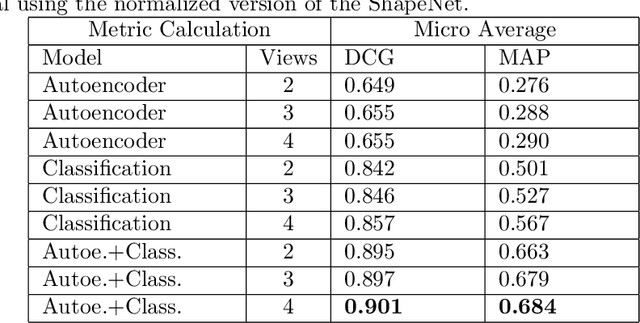

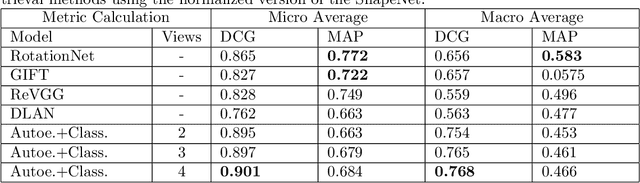

In recent years, many advances have been made in tasks such as 3D model retrieval, 3D model classification, and 3D model segmentation. The typical 3D representations, such as point clouds, voxels, and polygon meshes, are mostly suited to rendering, while their use for cognitive processes (retrieval, classification, segmentation) is limited by their high redundancy and complexity. We propose a deep learning architecture that takes 3D models as input. We combine this architecture with other standard architectures, such as Convolutional Neural Networks and autoencoders, to compute 3D model embeddings. Our goal is to represent a 3D model as a vector that carries enough information to stand in for the 3D model in high-level tasks. Since this vector is a learned representation that tries to capture the relevant information of a 3D model, we show that the embedding conveys semantic information that helps with the similarity assessment of 3D objects. Our experiments show the benefit of computing the embeddings of a 3D model data set and using them for effective 3D model retrieval.
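To make the retrieval use case concrete, here is a minimal sketch of ranking models by embedding similarity. It is an illustration under stated assumptions, not the paper's method: the abstract does not name a similarity measure, so cosine similarity is assumed, and the model names, the 4-dimensional vectors, and the helpers `cosine_similarity` and `retrieve` are all hypothetical. Real embeddings would come from the trained network.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat a zero vector as dissimilar to everything
    return dot / (norm_a * norm_b)


def retrieve(query, database, k=2):
    """Return the k database keys whose embeddings are most similar to query."""
    ranked = sorted(
        database,
        key=lambda name: cosine_similarity(query, database[name]),
        reverse=True,
    )
    return ranked[:k]


# Hypothetical embeddings (assumption: made-up values for illustration only).
embeddings = {
    "chair_01": [0.9, 0.1, 0.0, 0.2],
    "chair_02": [0.8, 0.2, 0.1, 0.1],
    "table_01": [0.1, 0.9, 0.3, 0.0],
    "lamp_01": [0.0, 0.2, 0.9, 0.4],
}

# Hypothetical embedding of a query model.
query = [0.85, 0.15, 0.05, 0.15]
top = retrieve(query, embeddings, k=2)
print(top)
```

Because each model is reduced to a fixed-length vector, retrieval becomes a nearest-neighbor search over vectors rather than a comparison of meshes or point clouds. For large collections, the linear scan here would typically be replaced by an approximate nearest-neighbor index.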
