A Cognitive Architecture Based on a Learning Classifier System with Spiking Classifiers

Paper and Code

Aug 31, 2015

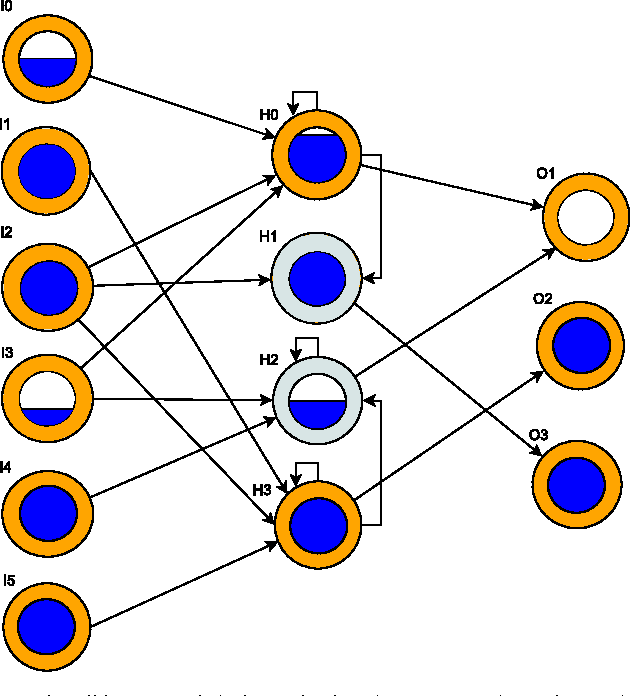

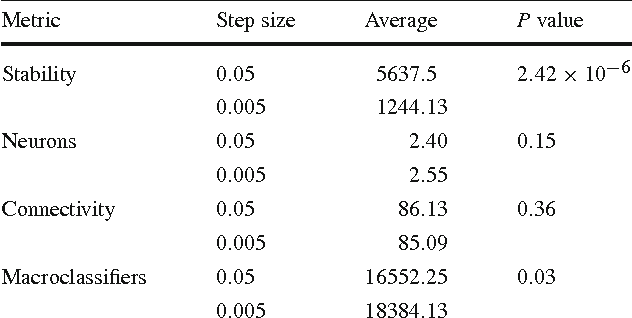

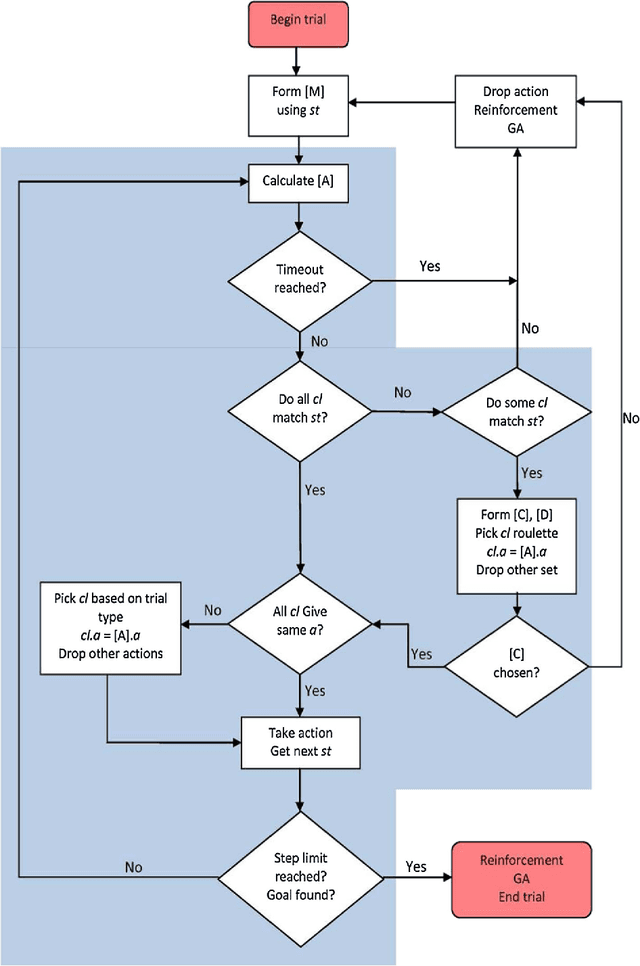

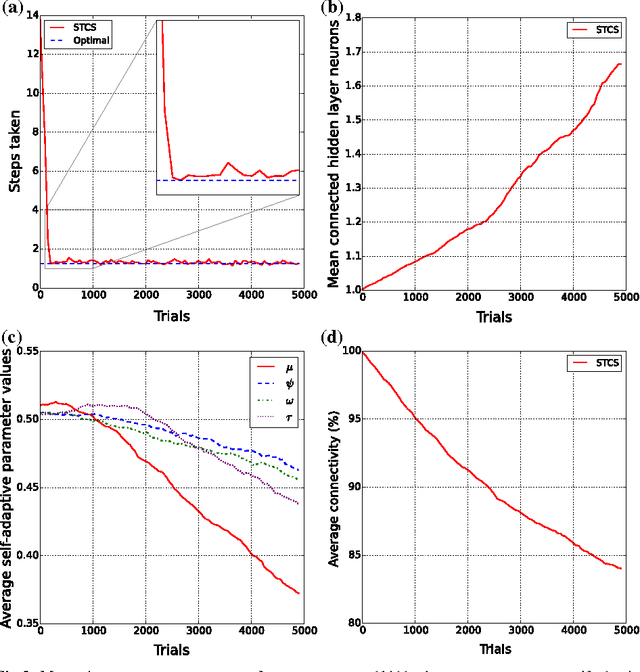

Learning Classifier Systems (LCS) are population-based reinforcement learners that were originally designed to model various cognitive phenomena. This paper presents an explicitly cognitive LCS by using spiking neural networks as classifiers, providing each classifier with a measure of temporal dynamism. We employ a constructivist model of growth of both neurons and synaptic connections, which permits a Genetic Algorithm (GA) to automatically evolve sufficiently-complex neural structures. The spiking classifiers are coupled with a temporally-sensitive reinforcement learning algorithm, which allows the system to perform temporal state decomposition by appropriately rewarding "macro-actions," created by chaining together multiple atomic actions. The combination of temporal reinforcement learning and neural information processing is shown to outperform benchmark neural classifier systems, and successfully solve a robotic navigation task.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge