A C Code Generator for Fast Inference and Simple Deployment of Convolutional Neural Networks on Resource Constrained Systems

Paper and Code

Jan 14, 2020

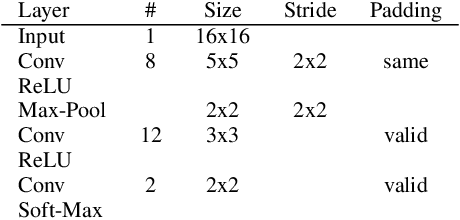

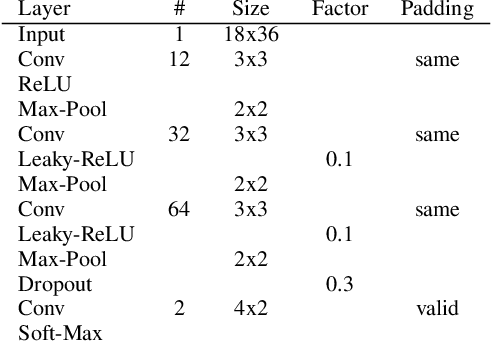

Inference of Convolutional Neural Networks in time critical applications usually requires a GPU. In robotics or embedded devices these are often not available due to energy, space and cost constraints. Furthermore, installation of a deep learning framework or even a native compiler on the target platform is not possible. This paper presents a neural network code generator (NNCG) that generates from a trained CNN a plain ANSI C code file that encapsulates the inference in single a function. It can easily be included in existing projects and due to lack of dependencies, cross compilation is usually possible. Additionally, the code generation is optimized based on the known trained CNN and target platform following four design principles. The system is evaluated utilizing small CNN designed for this application. Compared to TensorFlow XLA and Glow speed-ups of up to 11.81 can be shown and even GPUs are outperformed regarding latency.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge