A Benchmark of Medical Out of Distribution Detection

Jul 08, 2020

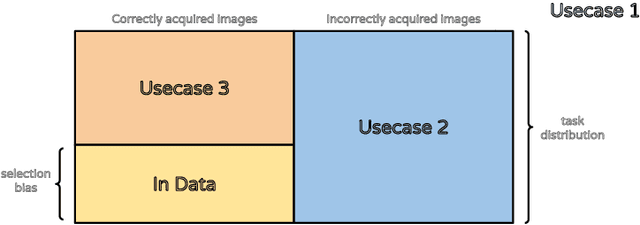

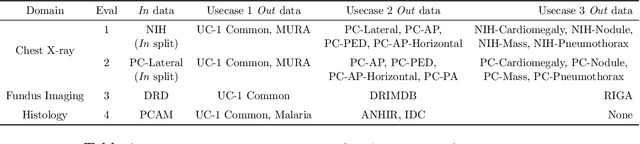

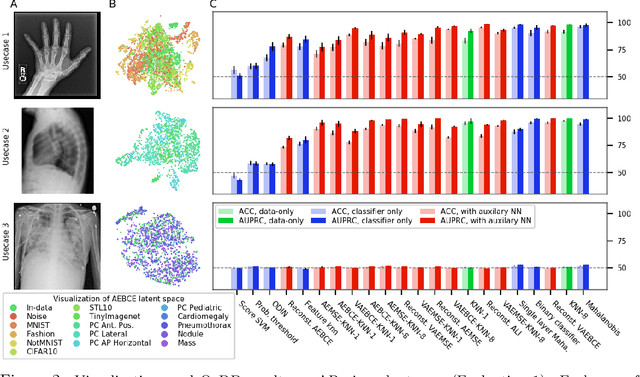

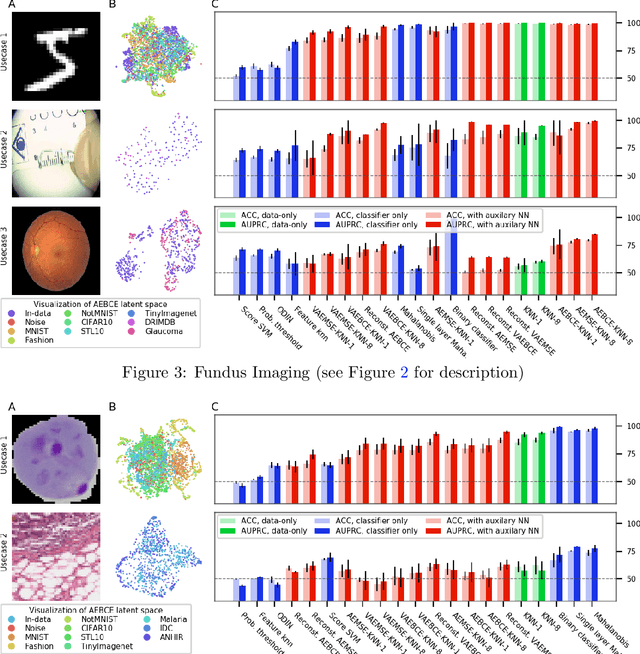

Deep learning is increasingly used for automated medical diagnosis, most notably in medical imaging. Such an automated system uses a set of images from a patient to diagnose whether they have a disease. However, a system trained on one particular domain of images cannot be expected to perform accurately on images from a different domain. Such out-of-domain images should be filtered out by an Out-of-Distribution Detection (OoDD) method prior to diagnosis. This paper benchmarks popular OoDD methods in three domains of medical imaging: chest x-rays, fundus images, and histology slides. Our experiments show that although the methods yield good results on some types of out-of-distribution samples, they fail to recognize out-of-distribution images that are close to the training distribution.
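
To make the idea of filtering images prior to diagnosis concrete, here is a minimal sketch of one widely used baseline: thresholding the maximum softmax probability of a classifier. The abstract does not name the specific methods benchmarked, so this is only an illustration. The function names, the example logits, and the threshold of 0.9 are all assumptions made for this sketch and are not taken from the paper.

```python
import math


def softmax(logits):
    """Convert raw classifier logits into probabilities (numerically stable)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def filter_before_diagnosis(logits, threshold=0.9):
    """Return the predicted class index, or None if the input is flagged as OoD.

    Hypothetical helper: an input is treated as out-of-distribution when the
    classifier's maximum softmax probability falls below `threshold`
    (an assumed value that would be tuned on validation data in practice).
    """
    probs = softmax(logits)
    confidence = max(probs)
    if confidence < threshold:
        return None  # reject: do not pass this image on to diagnosis
    return probs.index(confidence)


# Illustrative logits only (not real model outputs).
confident_prediction = filter_before_diagnosis([10.0, 0.0])
uncertain_prediction = filter_before_diagnosis([0.1, 0.0])
```

In this sketch a confident input is passed on with its predicted class, while a low-confidence input is rejected. A score of this kind depends entirely on the classifier's confidence, so an out-of-distribution image that resembles the training data can still receive a high score and pass the filter, which is the failure case the abstract highlights.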