A Bayesian Evaluation Framework for Ground Truth-Free Visual Recognition Tasks

Paper and Code

Jun 20, 2020

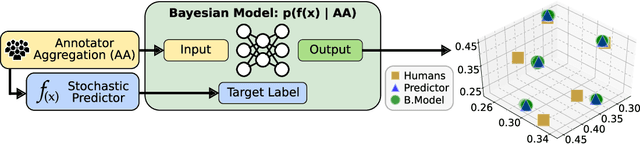

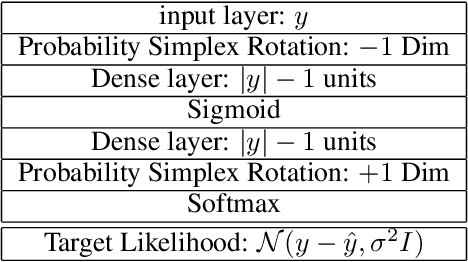

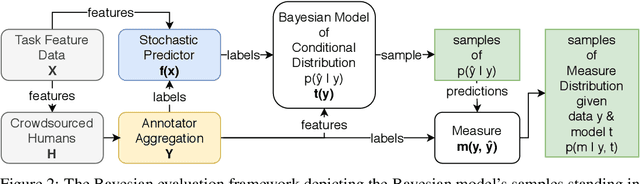

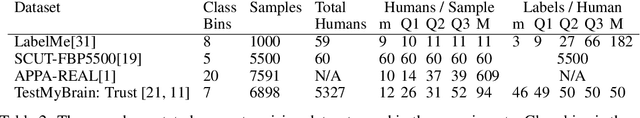

An interesting development in automatic visual recognition has been the emergence of tasks where it is not possible to assign ground truth labels to images, yet still feasible to collect annotations that reflect human judgements about them. Such tasks include subjective visual attribute assignment and the labeling of ambiguous scenes. Machine learning-based predictors for these tasks rely on supervised training that models the behavior of the annotators, e.g., what would the average person's judgement be for an image? A key open question for this type of work, especially for applications where inconsistency with human behavior can lead to ethical lapses, is how to evaluate the uncertainty of trained predictors. Given that the real answer is unknowable, we are left with often noisy judgements from human annotators to work with. In order to account for the uncertainty that is present, we propose a relative Bayesian framework for evaluating predictors trained on such data. The framework specifies how to estimate a predictor's uncertainty due to the human labels by approximating a conditional distribution and producing a credible interval for the predictions and their measures of performance. The framework is successfully applied to four image classification tasks that use subjective human judgements: facial beauty assessment using the SCUT-FBP5500 dataset, social attribute assignment using data from TestMyBrain.org, apparent age estimation using data from the ChaLearn series of challenges, and ambiguous scene labeling using the LabelMe dataset.
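The idea of producing a credible interval for a performance measure when only noisy human judgements are available can be illustrated with a small sketch. It is not the paper's procedure: it swaps in a plain Bayesian bootstrap (Dirichlet weights over each image's annotator ratings) as one simple way to approximate a distribution over the unknowable target. The toy ratings, the predictions, and the function names `bayesian_bootstrap_mean`, `mae_posterior`, and `credible_interval` are all hypothetical.

```python
import random


def bayesian_bootstrap_mean(ratings, rng):
    """Draw one plausible mean rating using Dirichlet(1, ..., 1) weights.

    Normalized Exponential(1) draws are Dirichlet(1, ..., 1) distributed,
    which is the weighting used by the Bayesian bootstrap.
    """
    weights = [rng.gammavariate(1.0, 1.0) for _ in ratings]
    total = sum(weights)
    return sum(w * r for w, r in zip(weights, ratings)) / total


def mae_posterior(annotations, predictions, draws=2000, seed=0):
    """Sample the mean absolute error of the predictions against
    resampled per-image mean ratings (assumed stand-in for the target)."""
    rng = random.Random(seed)
    samples = []
    for _ in range(draws):
        errors = [
            abs(pred - bayesian_bootstrap_mean(ratings, rng))
            for ratings, pred in zip(annotations, predictions)
        ]
        samples.append(sum(errors) / len(errors))
    return samples


def credible_interval(samples, mass=0.95):
    """Equal-tailed interval read off the sorted samples."""
    ordered = sorted(samples)
    lo_idx = int((1 - mass) / 2 * (len(ordered) - 1))
    hi_idx = int((1 + mass) / 2 * (len(ordered) - 1))
    return ordered[lo_idx], ordered[hi_idx]


# Hypothetical toy data: three images, five annotators each, 1-5 scale.
annotations = [[3, 4, 4, 5, 3], [1, 2, 2, 1, 3], [5, 5, 4, 4, 5]]
predictions = [3.8, 2.0, 4.5]  # hypothetical predictor outputs

samples = mae_posterior(annotations, predictions)
low, high = credible_interval(samples)
print(f"95% credible interval for MAE: [{low:.3f}, {high:.3f}]")
```

In this sketch, a wide interval indicates that the annotators disagree enough for the measured error to depend heavily on whose judgements are trusted, which is the kind of label-induced uncertainty the abstract refers to.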
