3D-A-Nets: 3D Deep Dense Descriptor for Volumetric Shapes with Adversarial Networks


Nov 28, 2017

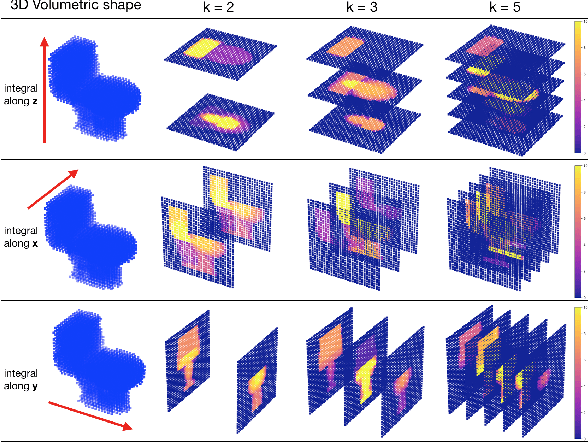

Researchers have recently been shifting their focus from hand-crafted 3D shape descriptors to learned ones, to better address the deformation and structural variation inherently present in 3D objects. 3D geometric data are often transformed into regular 3D voxel grids so that they can be fed to a deep neural network architecture. However, the computational intractability of applying 3D convolutional networks directly to 3D volumetric data severely limits both efficiency (i.e. slow processing) and effectiveness (i.e. unsatisfactory accuracy) in processing 3D geometric data. In this paper, powered by a novel design of adversarial networks (3D-A-Nets), we develop a novel 3D deep dense shape descriptor (3D-DDSD) for efficient and effective processing of 3D volumetric data. We introduce a new definition of a 2D multilayer dense representation (MDR) of 3D volumetric data to extract a concise but geometrically informative shape description, and a novel design of adversarial networks that jointly trains a convolutional neural network (CNN), a recurrent neural network (RNN), and an adversarial discriminator. More specifically, the generator network produces 3D shape features that encourage the clustering of samples from the same category under the correct class label, whereas the discriminator network discourages this clustering by assigning the samples misleading adversarial class labels. By addressing the computational inefficiency of applying CNNs directly to 3D volumetric data, 3D-A-Nets can learn a high-quality 3D-DDSD that demonstrates superior performance on 3D shape classification and retrieval, outperforming other state-of-the-art techniques by a large margin.
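
To make the voxel-grid step mentioned in the abstract concrete, here is a minimal sketch in plain Python of turning a set of 3D points into a binary occupancy grid and then viewing that grid as a stack of 2D layers. The function names `voxelize` and `layers_along_z`, the `resolution` parameter, and the choice of slicing along the z axis are illustrative assumptions. The layer view is only a rough intuition for a 2D multilayer representation and is not the paper's MDR definition, which the abstract does not spell out.

```python
def voxelize(points, resolution=4):
    """Map 3D points onto a resolution^3 binary occupancy grid (hypothetical helper)."""
    # Bounding box of the point set, per axis.
    mins = [min(p[a] for p in points) for a in range(3)]
    maxs = [max(p[a] for p in points) for a in range(3)]
    # Use a single scale for all axes so the shape is not distorted.
    extent = max(maxs[a] - mins[a] for a in range(3)) or 1.0
    grid = [[[0] * resolution for _ in range(resolution)]
            for _ in range(resolution)]
    for p in points:
        idx = []
        for a in range(3):
            # Normalise the coordinate to [0, 1], scale to a voxel index,
            # and clamp points that sit exactly on the upper boundary.
            i = int((p[a] - mins[a]) / extent * resolution)
            idx.append(min(i, resolution - 1))
        grid[idx[0]][idx[1]][idx[2]] = 1
    return grid


def layers_along_z(grid):
    """View the grid as a stack of 2D occupancy layers, one per z index.

    Assumption for illustration only: the result is indexed as layers[z][x][y].
    """
    res = len(grid)
    return [[[grid[x][y][z] for y in range(res)] for x in range(res)]
            for z in range(res)]


points = [(0.0, 0.0, 0.0), (1.0, 1.0, 1.0), (0.5, 0.5, 0.5)]
grid = voxelize(points, resolution=2)
layers = layers_along_z(grid)
```

A stack of 2D layers like this can be processed with 2D convolutions, which are cheaper than 3D convolutions over the full grid. That cost difference is the kind of inefficiency the abstract refers to.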
