Zitong You

A general framework for adaptive two-index fusion attribute weighted naive Bayes

Feb 24, 2022

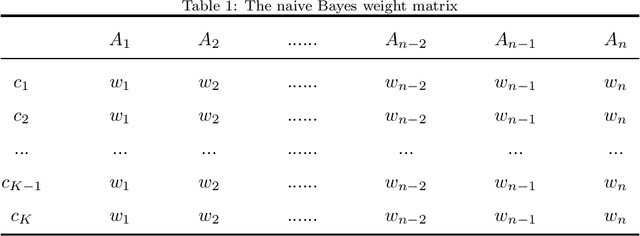

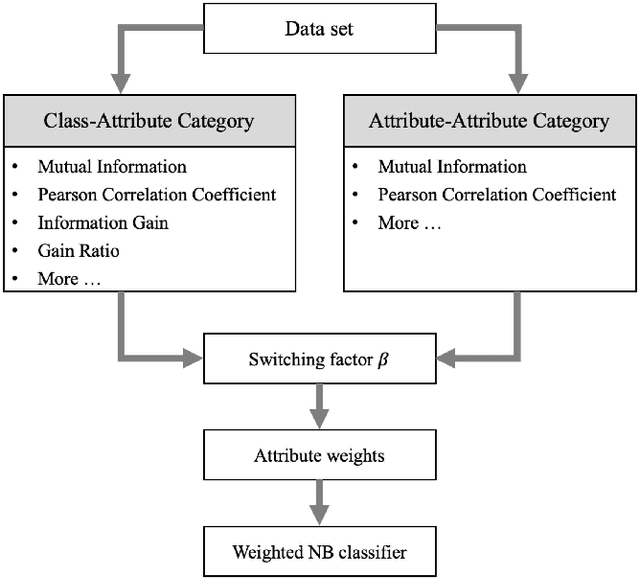

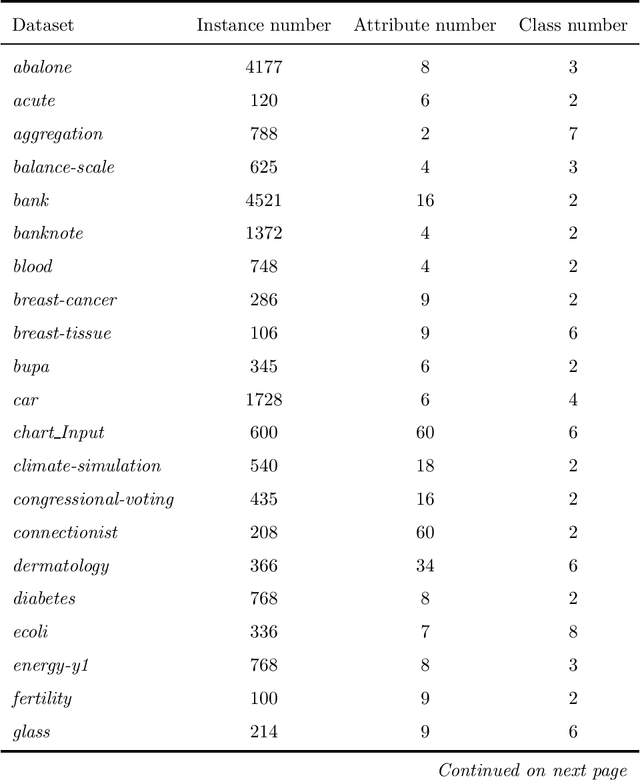

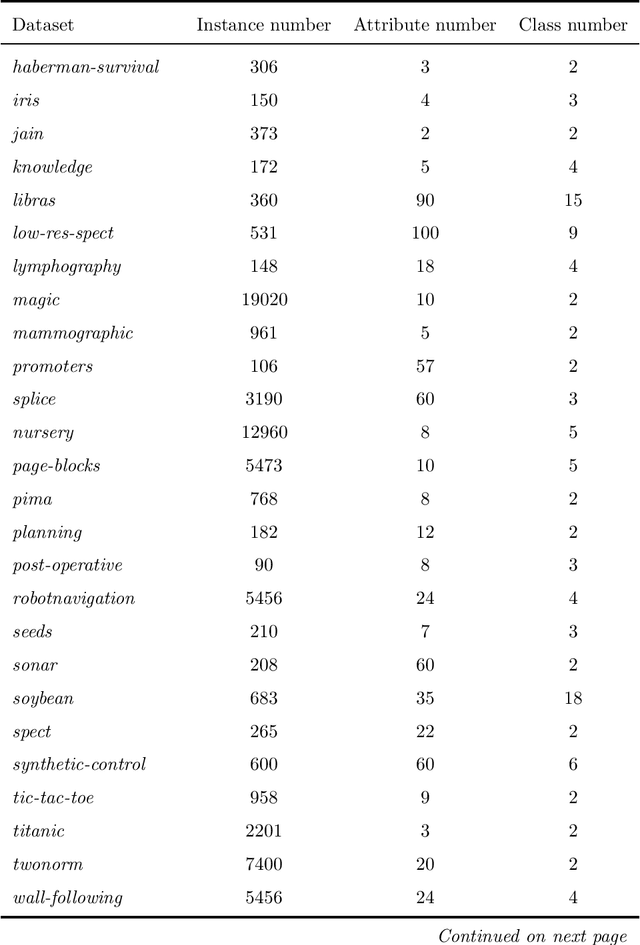

Abstract: Naive Bayes (NB) is one of the essential algorithms in data mining. However, it is rarely used in practice because of its attribute independence assumption. Researchers have proposed many improved NB methods to alleviate this assumption. Among these methods, filter attribute weighted NB methods have received great attention because of their high efficiency and easy implementation. However, several challenges remain, such as the poor representation ability of a single index and the problem of fusing two indexes. To overcome these challenges, we propose a general framework for Adaptive Two-index Fusion attribute weighted NB (ATFNB). Two categories of data description are used to represent the correlation between classes and attributes and the intercorrelation between attributes, respectively. ATFNB can select any one index from each category. Then, we introduce a switching factor $\beta$ to fuse the two indexes, which adaptively adjusts the ratio of the two indexes to its optimal value on each dataset. A quick algorithm is proposed to infer the optimal interval of the switching factor $\beta$. Finally, the weight of each attribute is calculated using the optimal value of $\beta$ and is integrated into the NB classifier to improve its accuracy. The experimental results on 50 benchmark datasets and the Flavia dataset show that ATFNB outperforms the basic NB and state-of-the-art filter weighted NB models. In addition, the ATFNB framework can improve an existing two-index NB model by introducing the adaptive switching factor $\beta$. Auxiliary experimental results demonstrate that the improved model significantly increases accuracy compared to the original model without the adaptive switching factor $\beta$.
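The pipeline described in the abstract can be illustrated with a minimal Python sketch. The abstract does not give the fusion formula, the index definitions, or the quick interval-inference algorithm, so everything specific here is an assumption made for illustration: mutual information stands in for both indexes, the fusion rule `beta * relevance + (1 - beta) * (1 - redundancy)` is hypothetical, and a plain grid search on training accuracy replaces the paper's algorithm for finding β. The function names (`fused_weights`, `train`, `predict`, `pick_beta`) are likewise invented for this example. The classifier itself uses the standard attribute weighted NB form, where each attribute's log-likelihood is multiplied by its weight.

```python
import math
from collections import Counter


def mutual_information(xs, ys):
    """Empirical mutual information (in nats) between two discrete sequences."""
    n = len(xs)
    px, py, pxy = Counter(xs), Counter(ys), Counter(zip(xs, ys))
    return sum((c / n) * math.log(c * n / (px[a] * py[b]))
               for (a, b), c in pxy.items())


def normalize(values):
    """Scale values to sum to 1; fall back to uniform if they carry no signal."""
    total = sum(values)
    if total > 0:
        return [v / total for v in values]
    return [1.0 / len(values)] * len(values)


def fused_weights(X, y, beta):
    """Hypothetical fusion rule (an assumption, not the paper's formula):
    w_i = beta * relevance_i + (1 - beta) * (1 - redundancy_i)."""
    cols = list(zip(*X))
    m = len(cols)
    # Index 1: class-attribute correlation (assumed: mutual information).
    relevance = normalize([mutual_information(col, y) for col in cols])
    # Index 2: attribute-attribute intercorrelation (assumed: mean pairwise MI).
    redundancy = normalize([
        sum(mutual_information(cols[i], cols[j]) for j in range(m) if j != i)
        / max(m - 1, 1)
        for i in range(m)
    ])
    return [beta * r + (1 - beta) * (1 - d)
            for r, d in zip(relevance, redundancy)]


def train(X, y, weights):
    """Collect the counts needed for a Laplace-smoothed weighted NB."""
    classes = Counter(y)
    n_values = [len(set(col)) for col in zip(*X)]
    counts = Counter((i, v, c) for row, c in zip(X, y)
                     for i, v in enumerate(row))
    return classes, n_values, counts, weights


def predict(model, row):
    """Return argmax_c of log P(c) + sum_i w_i * log P(a_i | c)."""
    classes, n_values, counts, weights = model
    n = sum(classes.values())

    def score(c):
        s = math.log(classes[c] / n)
        for i, v in enumerate(row):
            p = (counts[(i, v, c)] + 1) / (classes[c] + n_values[i])
            s += weights[i] * math.log(p)
        return s

    return max(classes, key=score)


def pick_beta(X, y, grid=(0.0, 0.25, 0.5, 0.75, 1.0)):
    """Grid search on training accuracy; a stand-in for the paper's
    quick algorithm, whose details the abstract does not give."""
    def accuracy(beta):
        model = train(X, y, fused_weights(X, y, beta))
        return sum(predict(model, r) == c for r, c in zip(X, y)) / len(y)

    return max(grid, key=accuracy)
```

With `beta = 1` the weights in this sketch depend only on the class-attribute index, and with `beta = 0` only on the attribute-attribute index, which is the sense in which β switches between the two. In practice β would be chosen on held-out data rather than training accuracy.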
