Zikai Xie

Merge Kernel for Bayesian Optimization on Permutation Space

Jul 18, 2025

Abstract: The Bayesian optimization (BO) algorithm is a standard tool for black-box optimization problems. The current state-of-the-art BO approach for permutation spaces relies on the Mallows kernel, an $\Omega(n^2)$ representation that explicitly enumerates every pairwise comparison. Inspired by the close relationship between the Mallows kernel and pairwise comparison, we propose a novel framework for generating kernel functions on permutation space based on sorting algorithms. Within this framework, the Mallows kernel can be viewed as a special instance derived from bubble sort. Further, we introduce the Merge Kernel, constructed from merge sort, which replaces the quadratic complexity with $\Theta(n\log n)$ to achieve the lowest possible complexity. The resulting feature vector is significantly shorter and can be computed in linearithmic time, yet still efficiently captures meaningful permutation distances. To boost robustness and right-invariance without sacrificing compactness, we further incorporate three lightweight, task-agnostic descriptors: (1) a shift histogram, which aggregates absolute element displacements and supplies a global misplacement signal; (2) a split-pair line, which encodes selected long-range comparisons by aligning elements across the two halves of the whole permutation; and (3) sliding-window motifs, which summarize local order patterns that influence near-neighbor objectives. Our empirical evaluation demonstrates that the proposed kernel consistently outperforms the state-of-the-art Mallows kernel across various permutation optimization benchmarks. Results confirm that the Merge Kernel provides a more compact yet more effective solution for Bayesian optimization in permutation space.
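To make the quadratic baseline concrete, the following is a minimal Python sketch of the Mallows kernel in its commonly used form, $k(\pi, \sigma) = \exp(-\lambda \, d(\pi, \sigma))$, where $d$ counts the item pairs that the two permutations order differently. The function names and the `lam` parameter are illustrative assumptions, and some formulations normalize the distance by the number of pairs. It does not implement the Merge Kernel, whose construction is not detailed in the abstract.

```python
import math
from itertools import combinations


def pairwise_features(perm):
    """Return one +1/-1 entry per unordered item pair: n*(n-1)/2 features."""
    pos = {item: i for i, item in enumerate(perm)}
    items = sorted(perm)
    # +1 if the smaller-labelled item appears first in perm, else -1.
    return [1 if pos[a] < pos[b] else -1 for a, b in combinations(items, 2)]


def kendall_tau_distance(p, q):
    """Count the item pairs that p and q order differently."""
    return sum(1 for x, y in zip(pairwise_features(p), pairwise_features(q)) if x != y)


def mallows_kernel(p, q, lam=1.0):
    """Assumed form exp(-lam * d); lam is a hypothetical bandwidth name."""
    return math.exp(-lam * kendall_tau_distance(p, q))
```

The feature list grows as $n(n-1)/2$, which is the quadratic representation the abstract refers to; a sorting-based kernel with $\Theta(n\log n)$ comparisons would use a correspondingly shorter vector.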

Order Matters in Hallucination: Reasoning Order as Benchmark and Reflexive Prompting for Large-Language-Models

Aug 09, 2024

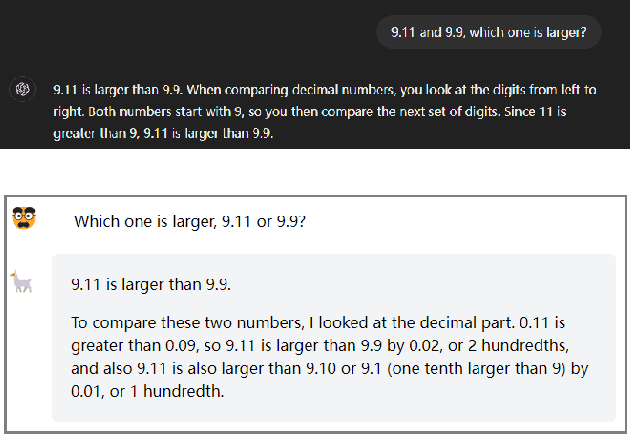

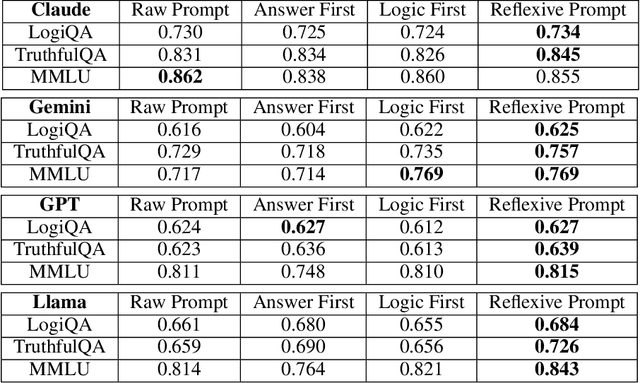

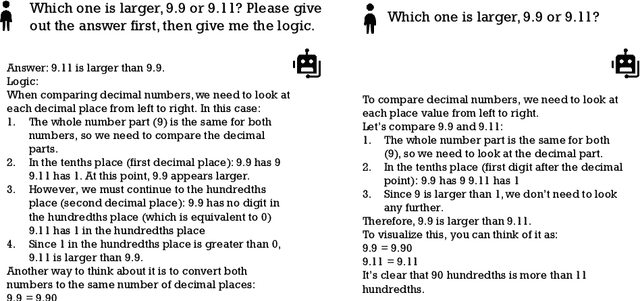

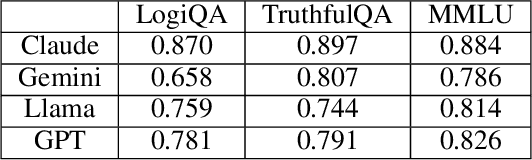

Abstract: Large language models (LLMs) have attracted significant attention since their inception, finding applications across various academic and industrial domains. However, these models often suffer from the "hallucination problem", where outputs, though grammatically and logically coherent, lack factual accuracy or are entirely fabricated. A particularly troubling issue discovered and widely discussed recently is the numerical comparison error, where multiple LLMs incorrectly infer that "9.11 > 9.9". We discovered that the order in which LLMs generate answers and reasoning impacts their consistency. Specifically, results vary significantly when an LLM generates an answer first and then provides the reasoning versus generating the reasoning process first and then the conclusion. Inspired by this, we propose a new benchmark method for assessing LLM consistency: comparing responses generated through these two different approaches. This benchmark effectively identifies instances where LLMs fabricate answers and subsequently generate justifications. Furthermore, we introduce a novel and straightforward prompt strategy designed to mitigate this issue. Experimental results demonstrate that this strategy improves performance across various LLMs compared to direct questioning. This work not only sheds light on a critical flaw in LLMs but also offers a practical solution to enhance their reliability.
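The consistency check described above can be sketched roughly as follows. Everything here is an illustrative assumption rather than the paper's protocol: the prompt wording, the `Answer:` line convention, and the `ask` callable, which stands in for whatever function sends a prompt to a model and returns its text.

```python
from typing import Callable, Optional

FORMAT_NOTE = "Put the final answer on its own line, prefixed with 'Answer:'."


def answer_first_prompt(question: str) -> str:
    """Hypothetical prompt asking for the answer before the reasoning."""
    return f"{question}\nGive the final answer first, then explain your reasoning. {FORMAT_NOTE}"


def reasoning_first_prompt(question: str) -> str:
    """Hypothetical prompt asking for the reasoning before the answer."""
    return f"{question}\nExplain your reasoning first, then give the final answer. {FORMAT_NOTE}"


def extract_answer(response: str) -> Optional[str]:
    """Return the text after the first 'Answer:' line, or None if absent."""
    for line in response.splitlines():
        if line.strip().lower().startswith("answer:"):
            return line.split(":", 1)[1].strip().lower()
    return None


def is_consistent(question: str, ask: Callable[[str], str]) -> bool:
    """True when both generation orders yield the same extracted answer."""
    first = extract_answer(ask(answer_first_prompt(question)))
    second = extract_answer(ask(reasoning_first_prompt(question)))
    return first is not None and first == second
```

A question for which `is_consistent` returns `False` is a candidate case where the model may have committed to an answer and then justified it afterwards.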

Domain Knowledge Injection in Bayesian Search for New Materials

Nov 26, 2023

Abstract: In this paper we propose DKIBO, a Bayesian optimization (BO) algorithm that accommodates domain knowledge to tune exploration in the search space. Bayesian optimization has recently emerged as a sample-efficient optimizer for many intractable scientific problems. While various existing BO frameworks allow the input of prior beliefs to accelerate the search by narrowing down the space, incorporating such knowledge is not always straightforward and can often introduce bias and lead to poor performance. Here we propose a simple approach to incorporate structural knowledge in the acquisition function by utilizing an additional deterministic surrogate model to enrich the approximation power of the Gaussian process. This model is suitably chosen according to structural information of the problem at hand and acts as a corrective term towards better-informed sampling. We empirically demonstrate the practical utility of the proposed method by successfully injecting domain knowledge in a materials design task. We further validate our method's performance in different experimental settings and through ablation analyses.

* 8 pages, 5 figures, published in ECAI23
