Zhuyun Qi

DGEM: A New Dual-modal Graph Embedding Method in Recommendation System

Aug 09, 2021

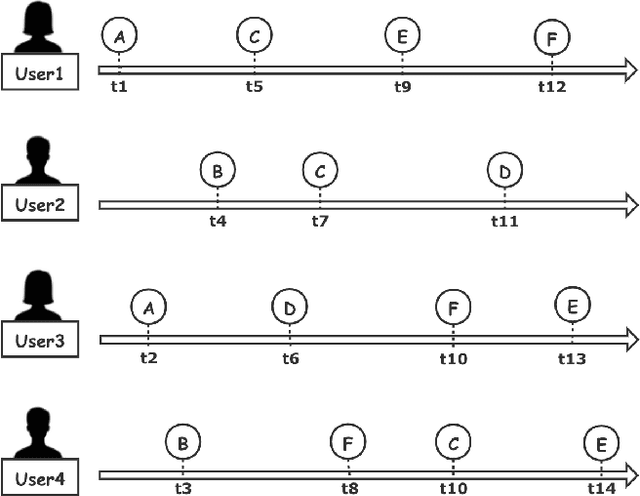

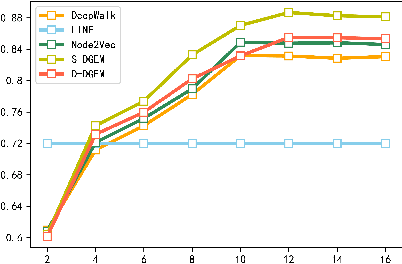

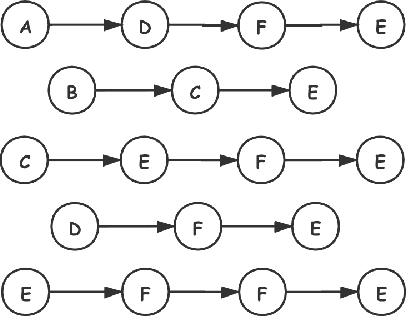

Abstract: In current deep-learning-based recommendation systems, an embedding method is generally used to convert high-dimensional sparse feature vectors into low-dimensional dense feature vectors. However, because the input dimension of the embedding layer is very large, adding the embedding layer significantly slows the convergence of the entire neural network, which is unacceptable in real-world scenarios. In addition, as user-item interactions increase and the relationships between items become more complex, embedding methods designed for sequence data are no longer suitable for the graph-structured data found in today's real-world environments. Therefore, in this paper we propose the Dual-modal Graph Embedding Method (DGEM) to address these problems. DGEM has two modes, static and dynamic. We first construct an item graph to extract the graph structure and use unequal-probability random walks to capture high-order proximity between items. We then generate graph embedding vectors with the Skip-Gram model and finally feed them into a downstream deep neural network for the recommendation task. The experimental results show that DGEM can mine high-order proximity between items and enhance the expressive ability of the recommendation model. Meanwhile, it also improves recommendation performance by exploiting the time-dependent relationships between items.
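The abstract does not say how the item graph's edges are weighted or how the walk probabilities are defined. The following is a minimal sketch of a common item-graph embedding pipeline, not the authors' DGEM implementation. It assumes that edge weights count consecutive co-occurrences of items in user sessions and that each random-walk step is drawn with probability proportional to the outgoing edge weight (the "unequal probability"). It then produces the (center, context) pairs a Skip-Gram model is trained on. All function names and parameters (`build_item_graph`, `weighted_walk`, `generate_walks`, `skipgram_pairs`, `walks_per_node`, `length`, `window`) are hypothetical. DGEM's dynamic mode and its time-dependent weighting are not modeled here.

```python
import random
from collections import defaultdict


def build_item_graph(sessions):
    """Directed weighted item graph (assumed construction): each consecutive
    pair (a, b) in a user session adds 1 to the weight of edge a -> b."""
    graph = defaultdict(lambda: defaultdict(int))
    for session in sessions:
        for src, dst in zip(session, session[1:]):
            if src != dst:  # ignore repeated clicks on the same item
                graph[src][dst] += 1
    return graph


def weighted_walk(graph, start, length, rng):
    """Unequal-probability random walk: the next item is sampled with
    probability proportional to the outgoing edge weight."""
    walk = [start]
    while len(walk) < length:
        neighbors = graph.get(walk[-1])  # .get does not create new entries
        if not neighbors:  # dead end (no outgoing edges): stop early
            break
        items = list(neighbors)
        weights = [neighbors[i] for i in items]
        walk.append(rng.choices(items, weights=weights, k=1)[0])
    return walk


def generate_walks(graph, walks_per_node, length, seed=0):
    """Start `walks_per_node` walks from every item that has outgoing edges."""
    rng = random.Random(seed)
    nodes = sorted(graph)
    walks = []
    for _ in range(walks_per_node):
        rng.shuffle(nodes)
        for node in nodes:
            walks.append(weighted_walk(graph, node, length, rng))
    return walks


def skipgram_pairs(walk, window):
    """(center, context) training pairs within a fixed window, as in Skip-Gram."""
    pairs = []
    for i, center in enumerate(walk):
        lo, hi = max(0, i - window), min(len(walk), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((center, walk[j]))
    return pairs


# Toy usage with made-up sessions (illustrative data only).
sessions = [["A", "B", "C"], ["B", "C", "D"], ["A", "C", "D"]]
graph = build_item_graph(sessions)
walks = generate_walks(graph, walks_per_node=2, length=5)
pairs = [p for w in walks for p in skipgram_pairs(w, window=2)]
```

Weighting transitions by co-occurrence count makes frequently co-visited items appear together more often in the walks, so items that are several hops apart still land in the same Skip-Gram window. This is one way to expose the high-order proximity the abstract describes. Instead of generating pairs by hand, the walks could also be passed directly as "sentences" to an off-the-shelf Skip-Gram trainer, for example gensim's `Word2Vec(walks, vector_size=..., window=..., sg=1, min_count=1)`.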
