Zhongliu Xie

Towards Single-phase Single-stage Detection of Pulmonary Nodules in Chest CT Imaging

Jul 16, 2018

Abstract: Detection of pulmonary nodules in chest CT imaging plays a crucial role in the early diagnosis of lung cancer. Manual examination is highly time-consuming and error-prone, calling for computer-aided detection, both to improve efficiency and to reduce misdiagnosis. Over the years, a range of systems has been proposed, mostly following a two-phase paradigm: 1) candidate detection, 2) false positive reduction. Recently, deep learning has become a dominant force in algorithm development. For candidate detection, prior art was mainly based on the two-stage Faster R-CNN framework, which starts with an initial sub-net that generates a set of class-agnostic region proposals, followed by a second sub-net that performs classification and bounding-box regression. In contrast, we abandon the conventional two-phase paradigm and the two-stage framework altogether and propose to train a single network for end-to-end nodule detection, without transfer learning or further post-processing. Our feature learning model is a modification of ResNet combined with a feature pyramid network, powered by RReLU activation. The major challenge is extreme inter-class and intra-class sample imbalance: the positives are overwhelmed by a large negative pool, which is composed mostly of easy negatives and a handful of hard ones. Direct training on all samples can seriously undermine training efficacy. We propose a patch-based sampling strategy over a set of regularly updated anchors, which narrows the sampling scope to all positives and only hard negatives, effectively addressing this issue. As a result, our approach substantially outperforms prior art in terms of both accuracy and speed. Finally, the prevailing FROC evaluation over [1/8, 1/4, 1/2, 1, 2, 4, 8] false positives per scan is far from ideal in real clinical environments. We suggest FROC over [1, 2, 4] false positives per scan as a better metric.
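To make the two FROC operating ranges mentioned in the abstract concrete, the following is a minimal sketch of how an average sensitivity over a set of false-positives-per-scan levels might be computed. It is not the authors' evaluation code: the function name `froc_average_sensitivity`, the input layout (a pooled list of `(score, is_true_positive)` pairs), and the toy data are all assumptions made for illustration, and the sketch assumes each true-positive detection matches a distinct nodule and that scores are not tied.

```python
def froc_average_sensitivity(detections, n_scans, n_nodules, fp_rates):
    """Average sensitivity at the given false-positives-per-scan levels.

    detections: list of (score, is_true_positive) pooled over all scans
                (hypothetical layout; each true positive is assumed to hit
                a distinct nodule).
    Returns (mean sensitivity, list of per-level sensitivities).
    """
    # Sweep the confidence threshold from high to low.
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    curve = [(0.0, 0.0)]  # points of (false positives per scan, sensitivity)
    for _, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        curve.append((fp / n_scans, tp / n_nodules))

    # At each level, take the best sensitivity that stays within the FP budget.
    per_level = [max(s for f, s in curve if f <= rate) for rate in fp_rates]
    return sum(per_level) / len(per_level), per_level


# Toy data: 2 scans, 4 annotated nodules, 6 pooled detections.
toy = [(0.9, True), (0.8, False), (0.7, True),
       (0.6, False), (0.5, False), (0.4, True)]

prevailing, _ = froc_average_sensitivity(
    toy, n_scans=2, n_nodules=4, fp_rates=[1/8, 1/4, 1/2, 1, 2, 4, 8])
suggested, _ = froc_average_sensitivity(
    toy, n_scans=2, n_nodules=4, fp_rates=[1, 2, 4])
```

On the same detections the two ranges can yield different summary scores, because the prevailing range also averages in operating points with very few false positives per scan.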

Near Real-time Hippocampus Segmentation Using Patch-based Canonical Neural Network

Jul 15, 2018

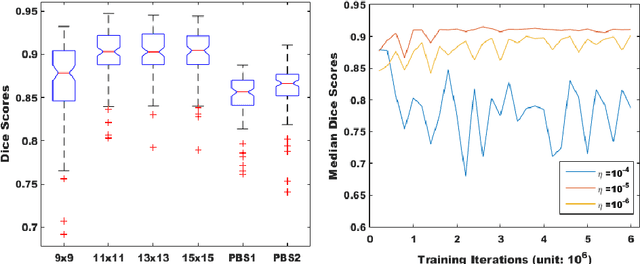

Abstract: Over the past decades, state-of-the-art medical image segmentation has rested heavily on signal processing paradigms, most notably registration-based label propagation and pair-wise patch comparison, which are generally slow despite their high segmentation accuracy. In recent years, deep learning has revolutionized computer vision, with many approaches outperforming prior art, in particular convolutional neural network (CNN) studies on image classification. Deep CNNs have also recently been applied to medical image segmentation, but they generally involve long training times and demanding memory requirements, and have achieved limited success. We propose a patch-based deep learning framework that revisits the classic neural network model with substantial modernization, including the use of Rectified Linear Unit (ReLU) activation, dropout layers, and 2.5D tri-planar patch multi-pathway settings. In a test application to hippocampus segmentation using 100 brain MR images from the ADNI database, our approach significantly outperformed prior art in terms of both segmentation accuracy and speed, achieving a median Dice score of up to 90.98% with near real-time performance (<1s).
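As an illustration of two terms used in the abstract, the sketch below shows one common reading of a 2.5D tri-planar patch (three orthogonal 2D patches centered on the same voxel) and the standard Dice overlap score. It is a hedged example rather than the authors' implementation: the function names `triplanar_patches` and `dice`, the `volume[z][y][x]` nested-list layout, and the patch size are assumptions, and the abstract does not specify how the patches are fed to the multi-pathway network.

```python
def triplanar_patches(volume, center, half):
    """Return (axial, coronal, sagittal) square patches around a voxel.

    volume: nested lists indexed as volume[z][y][x] (assumed layout).
    center: (z, y, x) voxel index; half: patch half-width, so each
            patch has side 2 * half + 1.
    """
    z, y, x = center
    dims = (len(volume), len(volume[0]), len(volume[0][0]))
    # Reject centers too close to the border (negative indices would wrap).
    for c, d in zip(center, dims):
        if c - half < 0 or c + half >= d:
            raise ValueError("patch extends outside the volume")
    r = range(-half, half + 1)
    axial = [[volume[z][y + dy][x + dx] for dx in r] for dy in r]
    coronal = [[volume[z + dz][y][x + dx] for dx in r] for dz in r]
    sagittal = [[volume[z + dz][y + dy][x] for dy in r] for dz in r]
    return axial, coronal, sagittal


def dice(pred_voxels, truth_voxels):
    """Dice score 2|A ∩ B| / (|A| + |B|) for two collections of voxel ids."""
    a, b = set(pred_voxels), set(truth_voxels)
    if not a and not b:
        return 1.0  # convention: two empty segmentations agree perfectly
    return 2 * len(a & b) / (len(a) + len(b))


# Toy 5x5x5 volume whose value encodes its own (z, y, x) index.
vol = [[[100 * z + 10 * y + x for x in range(5)]
        for y in range(5)] for z in range(5)]
axial, coronal, sagittal = triplanar_patches(vol, center=(2, 2, 2), half=1)
overlap = dice({1, 2, 3}, {2, 3, 4})
```

Three 2D patches carry some context from all three anatomical planes while using far fewer voxels than a full 3D cube of the same side, which is the usual motivation for 2.5D inputs.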
