Zhixiang Shen

Cooperation of Experts: Fusing Heterogeneous Information with Large Margin

May 28, 2025

Abstract: Fusing heterogeneous information remains a persistent challenge in modern data analysis. While significant progress has been made, existing approaches often fail to account for the inherent heterogeneity of object patterns across different semantic spaces. To address this limitation, we propose the Cooperation of Experts (CoE) framework, which encodes multi-typed information into unified heterogeneous multiplex networks. By overcoming modality and connection differences, CoE provides a powerful and flexible model for capturing the intricate structures of real-world complex data. In our framework, dedicated encoders act as domain-specific experts, each specializing in learning distinct relational patterns in specific semantic spaces. To enhance robustness and extract complementary knowledge, these experts collaborate through a novel large margin mechanism supported by a tailored optimization strategy. Rigorous theoretical analyses guarantee the framework's feasibility and stability, while extensive experiments across diverse benchmarks demonstrate its superior performance and broad applicability. Our code is available at https://github.com/strangeAlan/CoE.

Multi-Domain Graph Foundation Models: Robust Knowledge Transfer via Topology Alignment

Feb 04, 2025

Abstract: Recent advances in CV and NLP have inspired researchers to develop general-purpose graph foundation models through pre-training across diverse domains. However, a fundamental challenge arises from the substantial differences in graph topologies across domains. Additionally, real-world graphs are often sparse and prone to noisy connections and adversarial attacks. To address these issues, we propose the Multi-Domain Graph Foundation Model (MDGFM), a unified framework that aligns and leverages cross-domain topological information to facilitate robust knowledge transfer. MDGFM bridges different domains by adaptively balancing features and topology while refining original graphs to eliminate noise and align topological structures. To further enhance knowledge transfer, we introduce an efficient prompt-tuning approach. By aligning topologies, MDGFM not only improves multi-domain pre-training but also enables robust knowledge transfer to unseen domains. Theoretical analyses provide guarantees of MDGFM's effectiveness and domain generalization capabilities. Extensive experiments on both homophilic and heterophilic graph datasets validate the robustness and efficacy of our method.

Beyond Redundancy: Information-aware Unsupervised Multiplex Graph Structure Learning

Sep 25, 2024

Abstract: Unsupervised Multiplex Graph Learning (UMGL) aims to learn node representations on various edge types without manual labeling. However, existing research overlooks a key factor: the reliability of the graph structure. Real-world data are often complex and contain abundant task-irrelevant noise, severely compromising UMGL's performance. Moreover, existing methods primarily rely on contrastive learning to maximize mutual information across different graphs, limiting them to redundant multiplex graph scenarios and failing to capture view-unique task-relevant information. In this paper, we focus on a more realistic and challenging task: learning, without supervision, a fused graph from multiple graphs that preserves sufficient task-relevant information while removing task-irrelevant noise. Specifically, our proposed Information-aware Unsupervised Multiplex Graph Fusion framework (InfoMGF) uses graph structure refinement to eliminate irrelevant noise and simultaneously maximizes view-shared and view-unique task-relevant information, thereby tackling the frontier of non-redundant multiplex graphs. Theoretical analyses further guarantee the effectiveness of InfoMGF. Comprehensive experiments against various baselines on different downstream tasks demonstrate its superior performance and robustness. Surprisingly, our unsupervised method even outperforms sophisticated supervised approaches. The source code and datasets are available at https://github.com/zxlearningdeep/InfoMGF.

When Heterophily Meets Heterogeneous Graphs: Latent Graphs Guided Unsupervised Representation Learning

Sep 01, 2024

Abstract: Unsupervised heterogeneous graph representation learning (UHGRL) has gained increasing attention due to its significance in handling practical graphs without labels. However, heterophily has been largely ignored, despite its ubiquitous presence in real-world heterogeneous graphs. In this paper, we define semantic heterophily and propose an innovative framework called Latent Graphs Guided Unsupervised Representation Learning (LatGRL) to handle this problem. First, we develop a similarity mining method that couples global structures and attributes, enabling the construction of fine-grained homophilic and heterophilic latent graphs to guide the representation learning. Moreover, we propose an adaptive dual-frequency semantic fusion mechanism to address the problem of node-level semantic heterophily. To cope with the massive scale of real-world data, we further design a scalable implementation. Extensive experiments on benchmark datasets validate the effectiveness and efficiency of our proposed framework. The source code and datasets have been made available at https://github.com/zxlearningdeep/LatGRL.

Balanced Multi-Relational Graph Clustering

Jul 23, 2024

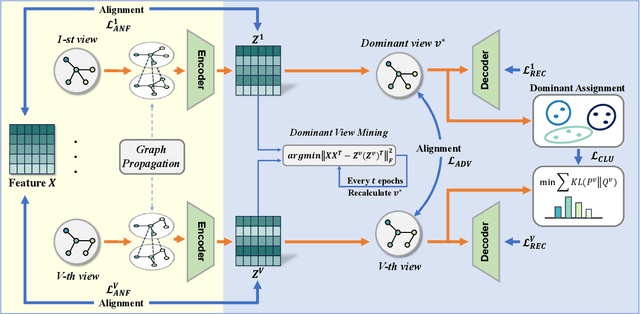

Abstract: Multi-relational graph clustering has demonstrated remarkable success in uncovering underlying patterns in complex networks. Representative methods, motivated by advances in contrastive learning, align different views. Our empirical study finds a pervasive imbalance across views in real-world graphs, which in principle contradicts the motivation for alignment. In this paper, we first propose a novel metric, the Aggregation Class Distance, to empirically quantify structural disparities among different graphs. To address the challenge of view imbalance, we propose Balanced Multi-Relational Graph Clustering (BMGC), comprising unsupervised dominant view mining and dual signals guided representation learning. It dynamically mines the dominant view throughout the training process, synergistically improving clustering performance with representation learning. Theoretical analysis ensures the effectiveness of dominant view mining. Extensive experiments and in-depth analysis on real-world and synthetic datasets show that BMGC achieves state-of-the-art performance, underscoring its superiority in addressing the view imbalance inherent in multi-relational graphs. The source code and datasets are available at https://github.com/zxlearningdeep/BMGC.
