Zhenyi He

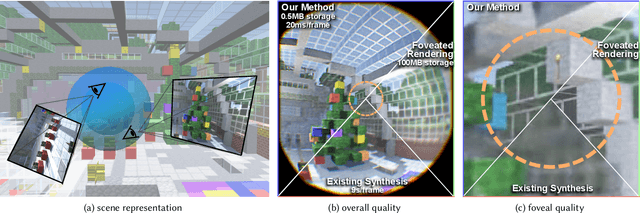

Foveated Neural Radiance Fields for Real-Time and Egocentric Virtual Reality

Mar 30, 2021

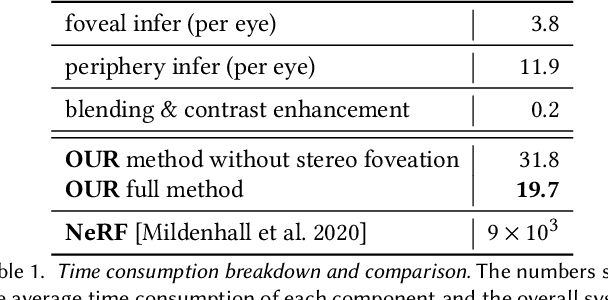

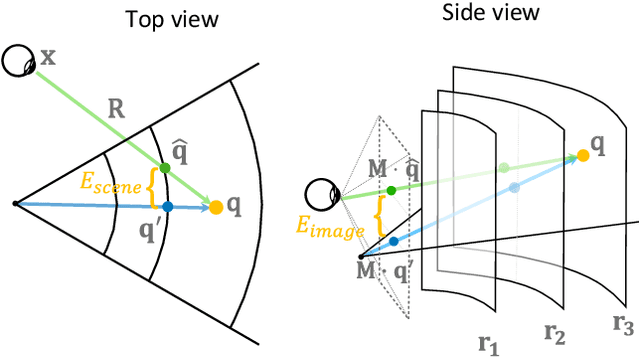

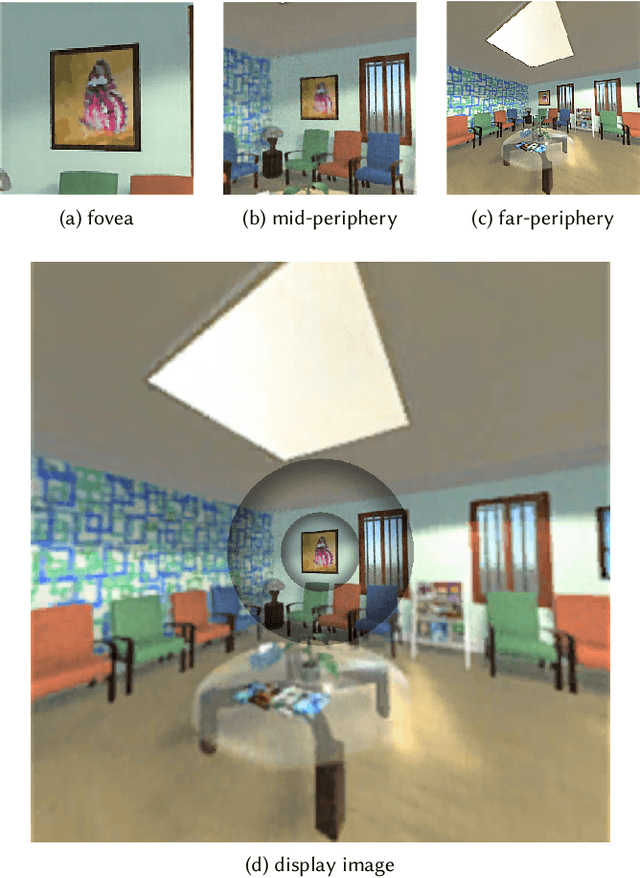

Abstract: Traditional high-quality 3D graphics requires large volumes of fine-detailed scene data for rendering. This demand compromises computational efficiency and local storage resources. Specifically, it becomes more concerning for future wearable and portable virtual and augmented reality (VR/AR) displays. Recent approaches to combat this problem include remote rendering/streaming and neural representations of 3D assets. These approaches have redefined the traditional local storage-rendering pipeline by distributed computing or compression of large data. However, these methods typically suffer from high latency or low quality for practical visualization of large immersive virtual scenes, notably with extra high resolution and refresh rate requirements for VR applications such as gaming and design. Tailored for the future portable, low-storage, and energy-efficient VR platforms, we present the first gaze-contingent 3D neural representation and view synthesis method. We incorporate the human psychophysics of visual- and stereo-acuity into an egocentric neural representation of 3D scenery. Furthermore, we jointly optimize the latency/performance and visual quality, while mutually bridging human perception and neural scene synthesis, to achieve perceptually high-quality immersive interaction. Both objective analysis and subjective study demonstrate the effectiveness of our approach in significantly reducing local storage volume and synthesis latency (up to 99% reduction in both data size and computational time), while simultaneously presenting high-fidelity rendering, with perceptual quality identical to that of fully locally stored and rendered high-quality imagery.
