Zeyad Osama Khalifa

Towards Visual Affordance Learning: A Benchmark for Affordance Segmentation and Recognition

Mar 26, 2022

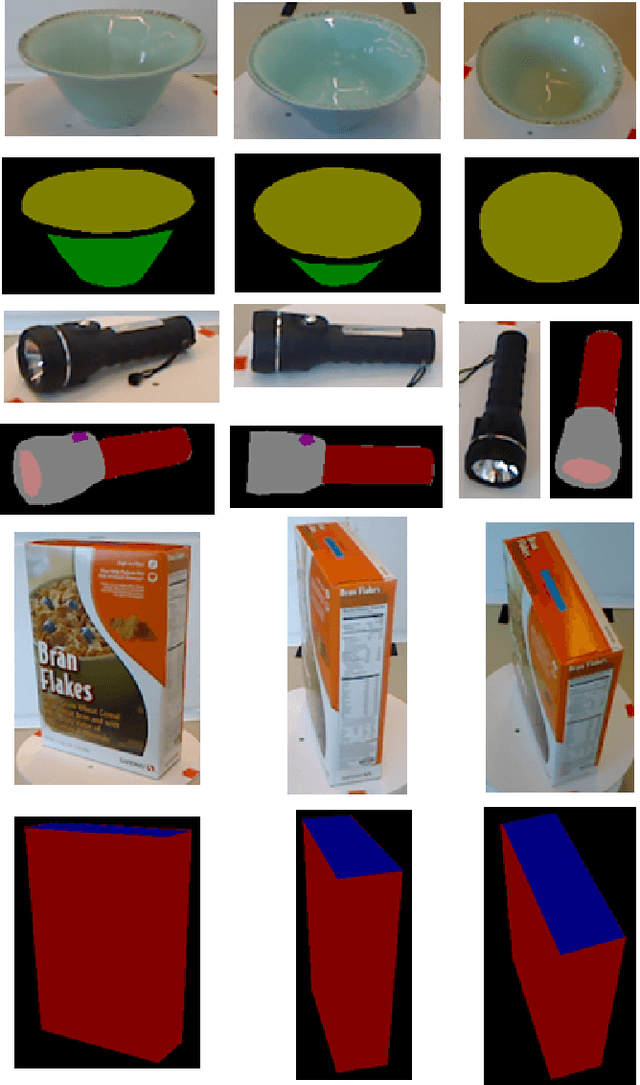

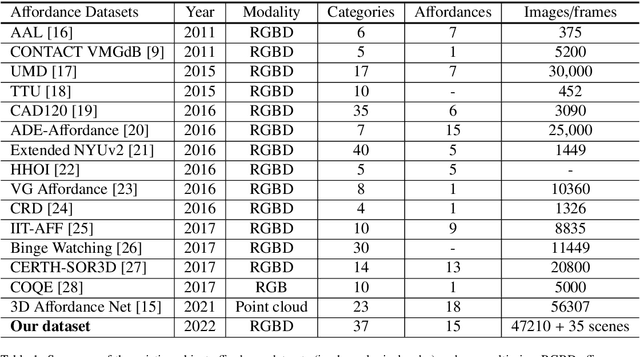

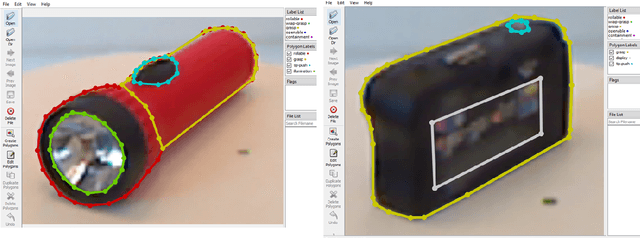

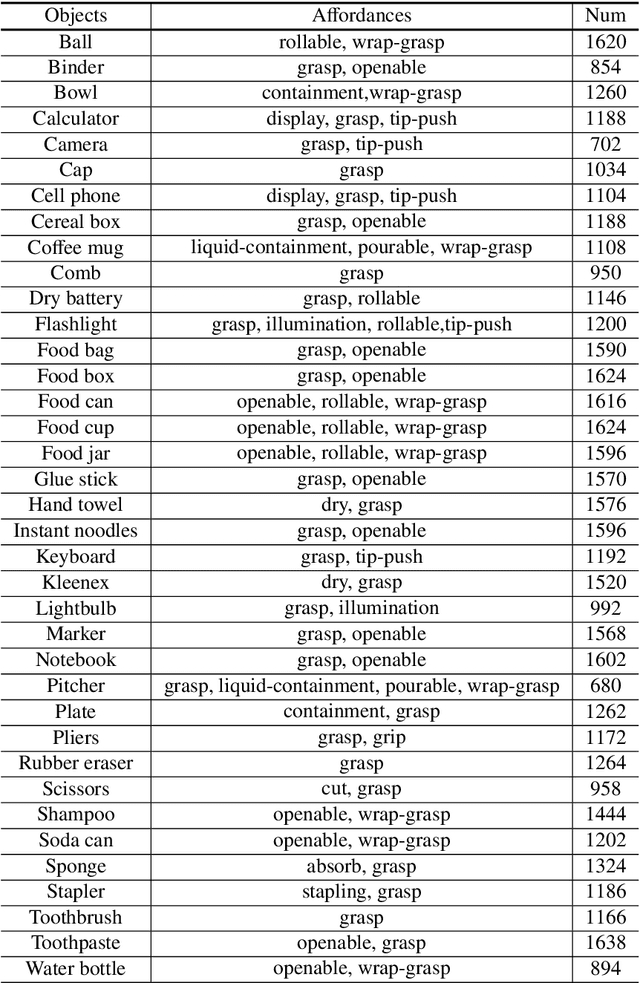

Abstract: The physical and textural attributes of objects have been widely studied for recognition, detection and segmentation tasks in computer vision. Several datasets, such as the large-scale ImageNet, have been proposed for feature learning with data-hungry deep neural networks and for hand-crafted feature extraction. To interact intelligently with objects, robots and intelligent machines need the ability to reason beyond these traditional physical and textural attributes and to learn visual cues, called visual affordances, for affordance recognition, detection and segmentation. To date, there is no publicly available large dataset for visual affordance understanding and learning. In this paper, we introduce a large-scale multi-view RGBD visual affordance learning dataset, a benchmark of 47210 RGBD images from 37 object categories, annotated with 15 visual affordance categories, together with 35 cluttered/complex scenes containing different objects and multiple affordances. To the best of our knowledge, this is the first and the largest multi-view RGBD visual affordance learning dataset. We benchmark the proposed dataset for affordance recognition and segmentation. For recognition, we propose an Affordance Recognition Network (ARNet). In addition, four state-of-the-art deep learning networks are evaluated on the affordance segmentation task. Our experimental results showcase the challenging nature of the dataset and present clear prospects for new and robust affordance learning algorithms. The dataset is available at: https://sites.google.com/view/afaqshah/dataset.
