Zareen Alamgir

A Payload Optimization Method for Federated Recommender Systems

Jul 27, 2021

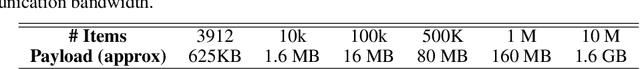

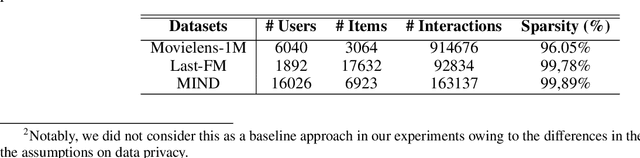

Abstract: We introduce a payload optimization method for federated recommender systems (FRS). In federated learning (FL), the global model payload exchanged between the server and the users depends on the number of items to recommend, so the payload grows as the number of items increases. This becomes challenging for an FRS running in production. To tackle the payload challenge, we formulated a multi-armed bandit solution that selects part of the global model and transmits it to all users. The selection process is guided by a novel reward function suitable for FL systems. To the best of our knowledge, this is the first optimization method that addresses item-dependent payloads. The method was evaluated on three benchmark recommendation datasets. The empirical validation confirmed that the proposed method outperforms simpler methods that do not use bandits for item selection. In addition, we demonstrate the usefulness of the proposed method by rigorously evaluating the effect of payload reduction on recommendation performance. Our method achieved up to a 90% reduction in model payload while incurring only a ~4% to 8% loss in recommendation performance on highly sparse datasets.

* 15 pages, 3 figures, 4 tables
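
The idea of treating each item as a bandit arm and transmitting only a selected fraction of the item-dependent model can be sketched roughly as follows. This is only an illustration under stated assumptions, not the paper's algorithm: the abstract does not specify the bandit policy or the reward function, so the sketch swaps in a plain epsilon-greedy policy, and `placeholder_reward`, `select_items`, `update_estimates`, and all parameter values are hypothetical names and numbers chosen for the example.

```python
import random


def select_items(q_values, payload_fraction, epsilon, rng):
    """Choose which item indices to transmit this round.

    Assumption: a simple epsilon-greedy policy over items (arms).
    The paper's actual selection policy is not given in the abstract.
    """
    num_items = len(q_values)
    budget = max(1, round(num_items * payload_fraction))
    # Items ranked by current reward estimate, best first.
    ranked = sorted(range(num_items), key=lambda i: q_values[i], reverse=True)
    chosen, chosen_set = [], set()
    for _ in range(budget):
        remaining = [i for i in ranked if i not in chosen_set]
        if rng.random() < epsilon:
            pick = rng.choice(remaining)   # explore: random unchosen item
        else:
            pick = remaining[0]            # exploit: best unchosen item
        chosen.append(pick)
        chosen_set.add(pick)
    return chosen


def update_estimates(q_values, counts, rewards):
    """Incremental mean update of the reward estimate for each selected item."""
    for item, reward in rewards.items():
        counts[item] += 1
        q_values[item] += (reward - q_values[item]) / counts[item]


def placeholder_reward(item, rng):
    """Hypothetical stand-in for the paper's reward function (not specified here)."""
    return rng.random()


rng = random.Random(0)
num_items = 1000
q_values = [0.0] * num_items
counts = [0] * num_items

for _ in range(5):
    # Transmit only 10% of the item-dependent parameters (a 90% payload cut).
    chosen = select_items(q_values, payload_fraction=0.1, epsilon=0.2, rng=rng)
    rewards = {i: placeholder_reward(i, rng) for i in chosen}
    update_estimates(q_values, counts, rewards)
```

In a real federated setting the reward would come from feedback aggregated across users rather than from a random draw. The random stand-in only keeps the sketch self-contained.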
