Yulu Jin

Fairness-aware Regression Robust to Adversarial Attacks

Nov 04, 2022

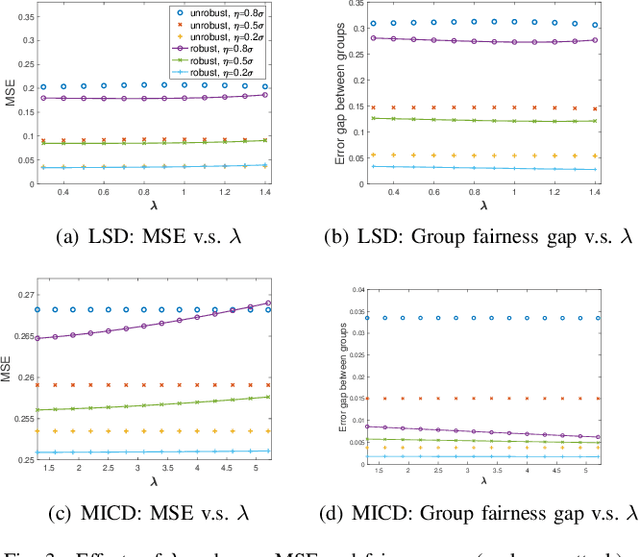

Abstract: In this paper, we take a first step towards answering the question of how to design fair machine learning algorithms that are robust to adversarial attacks. Using a minimax framework, we aim to design an adversarially robust fair regression model that achieves optimal performance in the presence of an attacker who is able to add a carefully designed adversarial data point to the dataset or perform a rank-one attack on the dataset. By solving the proposed nonsmooth nonconvex-nonconcave minimax problem, we obtain both the optimal adversary and the robust fairness-aware regression model. On both synthetic and real-world datasets, numerical results show that the proposed adversarially robust fair models outperform other fair machine learning models on poisoned datasets in both prediction accuracy and group-based fairness measures.
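The order of the two optimizations in this minimax setup can be illustrated with a toy sketch. Everything below is an assumption for illustration and is not the paper's method. It uses a scalar linear model y_hat = w * x and a squared loss plus a nonsmooth penalty on the gap between the two groups' mean predictions. The adversary may add one point drawn from a small finite candidate set, and exhaustive grid search stands in for the paper's solver for the nonconvex-nonconcave problem. The helper names `fair_loss` and `robust_fair_fit` and the toy data are hypothetical.

```python
from itertools import product

# Hypothetical sample format: (feature x, target y, protected group g in {0, 1}).
Sample = tuple[float, float, int]


def fair_loss(w: float, data: list[Sample], lam: float) -> float:
    """Assumed objective: MSE of y_hat = w * x plus lam * |gap between group mean predictions|."""
    mse = sum((w * x - y) ** 2 for x, y, _ in data) / len(data)
    group_means = []
    for group in (0, 1):
        preds = [w * x for x, _, g in data if g == group]
        # An empty group contributes a mean prediction of 0.0 (a simplifying assumption).
        group_means.append(sum(preds) / len(preds) if preds else 0.0)
    return mse + lam * abs(group_means[0] - group_means[1])


def robust_fair_fit(data: list[Sample], weights: list[float],
                    candidates: list[Sample], lam: float) -> tuple[float, float, Sample]:
    """Grid-search minimax: min over w of the max over one added point of fair_loss."""
    best_w, best_val, best_adv = weights[0], float("inf"), candidates[0]
    for w in weights:
        # Inner maximization: the adversary adds the single most damaging candidate point.
        adv = max(candidates, key=lambda p: fair_loss(w, data + [p], lam))
        val = fair_loss(w, data + [adv], lam)
        # Outer minimization: keep the weight with the smallest worst-case loss.
        if val < best_val:
            best_w, best_val, best_adv = w, val, adv
    return best_w, best_val, best_adv


# Toy clean dataset (made up for illustration only).
clean = [(1.0, 1.0, 0), (2.0, 2.1, 0), (1.0, 0.9, 1), (2.0, 1.9, 1)]
weights = [i / 10 for i in range(21)]  # w in {0.0, 0.1, ..., 2.0}
candidates = [(x, y, g) for x, y, g in product([0.0, 1.0, 2.0], [-2.0, 0.0, 2.0], [0, 1])]
w, val, adv = robust_fair_fit(clean, weights, candidates, lam=0.5)
print(f"robust w = {w}, worst-case loss = {val:.3f}, adversarial point = {adv}")
```

Grid search keeps the inner maximization (the attacker) and the outer minimization (the learner) explicit. It scales poorly, so it is only meant to make the structure of the problem concrete.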

Test Set Optimization by Machine Learning Algorithms

Oct 28, 2020

Abstract: Diagnosis results are highly dependent on the volume of the test set. To derive the most efficient test set, we propose several machine-learning-based methods to predict the minimum amount of test data that produces a relatively accurate diagnosis. From the outputs collected from failing circuits, we generate a feature matrix and a label vector that encode information for inferring the test termination point. We then develop a prediction model that fits these data and determines when to terminate testing. The considered methods include LASSO and Support Vector Machine (SVM), where the relationship between the goals (labels) and the predictors (feature matrix) is assumed to be linear in LASSO and nonlinear in SVM. Numerical results show that SVM reaches a diagnosis accuracy of 90.4% while reducing the volume of the test set by 35.24%.
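The linear (LASSO) half of this approach can be sketched as follows. This is an illustrative assumption, not the paper's code. It fits a LASSO by cyclic coordinate descent in plain Python. A made-up two-column matrix stands in for the features extracted from failing-circuit outputs, and a made-up label vector stands in for the test termination points. The nonlinear SVM model is not shown. `soft_threshold`, `lasso_cd`, and `predict` are hypothetical helper names.

```python
def soft_threshold(rho: float, alpha: float) -> float:
    """Proximal operator of alpha * |b|: shrink rho toward zero by alpha."""
    if rho > alpha:
        return rho - alpha
    if rho < -alpha:
        return rho + alpha
    return 0.0


def lasso_cd(X: list[list[float]], y: list[float], alpha: float,
             n_iter: int = 100) -> list[float]:
    """Cyclic coordinate descent for (1/2n)||y - X b||^2 + alpha * ||b||_1 (no intercept)."""
    n, p = len(X), len(X[0])
    beta = [0.0] * p
    for _ in range(n_iter):
        for j in range(p):
            rho, z = 0.0, 0.0
            for i in range(n):
                # Partial residual: target minus the prediction from every feature except j.
                partial = y[i] - sum(X[i][k] * beta[k] for k in range(p) if k != j)
                rho += X[i][j] * partial
                z += X[i][j] ** 2
            # Closed-form coordinate update; an all-zero column keeps a zero coefficient.
            beta[j] = soft_threshold(rho / n, alpha) / (z / n) if z > 0 else 0.0
    return beta


def predict(X: list[list[float]], beta: list[float]) -> list[float]:
    """Linear prediction of the (hypothetical) termination point for each row."""
    return [sum(xk * bk for xk, bk in zip(row, beta)) for row in X]


# Synthetic toy data: each row is a hypothetical feature vector for one failing circuit,
# and y holds its hypothetical test termination point.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.0, 1.0]]
y = [2.0, 0.0, 2.0, 0.0]
beta = lasso_cd(X, y, alpha=0.1)
print(beta, predict(X, beta))
```

The L1 penalty shrinks the useful coefficient and sets the coefficient of the uninformative second feature to exactly zero. This sparsity is what makes LASSO a natural linear baseline. In practice, a library implementation such as scikit-learn's `Lasso`, which minimizes the same objective, would normally be used instead.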
