Yuhong Luo

No Need to Look Back: An Efficient and Scalable Approach for Temporal Network Representation Learning

Feb 03, 2024

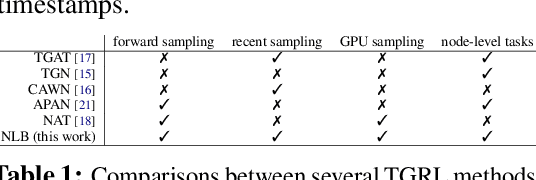

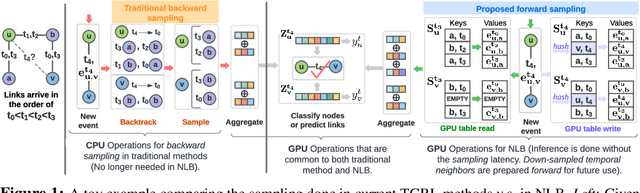

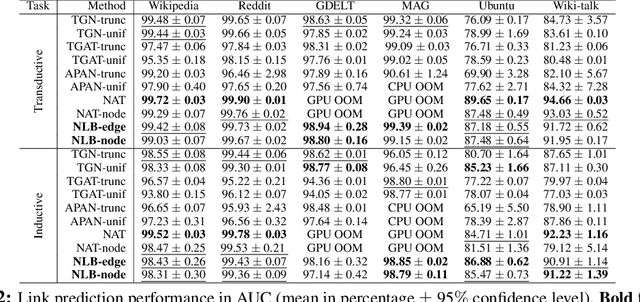

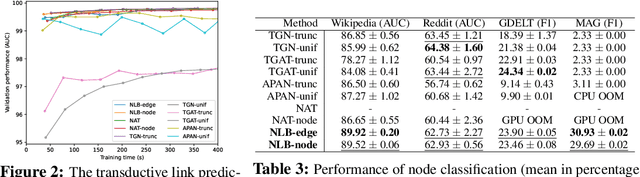

Abstract: Temporal graph representation learning (TGRL) is crucial for modeling complex, dynamic systems in real-world networks. Traditional TGRL methods, though effective, suffer from high computational demands and inference latency. The main cause is inefficient sampling of temporal neighbors: these methods backtrack through the interaction history of each node at inference time. This paper introduces a novel efficient TGRL framework, No-Looking-Back (NLB). NLB employs a "forward recent sampling" strategy, which bypasses the need for backtracking historical interactions. This strategy is implemented using a GPU-executable size-constrained hash table for each node, recording down-sampled recent interactions, which enables rapid response to queries with minimal inference latency. The maintenance of this hash table is highly efficient, with $O(1)$ complexity. NLB is fully compatible with GPU processing, maximizing programmability, parallelism, and power efficiency. Empirical evaluations demonstrate that NLB matches or surpasses state-of-the-art methods in accuracy for link prediction and node classification across six real-world datasets. Significantly, it is 1.32-4.40 $\times$ faster in training, 1.2-7.94 $\times$ more energy efficient, and 1.97-5.02 $\times$ more effective in reducing inference latency compared to the most competitive baselines. The link to the code: https://github.com/Graph-COM/NLB.
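The per-node, size-constrained hash table described in the abstract can be illustrated with a small CPU-only sketch. This is not the NLB implementation: the class name `RecentNeighborTable`, the modulo slot function, and the `replace_prob` down-sampling rule are all assumptions chosen to show how a constant-time forward update might avoid scanning interaction history.

```python
import random


class RecentNeighborTable:
    """Illustrative fixed-size table of recent neighbors per node.

    Hypothetical sketch: slot choice (v % size) and the replacement
    probability are assumptions, not taken from the NLB paper.
    """

    def __init__(self, num_nodes, size, replace_prob=1.0, seed=0):
        self.size = size
        self.replace_prob = replace_prob
        self.rng = random.Random(seed)
        # slots[u][k] holds a (neighbor, timestamp) pair or None.
        self.slots = [[None] * size for _ in range(num_nodes)]

    def _update_one(self, u, v, t):
        k = v % self.size  # constant-time slot lookup
        # Fill an empty slot, or overwrite with probability replace_prob.
        if self.slots[u][k] is None or self.rng.random() < self.replace_prob:
            self.slots[u][k] = (v, t)

    def observe(self, u, v, t):
        """Record interaction (u, v, t) as it arrives; no history scan."""
        self._update_one(u, v, t)
        self._update_one(v, u, t)

    def neighbors(self, u):
        """Return the stored (neighbor, timestamp) pairs for node u."""
        return [entry for entry in self.slots[u] if entry is not None]


table = RecentNeighborTable(num_nodes=4, size=2)
table.observe(0, 1, 1.0)
table.observe(0, 3, 2.0)  # collides with neighbor 1 and replaces it
table.observe(0, 2, 3.0)
print(table.neighbors(0))
```

Each update touches one slot per endpoint, so its cost does not grow with the length of a node's history; a query reads at most `size` entries.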

Learning Fair Representations with High-Confidence Guarantees

Oct 23, 2023

Abstract: Representation learning is increasingly employed to generate representations that are predictive across multiple downstream tasks. The development of representation learning algorithms that provide strong fairness guarantees is thus important because it can prevent unfairness towards disadvantaged groups for all downstream prediction tasks. In this paper, we formally define the problem of learning representations that are fair with high confidence. We then introduce the Fair Representation learning with high-confidence Guarantees (FRG) framework, which provides high-confidence guarantees for limiting unfairness across all downstream models and tasks, with user-defined upper bounds. After proving that FRG ensures fairness for all downstream models and tasks with high probability, we present empirical evaluations that demonstrate FRG's effectiveness at upper bounding unfairness for multiple downstream models and tasks.

Neighborhood-aware Scalable Temporal Network Representation Learning

Sep 05, 2022

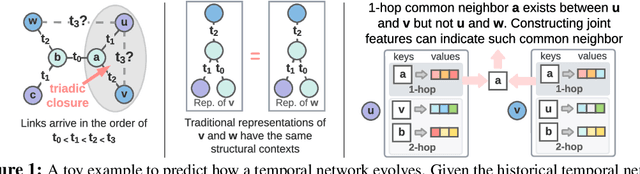

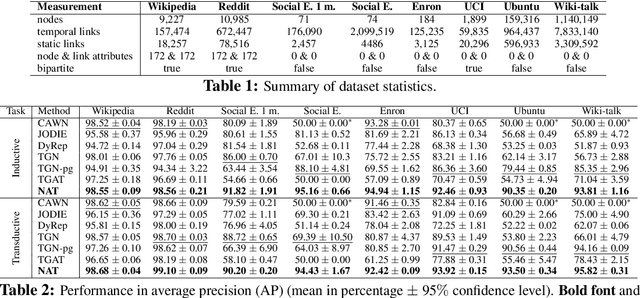

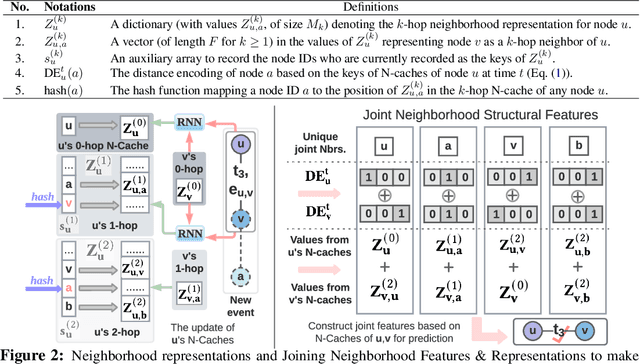

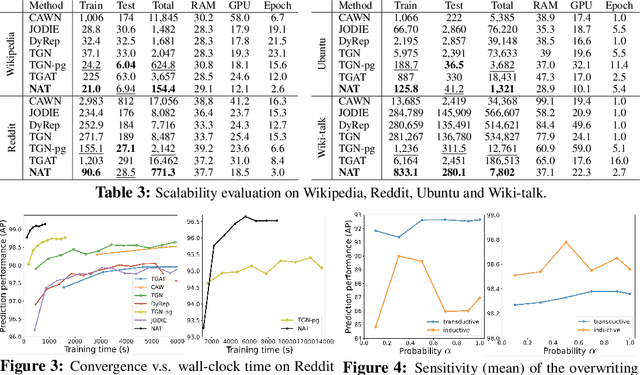

Abstract: Temporal networks have been widely used to model real-world complex systems such as financial systems and e-commerce systems. In a temporal network, the joint neighborhood of a set of nodes often provides crucial structural information for predicting whether they may interact at a certain time. However, recent representation learning methods for temporal networks often fail to extract such information or depend on extremely time-consuming feature construction approaches. To address the issue, this work proposes the Neighborhood-Aware Temporal network model (NAT). For each node in the network, NAT abandons the commonly used single-vector representation and adopts a novel dictionary-type neighborhood representation. Such a dictionary representation records a down-sampled set of the neighboring nodes as keys, and allows fast construction of structural features for a joint neighborhood of multiple nodes. We also design a dedicated data structure termed N-cache to support parallel access and update of those dictionary representations on GPUs. NAT is evaluated on seven real-world large-scale temporal networks. NAT not only outperforms all cutting-edge baselines by an average of 5.9% and 6.0% in transductive and inductive link prediction accuracy, respectively, but also remains scalable, achieving a speed-up of 4.1-76.7x against the baselines that adopt joint structural features and a speed-up of 1.6-4.0x against the baselines that cannot adopt those features. The link to the code: https://github.com/Graph-COM/Neighborhood-Aware-Temporal-Network.
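As a rough illustration of why keying a node's representation by its neighbors makes joint-neighborhood features cheap to build, the sketch below compares two per-node dictionaries by their keys. The function name `joint_neighborhood_features`, the three counts it returns, and the placeholder values stored in the dictionaries are assumptions for illustration; they are not NAT's actual features or its N-cache layout.

```python
def joint_neighborhood_features(dict_u, dict_v):
    """Count shared and exclusive neighbor keys of two nodes.

    dict_u and dict_v map neighbor id -> stored representation
    (hypothetical layout; the values are not used here).
    """
    keys_u, keys_v = set(dict_u), set(dict_v)
    common = keys_u & keys_v
    return {
        "common": len(common),
        "only_u": len(keys_u - common),
        "only_v": len(keys_v - common),
    }


# Down-sampled neighborhoods of two nodes, keyed by neighbor id.
neighbors_u = {3: "h3", 5: "h5", 7: "h7"}
neighbors_v = {5: "h5", 7: "h7", 9: "h9"}
print(joint_neighborhood_features(neighbors_u, neighbors_v))
```

Because each dictionary holds a bounded, down-sampled key set, the comparison cost depends on the dictionary size rather than on the full interaction history of either node.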
