Yuanyuan Shen

On the Dimensionality of Word Embedding

Dec 11, 2018

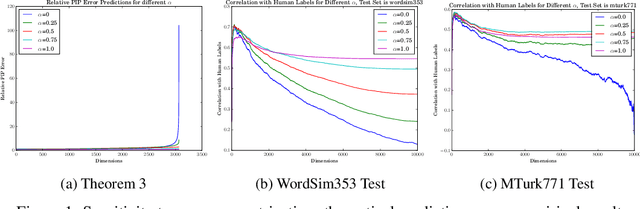

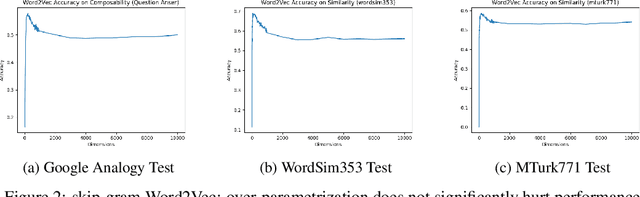

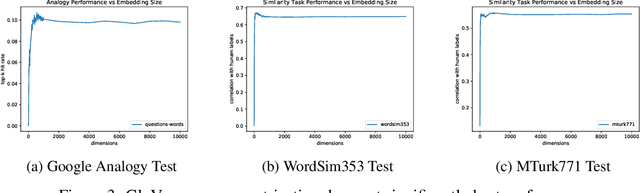

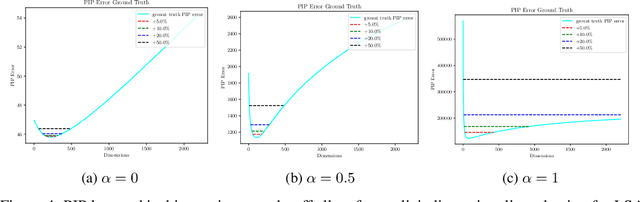

Abstract: In this paper, we provide a theoretical understanding of word embedding and its dimensionality. Motivated by the unitary-invariance of word embedding, we propose the Pairwise Inner Product (PIP) loss, a novel metric on the dissimilarity between word embeddings. Using techniques from matrix perturbation theory, we reveal a fundamental bias-variance trade-off in dimensionality selection for word embeddings. This bias-variance trade-off sheds light on many empirical observations which were previously unexplained, for example the existence of an optimal dimensionality. Moreover, new insights and discoveries, like when and how word embeddings are robust to over-fitting, are revealed. By optimizing over the bias-variance trade-off of the PIP loss, we can explicitly answer the open question of dimensionality selection for word embedding.
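The unitary-invariance idea behind the PIP loss can be illustrated with a minimal sketch. It assumes the PIP loss is the Frobenius norm of the difference between the two embeddings' pairwise inner product matrices, a definition this abstract does not spell out. The function names `pip_matrix` and `pip_loss`, the random data, and the sizes are all illustrative assumptions, not the authors' code.

```python
import numpy as np


def pip_matrix(E):
    """Pairwise inner product matrix E E^T for an (n_words, dim) embedding."""
    return E @ E.T


def pip_loss(E1, E2):
    """Assumed PIP loss: Frobenius norm of the difference of the PIP matrices.

    E1 and E2 must cover the same n words but may have different dimensions,
    since both PIP matrices are n x n.
    """
    return np.linalg.norm(pip_matrix(E1) - pip_matrix(E2), ord="fro")


rng = np.random.default_rng(0)
E = rng.standard_normal((50, 8))  # hypothetical embedding: 50 words, 8 dims

# A random orthogonal matrix Q, obtained from a QR decomposition.
Q, _ = np.linalg.qr(rng.standard_normal((8, 8)))

E_rotated = E @ Q  # same embedding, rotated
E_noisy = E + 0.1 * rng.standard_normal(E.shape)  # perturbed embedding
E_truncated = E[:, :4]  # lower-dimensional embedding

loss_rotated = pip_loss(E, E_rotated)
loss_noisy = pip_loss(E, E_noisy)
loss_truncated = pip_loss(E, E_truncated)
```

Under this assumed definition, `loss_rotated` is zero up to floating-point error because rotating an embedding leaves all pairwise inner products unchanged, while the noisy and truncated embeddings give strictly positive losses. Comparing embeddings of different dimensionality this way is what makes the loss usable for dimensionality selection.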

A Deep Causal Inference Approach to Measuring the Effects of Forming Group Loans in Online Non-profit Microfinance Platform

Jun 08, 2017

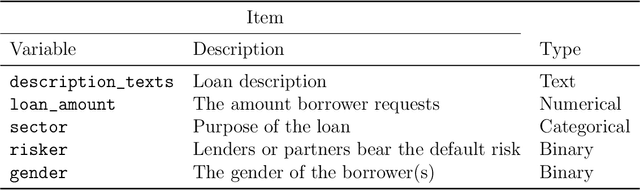

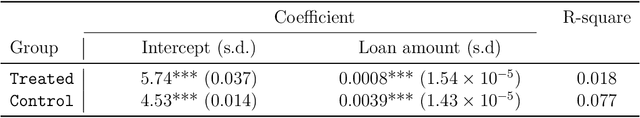

Abstract: Kiva is an online non-profit crowdsourcing microfinance platform that raises funds for the poor in the third world. The borrowers on Kiva are small business owners and individuals in urgent need of money. To raise funds as fast as possible, they have the option to form groups and post loan requests in the name of their groups. While it is generally believed that group loans pose less risk for investors than individual loans do, we study whether this is the case in a philanthropic online marketplace. In particular, we measure the effect of group loans on funding time while controlling for loan size and other factors. Because loan descriptions (in the form of text) play an important role in lenders' decision process on Kiva, we make use of this information through deep learning in natural language processing. In this respect, this is the first paper that uses one of the most advanced deep learning techniques to deal with unstructured data in a way that can take advantage of its superior predictive power to answer causal questions. We find that, on average, forming group loans shortens the funding time by about 3.3 days.
