Youyang Sha

FSOD-VFM: Few-Shot Object Detection with Vision Foundation Models and Graph Diffusion

Feb 03, 2026

Abstract: In this paper, we present FSOD-VFM: Few-Shot Object Detectors with Vision Foundation Models, a framework that leverages vision foundation models to tackle the challenge of few-shot object detection. FSOD-VFM integrates three key components: a universal proposal network (UPN) for category-agnostic bounding box generation, SAM2 for accurate mask extraction, and DINOv2 features for efficient adaptation to new object categories. Despite the strong generalization capabilities of foundation models, the bounding boxes generated by UPN often suffer from overfragmentation, covering only partial object regions and leading to numerous small, false-positive proposals rather than accurate, complete object detections. To address this issue, we introduce a novel graph-based confidence reweighting method. In our approach, predicted bounding boxes are modeled as nodes in a directed graph, with graph diffusion operations applied to propagate confidence scores across the network. This reweighting process refines the scores of proposals, assigning higher confidence to whole objects and lower confidence to local, fragmented parts. This strategy improves detection granularity and effectively reduces the occurrence of false-positive bounding box proposals. Through extensive experiments on Pascal-5$^i$, COCO-20$^i$, and CD-FSOD datasets, we demonstrate that our method substantially outperforms existing approaches, achieving superior performance without requiring additional training. Notably, on the challenging CD-FSOD dataset, which spans multiple datasets and domains, our FSOD-VFM achieves 31.6 AP in the 10-shot setting, substantially outperforming previous training-free methods that reach only 21.4 AP. Code is available at: https://intellindust-ai-lab.github.io/projects/FSOD-VFM.
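The abstract does not spell out the graph construction or the diffusion rule, so the following is only a minimal sketch of the general idea, not the method used in FSOD-VFM. It assumes a directed edge from box i to box j whenever box i lies mostly inside a strictly larger box j, and a simple damped propagation of scores along those edges. The names `containment` and `diffuse_scores` and the parameters `alpha`, `steps`, and `thresh` are hypothetical.

```python
import numpy as np

def containment(boxes):
    """C[i, j] = fraction of box i's area that lies inside box j.

    boxes: (n, 4) array of [x1, y1, x2, y2].
    """
    x1, y1, x2, y2 = boxes.T
    area = (x2 - x1) * (y2 - y1)
    iw = np.clip(np.minimum(x2[:, None], x2[None, :])
                 - np.maximum(x1[:, None], x1[None, :]), 0, None)
    ih = np.clip(np.minimum(y2[:, None], y2[None, :])
                 - np.maximum(y1[:, None], y1[None, :]), 0, None)
    return iw * ih / area[:, None], area

def diffuse_scores(boxes, scores, alpha=0.5, steps=10, thresh=0.8):
    """Illustrative confidence reweighting by diffusion on a directed graph.

    Assumption (not from the paper): edge i -> j exists when box i is
    mostly contained in a strictly larger box j, so fragments pass
    confidence to the box that encloses them.
    """
    C, area = containment(boxes)
    A = ((C >= thresh) & (area[:, None] < area[None, :])).astype(float)
    # Row-normalise so each fragment splits its outgoing confidence.
    out = A.sum(axis=1, keepdims=True)
    P = np.divide(A, out, out=np.zeros_like(A), where=out > 0)
    s = scores.copy()
    for _ in range(steps):
        # Keep part of the original score, receive the rest from fragments.
        s = (1 - alpha) * scores + alpha * (P.T @ s)
    return s

# One whole-object box and two fragments that initially score higher.
boxes = np.array([[0, 0, 10, 10], [0, 0, 4, 4], [5, 5, 10, 10]], dtype=float)
scores = np.array([0.5, 0.6, 0.6])
new_scores = diffuse_scores(boxes, scores)
```

In this toy case the enclosing box ends up ranked above both fragments, which is the qualitative behaviour the abstract describes; the actual edge weights and update rule in the paper may differ.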

Transformer-Unet: Raw Image Processing with Unet

Sep 17, 2021

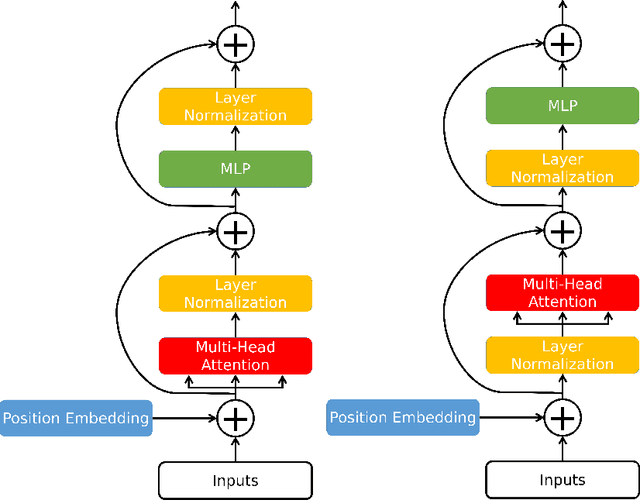

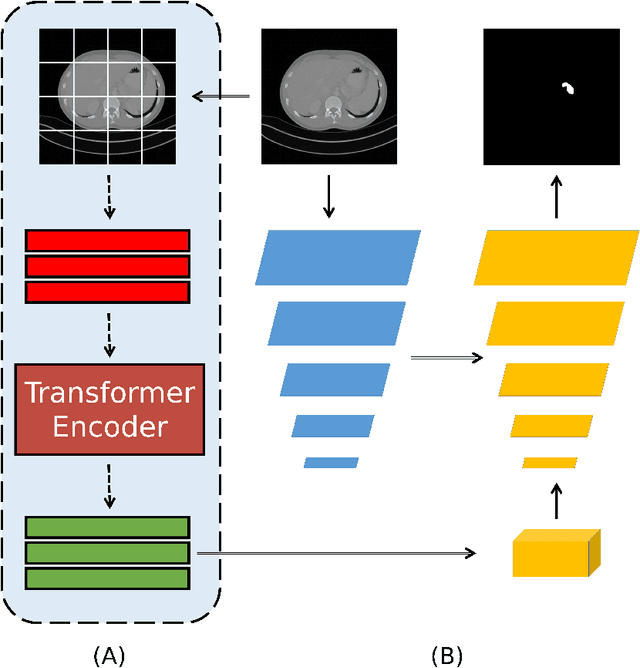

Abstract: Medical image segmentation has drawn massive attention because of its importance in biomedical image analysis. Good segmentation results can assist doctors with their judgement and further improve patients' experience. Among the many available pipelines in medical image analysis, Unet is one of the most popular neural networks: it keeps raw features by adding concatenation between encoder and decoder, which is why it is still widely used in industry. Meanwhile, the transformer, a popular model that dominates natural language processing tasks, has been introduced to computer vision and has shown promising results in object detection, image classification, and semantic segmentation. The combination of transformer and Unet is therefore expected to be more efficient than either method working individually. In this article, we propose Transformer-Unet, which adds transformer modules on raw images instead of on feature maps in Unet, and we test our network on the CT82 dataset for pancreas segmentation. We form an end-to-end network and obtain segmentation results better than many previous Unet-based algorithms in our experiments. We describe our network and present our experimental results in this paper.
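To make "transformer modules on raw images" concrete, here is a rough NumPy sketch of one way raw pixels can be turned into tokens and passed through a single self-attention step before any convolutional feature extraction. It is an illustration under assumptions, not the Transformer-Unet architecture: the patch size, the random projection matrices, and the helper names `patchify` and `self_attention` are all hypothetical.

```python
import numpy as np

def patchify(image, patch):
    """Split an (H, W) image into non-overlapping flattened patches.

    Returns an array of shape (num_patches, patch * patch).
    """
    h, w = image.shape
    assert h % patch == 0 and w % patch == 0
    grid = image.reshape(h // patch, patch, w // patch, patch)
    return grid.transpose(0, 2, 1, 3).reshape(-1, patch * patch)

def self_attention(tokens, wq, wk, wv):
    """Single-head scaled dot-product self-attention over patch tokens."""
    q, k, v = tokens @ wq, tokens @ wk, tokens @ wv
    logits = q @ k.T / np.sqrt(q.shape[-1])
    logits = logits - logits.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(logits)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ v

rng = np.random.default_rng(0)
image = rng.random((32, 32))      # stand-in for a raw CT slice
tokens = patchify(image, 8)       # raw-pixel tokens, no CNN features
d = tokens.shape[1]
# Random projections stand in for learned weights (assumption).
wq, wk, wv = (rng.normal(scale=d ** -0.5, size=(d, d)) for _ in range(3))
attended = self_attention(tokens, wq, wk, wv)
```

In a full model the attended tokens would presumably be reshaped and combined with a Unet encoder-decoder; how that is done is not stated in the abstract.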
