Yousuf M. Khalifa

Relative Afferent Pupillary Defect Screening through Transfer Learning

Aug 06, 2019

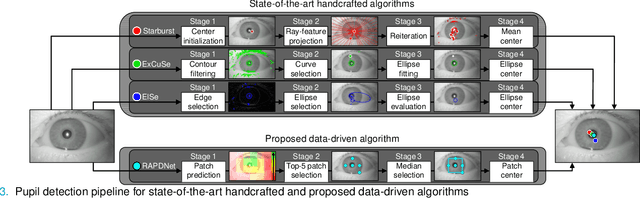

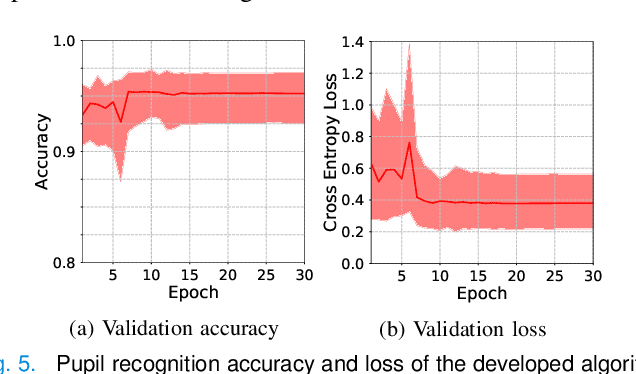

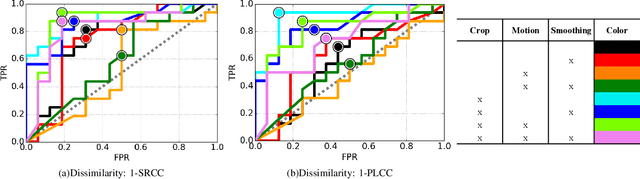

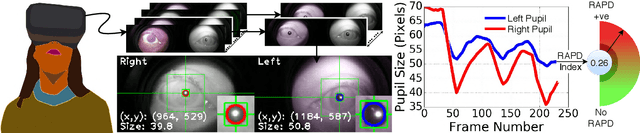

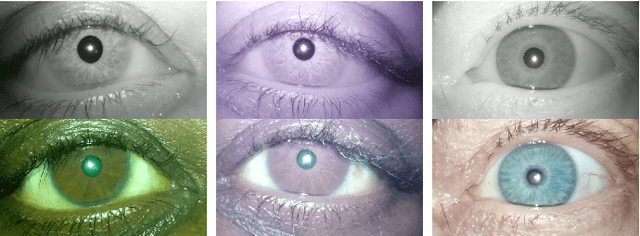

Abstract: Abnormalities in the pupillary light reflex can indicate optic nerve disorders that may lead to permanent visual loss if not diagnosed at an early stage. In this study, we focus on relative afferent pupillary defect (RAPD), which is based on the difference between the reactions of the eyes when they are exposed to light stimuli. Incumbent RAPD assessment methods are based on subjective practices that can lead to unreliable measurements. To eliminate subjectivity and obtain reliable measurements, we introduced an automated framework to detect RAPD. For validation, we conducted a clinical study with lab-on-a-headset, which can perform an automated light reflex test. In addition to benchmarking handcrafted algorithms, we proposed a transfer learning-based approach that transformed a deep learning-based generic object recognition algorithm into a pupil detector. Based on the conducted experiments, the proposed algorithm, RAPDNet, can achieve a sensitivity and a specificity of 90.6% over 64 test cases in a balanced set, which corresponds to an AUC of 0.929 in ROC analysis. According to our benchmark with three handcrafted algorithms and nine performance metrics, RAPDNet outperforms all other algorithms in every performance category.
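For readers who want to relate the reported percentages to case counts, the following is a minimal sketch of how sensitivity and specificity are computed from a confusion matrix. The abstract does not give the confusion matrix. The counts below are an assumption: a balanced set of 64 cases would contain 32 RAPD-positive cases and 32 controls, and 29 correct out of 32 in each class is the split that rounds to 90.6%. The function name is hypothetical.

```python
def sensitivity_specificity(tp, fn, tn, fp):
    """Return (sensitivity, specificity) from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)  # fraction of RAPD-positive cases detected
    specificity = tn / (tn + fp)  # fraction of controls correctly cleared
    return sensitivity, specificity


# Assumed counts (not stated in the abstract): 32 positives, 32 controls,
# with 29 of each class classified correctly.
sens, spec = sensitivity_specificity(tp=29, fn=3, tn=29, fp=3)
print(f"sensitivity={sens:.1%}, specificity={spec:.1%}")
```

Under these assumed counts both values are 29/32 = 0.90625, which matches the 90.6% quoted in the abstract.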

Automated Pupillary Light Reflex Test on a Portable Platform

May 21, 2019

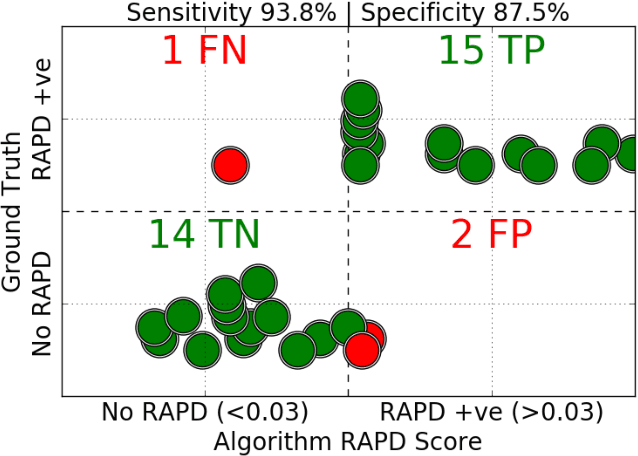

Abstract: In this paper, we introduce a portable eye imaging device denoted as lab-on-a-headset, which can automatically perform a swinging flashlight test. We utilized this device in a clinical study to obtain high-resolution recordings of eyes while they are exposed to varying light stimuli. Half of the participants had relative afferent pupillary defect (RAPD) while the other half was a control group. In the case of positive RAPD, the patient's pupils constrict less or do not constrict when the light stimulus swings from the unaffected eye to the affected eye. To automatically diagnose RAPD, we propose an algorithm based on pupil localization, pupil size measurement, and pupil size comparison of the right and left eyes during the light reflex test. We validate the algorithmic performance over a dataset obtained from 22 subjects and show that the proposed algorithm can achieve a sensitivity of 93.8% and a specificity of 87.5%.

* 7 pages, 11 figures, 3 tables
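The pupil size comparison step described in the abstract above can be illustrated with a small sketch. This is not the authors' algorithm: it assumes pupil diameters have already been measured per frame, and the function names, the relative-constriction measure, and the 0.15 threshold are all hypothetical choices made for illustration.

```python
def constriction_ratio(diameters):
    """Relative constriction of one trace: (baseline - minimum) / baseline.

    `diameters` is a sequence of pupil diameters; the first sample is
    treated as the pre-stimulus baseline (an assumption of this sketch).
    """
    baseline = diameters[0]
    return (baseline - min(diameters)) / baseline


def flag_rapd(when_left_lit, when_right_lit, threshold=0.15):
    """Compare responses to left-eye and right-eye illumination.

    Returns "left" or "right" for the eye whose illumination produces the
    weaker constriction, or None if the difference is below the
    (hypothetical) threshold.
    """
    diff = constriction_ratio(when_left_lit) - constriction_ratio(when_right_lit)
    if abs(diff) < threshold:
        return None
    return "right" if diff > 0 else "left"


# Made-up traces in millimetres: strong response when the left eye is lit,
# weak response when the right eye is lit.
result = flag_rapd([6.0, 4.0, 3.0], [6.0, 5.5, 5.4])
symmetric = flag_rapd([6.0, 4.0, 3.0], [6.0, 4.1, 3.1])
print(result, symmetric)
```

In this toy example the weaker constriction occurs when the right eye is illuminated, so the sketch flags the right eye. A real system would also need the pupil localization and size measurement stages that the abstract mentions.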
