Youngoh Bang

T-GD: Transferable GAN-generated Images Detection Framework

Aug 10, 2020

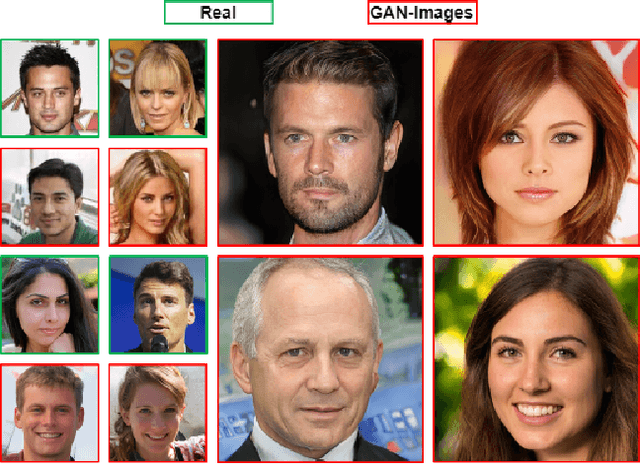

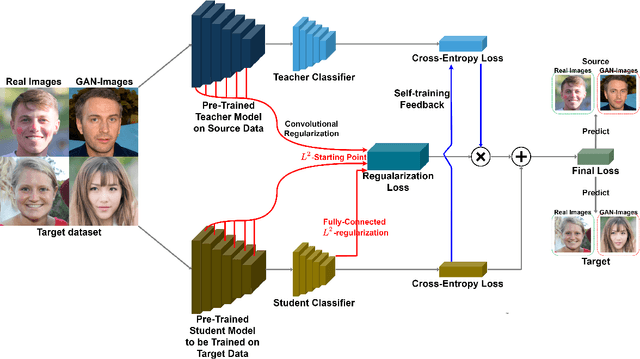

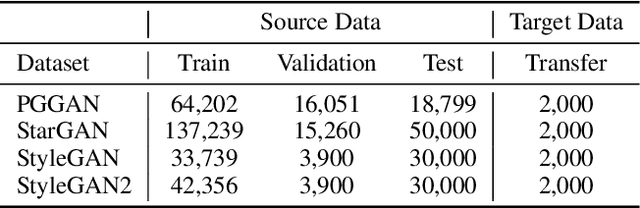

Abstract: Recent advancements in Generative Adversarial Networks (GANs) enable the generation of highly realistic images, raising concerns about their misuse for malicious purposes. Detecting these GAN-generated images (GAN-images) becomes increasingly challenging due to the significant reduction of underlying artifacts and specific patterns. The absence of such traces can hinder detection algorithms from identifying GAN-images and from transferring knowledge to identify other types of GAN-images. In this work, we present the Transferable GAN-images Detection framework (T-GD), a robust transferable framework for effective detection of GAN-images. T-GD is composed of a teacher and a student model that can iteratively teach and evaluate each other to improve the detection performance. First, we train the teacher model on the source dataset and use it as a starting point for learning the target dataset. To train the student model, we inject noise by mixing up the source and target datasets, while constraining the weight variation to preserve the starting point. Our approach is a self-training method, but distinguishes itself from prior approaches by focusing on improving the transferability of GAN-image detection. T-GD achieves high performance on the source dataset by overcoming catastrophic forgetting, and effectively detects state-of-the-art GAN-images with only a small volume of data and without any metadata information.
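The two ingredients the abstract names for training the student (mixing source and target samples, and constraining how far the weights move from the teacher's starting point) can be illustrated with a minimal sketch. The abstract does not give the exact formulas, so the code below assumes a mixup-style convex combination and a squared L2 penalty on the weight drift; the function names and the `lam` and `alpha` parameters are hypothetical and are not taken from the paper.

```python
def mixup(source_batch, target_batch, lam):
    """Blend paired source and target samples (assumed mixup-style noise).

    Each sample is a flat list of floats; `lam` in [0, 1] is the share
    taken from the source sample.
    """
    return [
        [lam * s + (1.0 - lam) * t for s, t in zip(src, tgt)]
        for src, tgt in zip(source_batch, target_batch)
    ]


def starting_point_penalty(weights, teacher_weights, alpha):
    """Assumed squared L2 penalty on drift from the teacher's weights."""
    return alpha * sum(
        (w - w0) ** 2 for w, w0 in zip(weights, teacher_weights)
    )


def student_loss(task_loss, weights, teacher_weights, alpha):
    """Total student objective: task loss plus the drift penalty."""
    return task_loss + starting_point_penalty(weights, teacher_weights, alpha)
```

In this sketch a larger `alpha` keeps the student closer to the teacher, which is one plausible way to limit forgetting of the source dataset while the mixed batches expose the student to the target dataset.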

FDFtNet: Facing Off Fake Images using Fake Detection Fine-tuning Network

Jan 05, 2020

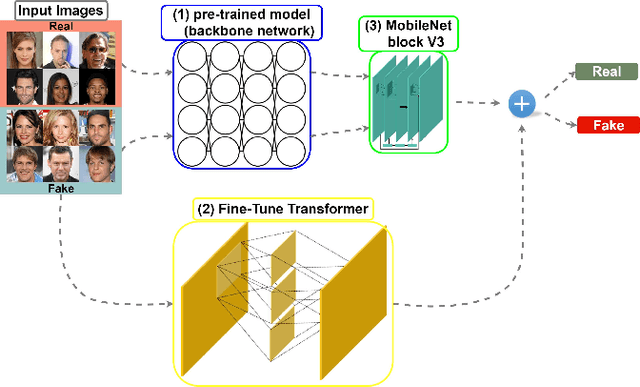

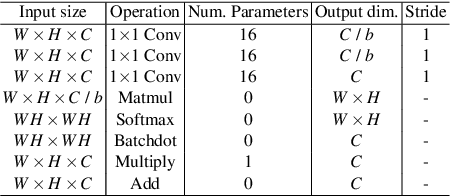

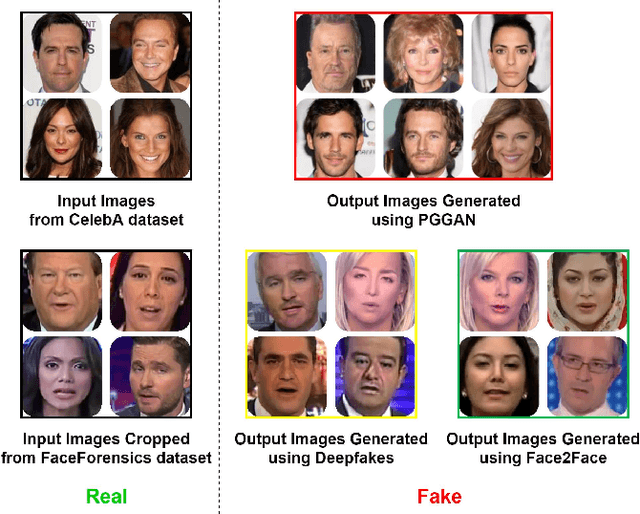

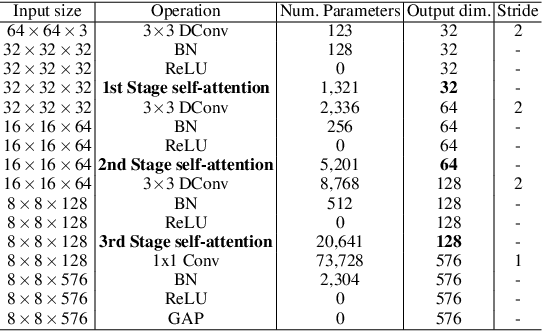

Abstract: Creating fake images and videos such as "Deepfake" has become much easier these days due to the advancement in Generative Adversarial Networks (GANs). Moreover, recent research such as few-shot learning can create highly realistic personalized fake images with only a few images. Therefore, the threat of Deepfakes being used for a variety of malicious purposes, such as propagating fake images and videos, has become prevalent. Detecting these machine-generated fake images has become more challenging than ever. In this work, we propose a light-weight, robust, fine-tuning neural network-based classifier architecture called Fake Detection Fine-tuning Network (FDFtNet), which is capable of detecting many of the new fake face image generation models, and can be easily combined with existing image classification networks and fine-tuned on a few datasets. In contrast to many existing methods, our approach aims to reuse popular pre-trained models with only a few images for fine-tuning to effectively detect fake images. The core of our approach is to introduce an image-based self-attention module called Fine-Tune Transformer that uses only the attention module and the down-sampling layer. This module is added to the pre-trained model and fine-tuned on a small amount of data to search for new feature spaces for detecting fake images. We experiment with our FDFtNet on the GANs-based dataset (Progressive Growing GAN) and Deepfake-based dataset (Deepfake and Face2Face) with a small input image resolution of 64x64 that complicates detection. Our FDFtNet achieves an overall accuracy of 90.29% in detecting fake images generated from the GANs-based dataset, outperforming the state-of-the-art.
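The abstract describes the Fine-Tune Transformer only as a self-attention module followed by a down-sampling layer. As a rough illustration of those two operations, the sketch below applies scaled dot-product self-attention to a short sequence of feature vectors and then averages neighbouring vectors. It assumes identity query, key, and value projections and average pooling; the actual module's layers, shapes, and learned parameters are not specified in the abstract, and the function names here are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Scaled dot-product self-attention with identity projections (assumed)."""
    dim = len(tokens[0])
    scale = math.sqrt(dim)
    output = []
    for query in tokens:
        # Similarity of this token to every token, including itself.
        scores = [sum(q * k for q, k in zip(query, key)) / scale for key in tokens]
        weights = softmax(scores)
        # Weighted average of all tokens, one feature dimension at a time.
        output.append(
            [sum(w * tok[j] for w, tok in zip(weights, tokens)) for j in range(dim)]
        )
    return output


def downsample(tokens, stride=2):
    """Average each non-overlapping group of `stride` tokens (assumed pooling)."""
    dim = len(tokens[0])
    pooled = []
    for start in range(0, len(tokens) - stride + 1, stride):
        group = tokens[start:start + stride]
        pooled.append([sum(tok[j] for tok in group) / stride for j in range(dim)])
    return pooled
```

Calling `downsample(self_attention(features))` on features taken from a pre-trained backbone would halve the sequence length while letting each position attend to every other one, which is the general shape of the module the abstract describes.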
