Yosef S. Razin

Converging Measures and an Emergent Model: A Meta-Analysis of Human-Automation Trust Questionnaires

Mar 24, 2023

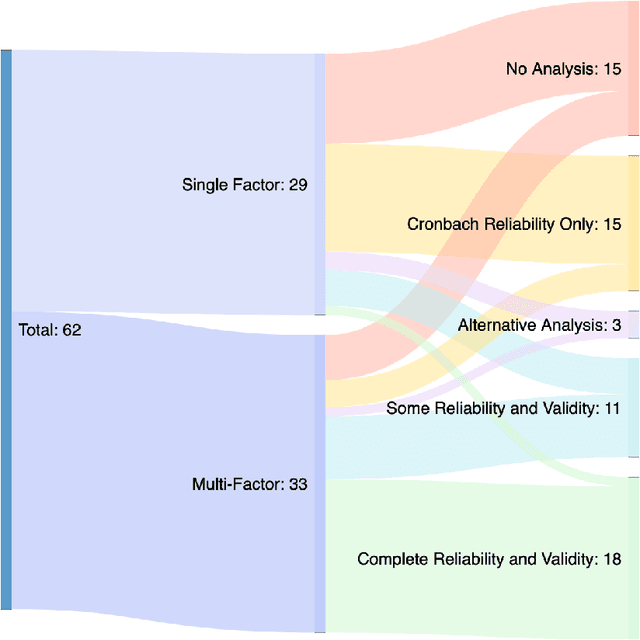

Abstract: A significant challenge to measuring human-automation trust is the proliferation of constructs, models, and questionnaires, whose validation varies widely. Nonetheless, there is broad agreement that trust is a crucial element of technology acceptance, continued usage, fluency, and teamwork. Herein, we synthesize a consensus model of trust in human-automation interaction by performing a meta-analysis of validated and reliable trust survey instruments. To accomplish this, we identify the most frequently cited and best-validated human-automation and human-robot trust questionnaires, as well as the most well-established factors that form the dimensions and antecedents of such trust. To reduce both confusion and construct proliferation, we provide a detailed mapping of terminology between questionnaires. Furthermore, we perform a meta-analysis of the regression models that emerged from experiments that used multi-factorial survey instruments. Based on this meta-analysis, we demonstrate a convergent, experimentally validated model of human-automation trust. This convergent model establishes an integrated framework for future research, identifying the current boundaries of trust measurement and where further investigation is necessary. We close by discussing how to choose and design an appropriate trust survey instrument. By comparing, mapping, and analyzing well-constructed trust survey instruments, we identify a consensus structure of trust in human-automation interaction. Doing so reveals a more complete and widely applicable basis for measuring trust, one that integrates the academic idea of trust with the colloquial, common-sense one. Given the increasingly recognized importance of trust, especially in human-automation interaction, this work leaves us better positioned to understand and measure it.
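The abstract does not specify how the regression models were pooled. As a rough illustration only, one common way to combine a coefficient reported by several studies is a fixed-effect, inverse-variance weighted average; the sketch below assumes that approach, and the function name `pool_fixed_effect` and all input numbers are hypothetical rather than taken from the paper.

```python
import math


def pool_fixed_effect(estimates, std_errors):
    """Pool per-study coefficients using inverse-variance weights.

    Assumes a fixed-effect model (one true effect shared by all studies);
    this is an illustrative choice, not necessarily the paper's method.
    """
    if not estimates or len(estimates) != len(std_errors):
        raise ValueError("estimates and std_errors must be non-empty and equal length")
    # Each study is weighted by 1 / SE^2, so more precise studies count more.
    weights = [1.0 / se ** 2 for se in std_errors]
    total_weight = sum(weights)
    pooled = sum(w * b for w, b in zip(weights, estimates)) / total_weight
    # Standard error of the pooled estimate under the fixed-effect model.
    pooled_se = math.sqrt(1.0 / total_weight)
    return pooled, pooled_se


# Hypothetical inputs: standardized coefficients for one trust factor
# (e.g., perceived reliability) reported by three different studies.
betas = [0.42, 0.35, 0.50]
ses = [0.10, 0.08, 0.12]
pooled, se = pool_fixed_effect(betas, ses)
print(f"pooled beta = {pooled:.3f}, SE = {se:.3f}")
```

If between-study heterogeneity is substantial, a random-effects model (for example, DerSimonian-Laird) would generally be the more appropriate choice.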

Committing to Interdependence: Implications from Game Theory for Human-Robot Trust

Nov 12, 2021

Abstract: Human-robot interaction and game theory have developed distinct theories of trust for over three decades in relative isolation from one another. Human-robot interaction has focused on the underlying dimensions, layers, correlates, and antecedents of trust models, while game theory has concentrated on the psychology and strategies behind singular trust decisions. Both fields have grappled with understanding over-trust and trust calibration, as well as with how to measure trust expectations, risk, and vulnerability. This paper presents initial steps toward closing the gap between these fields. Using insights and experimental findings from interdependence theory and social psychology, this work starts by analyzing a large game theory competition data set to demonstrate that the strongest predictors for a wide variety of human-human trust interactions are the interdependence-derived variables for commitment and trust that we have developed. It then presents a second study with human-subject results for more realistic trust scenarios, involving both human-human and human-machine trust. In both the competition data and our experimental data, we demonstrate that the interdependence metrics capture social "overtrust" better than either the rational or the normative psychological reasoning proposed by game theory. This work further explores how interdependence theory, with its focus on commitment, coercion, and cooperation, addresses many of the proposed underlying constructs and antecedents within human-robot trust, shedding new light on key similarities and differences that arise when robots replace humans in trust interactions.
