Yongqiang Bai

Middle Fusion and Multi-Stage, Multi-Form Prompts for Robust RGB-T Tracking

Mar 27, 2024

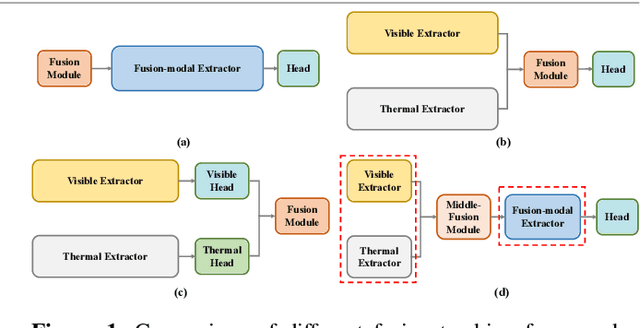

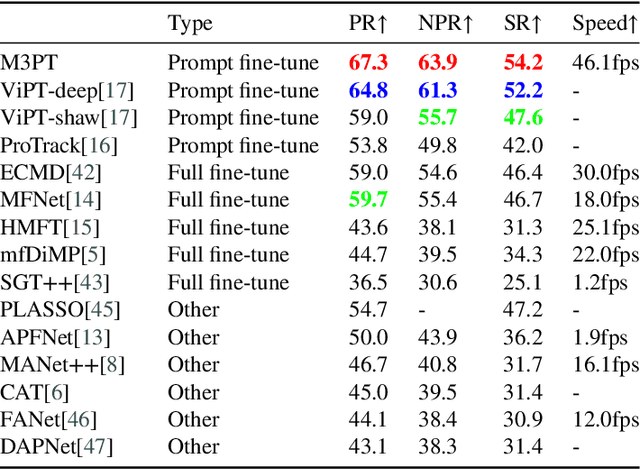

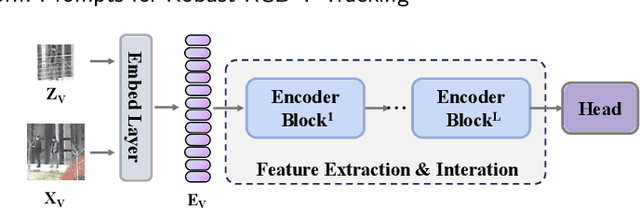

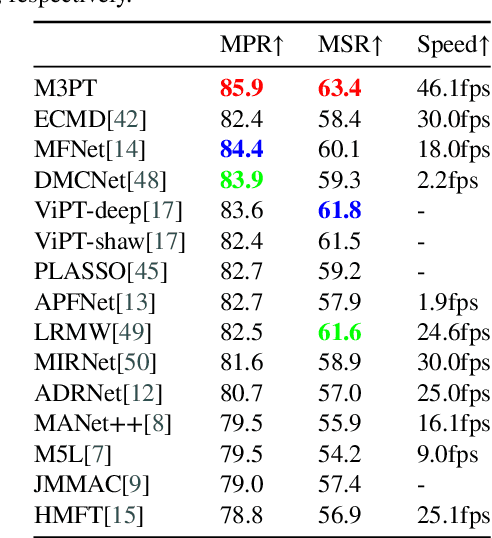

Abstract: RGB-T tracking, a vital downstream task of object tracking, has made remarkable progress in recent years. Yet it remains hindered by two major challenges: 1) the trade-off between performance and efficiency; 2) the scarcity of training data. To address the latter challenge, some recent methods employ prompts to fine-tune pre-trained RGB tracking models and leverage upstream knowledge in a parameter-efficient manner. However, these methods inadequately explore modality-independent patterns and disregard the dynamic reliability of different modalities in open scenarios. We propose M3PT, a novel RGB-T prompt tracking method that leverages middle fusion and multi-modal, multi-stage visual prompts to overcome these challenges. We pioneer the use of the middle fusion framework for RGB-T tracking, which achieves a balance between performance and efficiency. Furthermore, we incorporate the pre-trained RGB tracking model into the framework and utilize multiple flexible prompt strategies to adapt the pre-trained model to the comprehensive exploration of uni-modal patterns and the improved modeling of fusion-modal features, harnessing the potential of prompt learning in RGB-T tracking. Our method outperforms state-of-the-art methods on four challenging benchmarks while attaining an inference speed of 46.1 fps.
