Yitian Qian

A majorized PAM method with subspace correction for low-rank composite factorization model

Jun 07, 2024

Abstract: This paper concerns a class of low-rank composite factorization models arising from matrix completion. For this nonconvex and nonsmooth optimization problem, we propose a proximal alternating minimization algorithm (PAMA) with subspace correction, in which a subspace correction step is imposed on every proximal subproblem to guarantee that the corrected proximal subproblem has a closed-form solution. For this subspace correction PAMA, we prove the subsequence convergence of the iterate sequence, and establish the convergence of the whole iterate sequence and of the column subspace sequences of factor pairs under the KL property of the objective function and a restrictive condition that holds automatically for the column $\ell_{2,0}$-norm function. Numerical comparison with the proximal alternating linearized minimization method on one-bit matrix completion problems indicates that PAMA attains lower relative error in less time.
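
For orientation, a generic proximal alternating minimization step can be written as follows. The notation is assumed here and is not taken from the paper: $\Phi(U,V)$ stands for the objective in the factor pair $(U,V)$, and $\gamma_1,\gamma_2>0$ are proximal parameters. The subspace correction step described in the abstract is not reproduced.

$$
U^{k+1} \in \arg\min_{U}\ \Phi(U, V^{k}) + \frac{\gamma_1}{2}\,\|U - U^{k}\|_F^2,
\qquad
V^{k+1} \in \arg\min_{V}\ \Phi(U^{k+1}, V) + \frac{\gamma_2}{2}\,\|V - V^{k}\|_F^2 .
$$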

Column $\ell_{2,0}$-norm regularized factorization model of low-rank matrix recovery and its computation

Aug 24, 2020

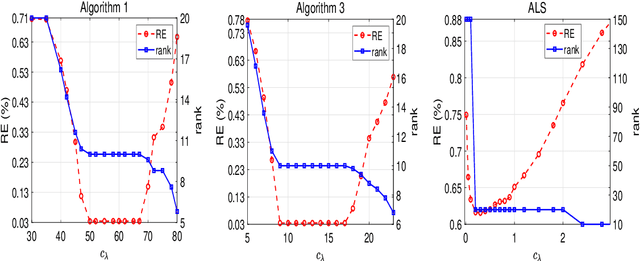

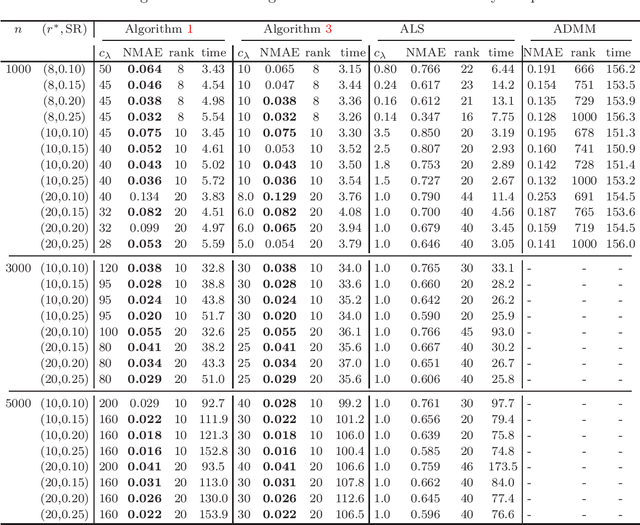

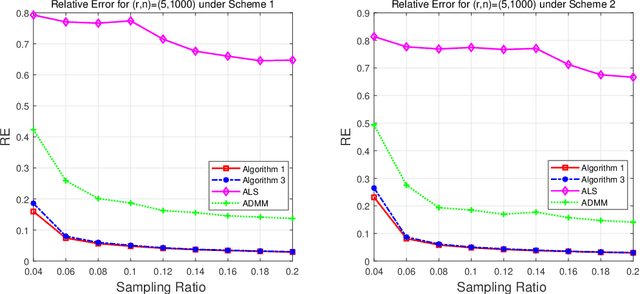

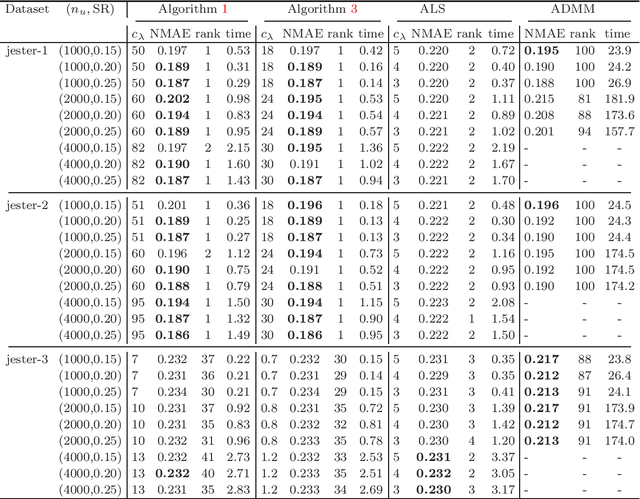

Abstract: This paper is concerned with the column $\ell_{2,0}$-regularized factorization model of low-rank matrix recovery problems and its computation. The column $\ell_{2,0}$-norm of factor matrices is introduced to promote column sparsity of factors and lower-rank solutions. For this nonconvex, nonsmooth, and non-Lipschitz problem, we develop an alternating majorization-minimization (AMM) method with extrapolation, and a hybrid AMM in which a majorized alternating proximal method is first applied to seek an initial factor pair with fewer nonzero columns and then the AMM with extrapolation is applied to the minimization of the smooth nonconvex loss. We provide the global convergence analysis for the proposed AMM methods and apply them to the matrix completion problem with non-uniform sampling schemes. Numerical experiments are conducted with synthetic and real data examples, and comparison results with the nuclear-norm regularized factorization model and the max-norm regularized convex model demonstrate that the column $\ell_{2,0}$-regularized factorization model yields solutions of lower error and rank in less time.
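
To make the column $\ell_{2,0}$-norm concrete, here is a minimal sketch in Python. It counts the nonzero columns of a matrix and evaluates the proximal map of $\lambda\|\cdot\|_{2,0}$, which reduces to hard-thresholding whole columns. The function names `col_l20_norm` and `prox_col_l20` are hypothetical and chosen for illustration. This is not the paper's AMM method, only the elementary building block that explains why the regularizer promotes column sparsity.

```python
import numpy as np


def col_l20_norm(X, tol=0.0):
    """Column l_{2,0}-norm: number of columns whose Euclidean norm exceeds tol."""
    return int(np.sum(np.linalg.norm(X, axis=0) > tol))


def prox_col_l20(Z, lam):
    """Return one minimizer of 0.5*||X - Z||_F^2 + lam*||X||_{2,0}.

    The problem separates over columns: keeping column z_j costs lam,
    dropping it costs 0.5*||z_j||^2, so a column is kept exactly when
    ||z_j|| > sqrt(2*lam) (ties are resolved by dropping the column).
    """
    col_norms = np.linalg.norm(Z, axis=0)
    keep = col_norms > np.sqrt(2.0 * lam)
    return Z * keep  # the boolean mask broadcasts across rows


# Illustrative data (assumed, not from the paper).
Z = np.array([[3.0, 0.1, 0.0],
              [4.0, 0.1, 0.0]])
X = prox_col_l20(Z, lam=0.5)  # threshold sqrt(2*0.5) = 1
```

With this data, only the first column has norm above the threshold, so the small second column is zeroed out while the first is left unchanged. A zero column in a factor removes the corresponding rank-one term from the product of the factors, which is how column sparsity translates into lower rank.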
