Yisu Zhang

FastMESH: Fast Surface Reconstruction by Hexagonal Mesh-based Neural Rendering

May 29, 2023

Abstract: Despite the promising results of multi-view reconstruction, recent neural rendering-based methods, such as implicit surface rendering (IDR) and volume rendering (NeuS), not only incur a heavy computational burden in training but also have difficulty disentangling geometry and appearance. Although it trains faster than implicit representations and hash coding, the explicit voxel-based method yields inferior results in surface recovery. To address these challenges, we propose an effective mesh-based neural rendering approach, named FastMESH, which samples only at the intersection of ray and mesh. A coarse-to-fine scheme is introduced to efficiently extract the initial mesh by space carving. More importantly, we suggest a hexagonal mesh model to preserve surface regularity by constraining the second-order derivatives of vertices, where only a low level of positional encoding is engaged for neural rendering. The experiments demonstrate that our approach achieves state-of-the-art results on both reconstruction and novel view synthesis. In addition, we obtain a 10-fold acceleration in training compared to the implicit representation-based methods.

Multi-View Stereo Representation Revisit: Region-Aware MVSNet

Apr 27, 2023

Abstract: Deep learning-based multi-view stereo has emerged as a powerful paradigm for reconstructing complete, geometrically detailed objects from multiple views. Most existing approaches only estimate the pixel-wise depth value by minimizing the gap between the predicted point and the intersection of ray and surface, which usually ignores the surface topology. Yet surface topology is essential for textureless regions and surface boundaries, which cannot be properly reconstructed otherwise. To address this issue, we suggest taking advantage of point-to-surface distance so that the model is able to perceive a wider range of surfaces. To this end, we predict the distance volume from the cost volume to estimate the signed distance of points around the surface. Our proposed RA-MVSNet is patch-aware, since the perception range is enhanced by associating hypothetical planes with a patch of surface. Therefore, it can improve the completeness of textureless regions and reduce the outliers at the boundary. Moreover, mesh topologies with fine details can be generated by the introduced distance volume. Compared to conventional deep learning-based multi-view stereo methods, our proposed RA-MVSNet approach obtains more complete reconstruction results by taking advantage of signed distance supervision. The experiments on both the DTU and Tanks & Temples datasets demonstrate that our proposed approach achieves state-of-the-art results.

Efficient Textured Mesh Recovery from Multiple Views with Differentiable Rendering

May 25, 2022

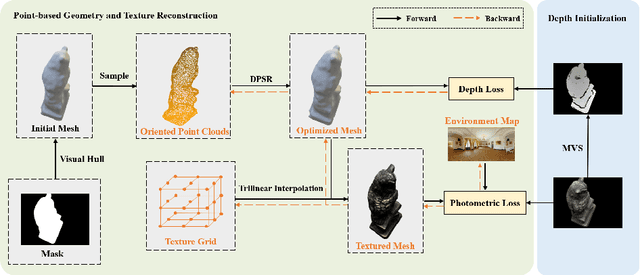

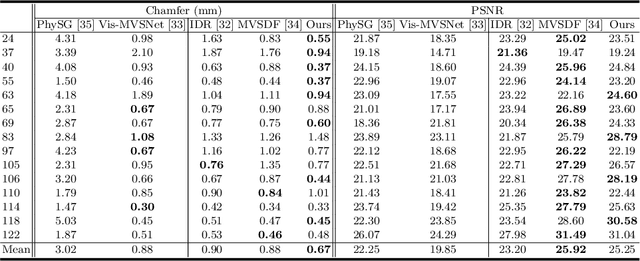

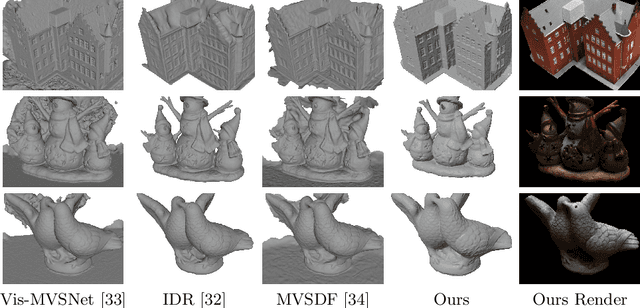

Abstract: Despite the promising results on shape and color recovery using self-supervision, multi-layer perceptron-based methods usually take hours to train the deep neural network due to the implicit surface representation. Moreover, it is quite computationally intensive to render a single image, since a forward network inference is required for each pixel. To tackle these challenges, in this paper, we propose an efficient coarse-to-fine approach to recover the textured mesh from multi-view images. Specifically, we take advantage of a differentiable Poisson Solver to represent the shape, which is able to produce topology-agnostic and watertight surfaces. To account for the depth information, we optimize the shape geometry by minimizing the difference between the rendered mesh and the depth predicted by the learning-based multi-view stereo algorithm. In contrast to the implicit neural representation of shape and color, we introduce a physically based inverse rendering scheme to jointly estimate the lighting and reflectance of the objects, which is able to render high-resolution images in real time. Additionally, we fine-tune the extracted mesh by inverse rendering to obtain a mesh with fine details and high-fidelity images. We have conducted extensive experiments on several multi-view stereo datasets, and the promising results demonstrate the efficacy of our proposed approach. We will make our full implementation publicly available.
