Yiming Fei

Real-Time Progressive Learning: Mutually Reinforcing Learning and Control with Neural-Network-Based Selective Memory

Aug 08, 2023

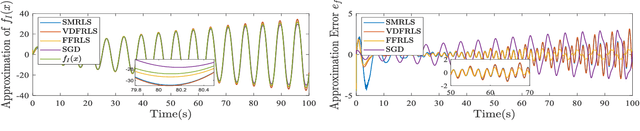

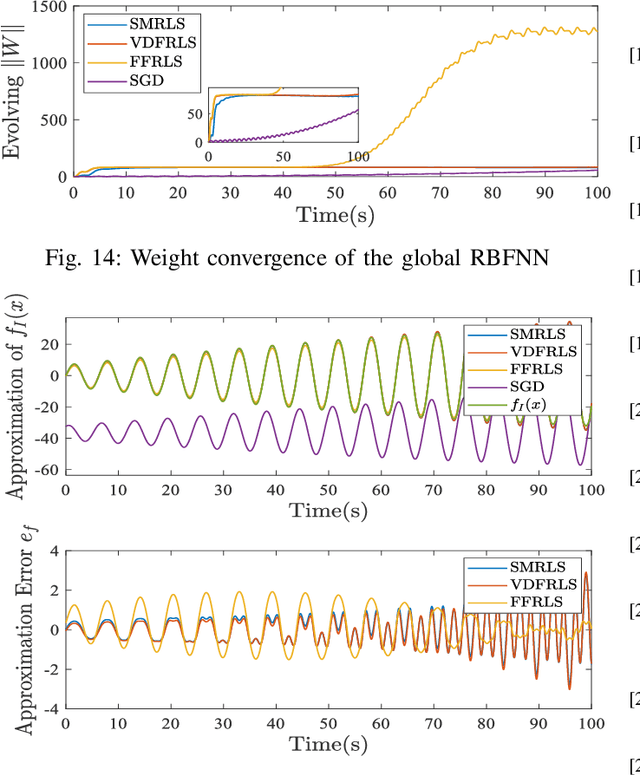

Abstract: Memory, as the basis of learning, determines how knowledge is stored, updated, and forgotten, and thereby the efficiency of learning. Built around a memory mechanism, a radial basis function neural network (RBFNN) based learning control scheme named real-time progressive learning (RTPL) is proposed to learn the unknown dynamics of a system with guaranteed stability and closed-loop performance. Instead of the stochastic gradient descent (SGD) update law used in adaptive neural control (ANC), RTPL adopts the selective memory recursive least squares (SMRLS) algorithm to update the weights of the RBFNN. Through SMRLS, the approximation capabilities of the RBFNN are uniformly distributed over the feature space, and thus the passive knowledge forgetting phenomenon of the SGD method is suppressed. Consequently, RTPL achieves the following merits over classical ANC: 1) guaranteed learning capability under low-level persistent excitation (PE), 2) improved learning performance (learning speed, accuracy, and generalization capability), and 3) a low gain requirement, which ensures the robustness of RTPL in practical applications. Moreover, RTPL-based learning and control gradually reinforce each other during task execution, making the scheme appropriate for long-term learning control tasks. As an example, RTPL is used to address the tracking control problem of a class of nonlinear systems, with the RBFNN acting as an adaptive feedforward controller. The corresponding theoretical analysis and simulation studies demonstrate the effectiveness of RTPL.
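For readers unfamiliar with recursive least squares as a weight update for an RBFNN, the following is a minimal sketch of the ordinary RLS step (no forgetting factor) on a scalar input. It is not the SMRLS algorithm or the RTPL controller from the paper; the function names `rbf_features` and `rls_step`, the centers, the width, the initial matrix `P`, and the target `sin(3x)` are all assumptions chosen for illustration.

```python
import math

def rbf_features(x, centers, width):
    """Gaussian RBF feature vector for a scalar input x (hypothetical helper)."""
    return [math.exp(-((x - c) ** 2) / (2.0 * width ** 2)) for c in centers]

def rls_step(w, P, phi, y):
    """One ordinary RLS update (illustrative, not the paper's SMRLS).

    w   : current weight vector
    P   : symmetric covariance-like matrix (list of lists)
    phi : feature vector of the new sample
    y   : target value of the new sample
    """
    n = len(w)
    P_phi = [sum(P[i][j] * phi[j] for j in range(n)) for i in range(n)]
    denom = 1.0 + sum(phi[i] * P_phi[i] for i in range(n))
    gain = [v / denom for v in P_phi]
    err = y - sum(w[i] * phi[i] for i in range(n))
    w_new = [w[i] + gain[i] * err for i in range(n)]
    # P is symmetric, so (phi^T P)[j] equals P_phi[j].
    P_new = [[P[i][j] - gain[i] * P_phi[j] for j in range(n)] for i in range(n)]
    return w_new, P_new

# Assumed setup: 9 evenly spaced centers on [-1, 1], target sin(3x).
centers = [-1.0 + 0.25 * i for i in range(9)]
width = 0.3
n = len(centers)
w = [0.0] * n
P = [[1e3 if i == j else 0.0 for j in range(n)] for i in range(n)]

# Stream 200 samples through the recursive update, one at a time.
for k in range(200):
    x = -1.0 + 2.0 * k / 199
    w, P = rls_step(w, P, rbf_features(x, centers, width), math.sin(3.0 * x))

def predict(x):
    return sum(wi * pi for wi, pi in zip(w, rbf_features(x, centers, width)))

# Largest absolute approximation error on a test grid over [-1, 1].
max_err = max(abs(predict(-1.0 + 2.0 * k / 50) - math.sin(3.0 * (-1.0 + 2.0 * k / 50)))
              for k in range(51))
```

Unlike an SGD step, this update weights all past samples equally, so it does not forget old data, but it also has no mechanism for making room for new knowledge. The abstract presents SMRLS as the algorithm RTPL uses in place of SGD.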

Selective Memory Recursive Least Squares: Uniformly Allocated Approximation Capabilities of RBF Neural Networks in Real-Time Learning

Nov 15, 2022

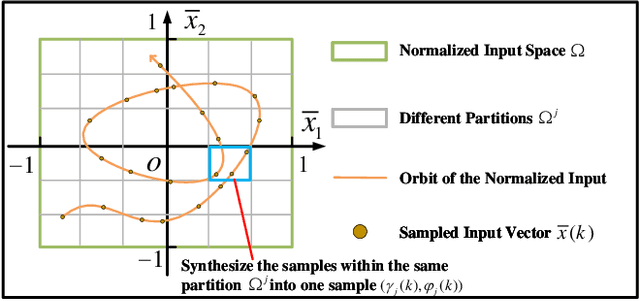

Abstract: When performing real-time learning tasks, the radial basis function neural network (RBFNN) is expected to make full use of the training samples so that its learning accuracy and generalization capability are guaranteed. Since the approximation capability of the RBFNN is finite, training methods with forgetting mechanisms, such as forgetting factor recursive least squares (FFRLS) and stochastic gradient descent (SGD), are widely used to maintain the RBFNN's ability to learn new knowledge. However, with these forgetting mechanisms, some useful knowledge is lost simply because it was learned a long time ago, which we refer to as the passive knowledge forgetting phenomenon. To address this problem, this paper proposes a real-time training method named selective memory recursive least squares (SMRLS), in which the feature space of the RBFNN is evenly discretized into a finite number of partitions and a synthesized objective function is developed to replace the original objective function of the ordinary recursive least squares (RLS) method. SMRLS features a memorization mechanism that synthesizes the samples within each partition, in real time, into representative samples uniformly distributed over the feature space. It thus overcomes the passive knowledge forgetting phenomenon and improves the generalization capability of the learned knowledge. Compared with the SGD and FFRLS methods, SMRLS achieves improved learning performance (learning speed, accuracy, and generalization capability), which is demonstrated by the corresponding simulation results.
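The partition-and-synthesize idea described in the abstract can be illustrated with a small sketch. It covers only the memory side: a one-dimensional feature space is split into equal partitions, and each partition keeps a single representative sample. The class name `PartitionMemory` and the use of a running mean as the synthesis rule are assumptions made for illustration. The abstract does not specify the rule, and the paper's actual mechanism and its coupling to the RLS objective may differ.

```python
class PartitionMemory:
    """Keeps one representative sample per evenly sized partition (hypothetical sketch)."""

    def __init__(self, low, high, n_partitions):
        self.low = low
        self.high = high
        self.n = n_partitions
        self.store = {}  # partition index -> (count, mean_x, mean_y)

    def index(self, x):
        """Map x to its partition index, clamped to the valid range."""
        frac = (x - self.low) / (self.high - self.low)
        return min(self.n - 1, max(0, int(frac * self.n)))

    def update(self, x, y):
        """Fold a new sample into its partition's representative.

        Assumption: the representative is the running mean of the samples
        seen in that partition, which is not necessarily the paper's rule.
        """
        i = self.index(x)
        count, mean_x, mean_y = self.store.get(i, (0, 0.0, 0.0))
        count += 1
        self.store[i] = (count,
                         mean_x + (x - mean_x) / count,
                         mean_y + (y - mean_y) / count)

    def representatives(self):
        """Representative (x, y) pairs, ordered by partition index."""
        return [(mx, my) for _, (_, mx, my) in sorted(self.store.items())]

# Stream 1000 samples sweeping left to right across [0, 1).
memory = PartitionMemory(0.0, 1.0, 10)
for k in range(1000):
    x = k / 1000
    memory.update(x, x ** 2)

reps = memory.representatives()
```

After the sweep, `reps` holds one entry per partition, including the partitions visited earliest. Memory stays bounded by the number of partitions rather than growing with the stream, and a region is not discarded merely because its samples are old.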
