Yigit Yıldırim

Learning Early Social Maneuvers for Enhanced Social Navigation

Mar 23, 2024

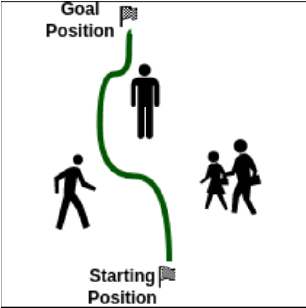

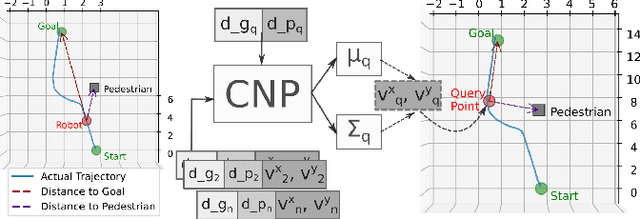

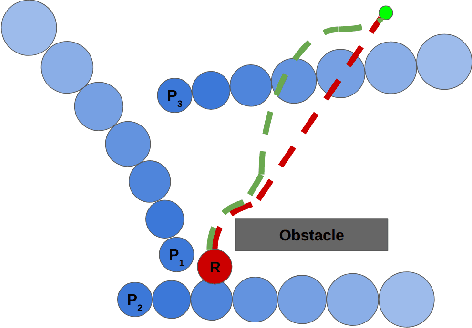

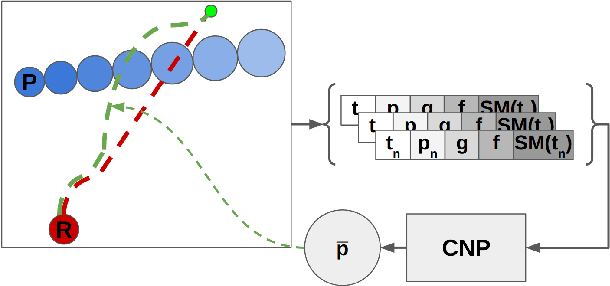

Abstract: Socially compliant navigation is an integral part of safety features in Human-Robot Interaction. Traditional approaches to mobile navigation prioritize physical aspects, such as efficiency, but social behaviors are gaining traction as robots become more common in daily life. Recent techniques to improve the social compliance of navigation often rely on predefined features or reward functions, which introduce assumptions about human social behavior. To address this limitation, we propose a novel Learning from Demonstration (LfD) framework for social navigation that exclusively utilizes raw sensory data. Additionally, the proposed system contains mechanisms to consider the future paths of the surrounding pedestrians, acknowledging the temporal aspect of the problem. The final product is expected to reduce the anxiety of people sharing their environment with a mobile robot, helping them trust that the robot is aware of their presence and will not harm them. As the framework is currently being developed, we outline its components, present experimental results, and discuss future work towards realizing this framework.
