Yasir Hussain

Robust Mobile Robot Path Planning via LLM-Based Dynamic Waypoint Generation

Jan 27, 2025

Abstract: Mobile robot path planning in complex environments remains a significant challenge, especially in achieving efficient, safe, and robust paths. Traditional path planning techniques such as DRL models are typically trained for a given configuration of starting and target positions, and they perform well only when these conditions are satisfied. In this paper, we propose a novel path planning framework that embeds Large Language Models (LLMs) to empower mobile robots with the capability of dynamically interpreting natural language commands and autonomously generating efficient, collision-free navigation paths. The proposed framework uses LLMs to translate high-level user inputs into actionable waypoints while dynamically adjusting paths in response to obstacles. We experimentally evaluated our LLM-based approach across three environments of progressive complexity, showing the robustness of our approach with the llama3.1 model, which outperformed other LLMs in path planning time, waypoint generation success rate, and collision avoidance. This underlines the promising contribution of LLMs to enhancing the capability of mobile robots, especially when their operation involves complex decisions in large and complex environments. Our framework provides safer, more reliable navigation and opens a new direction for future research. The source code of this work is publicly available on GitHub.
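The waypoint step described in the abstract can be illustrated with a minimal sketch. It is not the paper's implementation: the reply format (a JSON list of `[x, y]` pairs embedded in free text), the circular obstacle model, and the function names `parse_waypoints`, `segment_hits_circle`, and `first_collision` are all assumptions made for illustration. The idea is to parse waypoints out of an LLM reply and find the first path segment that would collide, which is the point where a planner could ask the model for a revised waypoint.

```python
import json
import math


def parse_waypoints(llm_reply: str):
    """Extract a JSON list of [x, y] pairs from a (hypothetical) LLM reply."""
    start, end = llm_reply.find("["), llm_reply.rfind("]")
    if start == -1 or end <= start:
        raise ValueError("no waypoint list found in reply")
    points = json.loads(llm_reply[start:end + 1])
    return [(float(x), float(y)) for x, y in points]


def segment_hits_circle(p, q, center, radius):
    """Return True if the straight segment p->q passes within radius of center."""
    px, py = p
    qx, qy = q
    cx, cy = center
    dx, dy = qx - px, qy - py
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0.0:
        t = 0.0  # degenerate segment: p and q coincide
    else:
        # Project the circle center onto the segment and clamp to [0, 1].
        t = max(0.0, min(1.0, ((cx - px) * dx + (cy - py) * dy) / seg_len_sq))
    nearest = (px + t * dx, py + t * dy)
    return math.dist(nearest, center) < radius


def first_collision(start, waypoints, obstacles):
    """Index of the first waypoint whose incoming segment collides, else None."""
    prev = start
    for i, wp in enumerate(waypoints):
        if any(segment_hits_circle(prev, wp, c, r) for c, r in obstacles):
            return i
        prev = wp
    return None


# Assumed reply text and obstacle layout, for illustration only.
reply = "Waypoints: [[1, 0], [2, 2], [4, 2]]"
waypoints = parse_waypoints(reply)
obstacles = [((3.0, 2.0), 0.5)]  # (center, radius)
blocked_at = first_collision((0.0, 0.0), waypoints, obstacles)
```

In this example the segment from `(2, 2)` to `(4, 2)` passes through the obstacle, so `blocked_at` identifies the third waypoint as the one to regenerate.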

DeepVS: An Efficient and Generic Approach for Source Code Modeling Usage

Oct 15, 2019

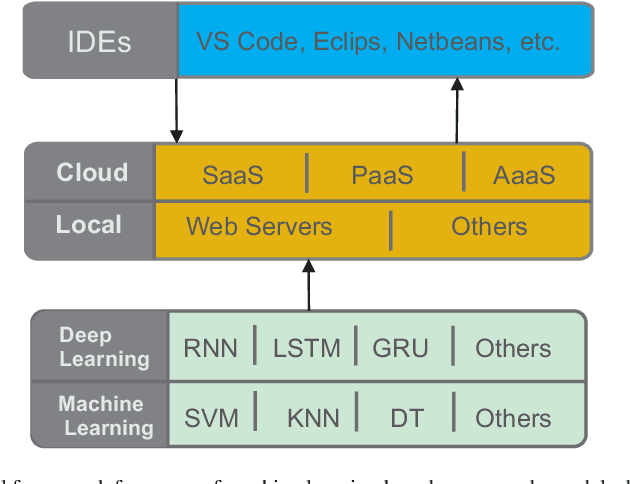

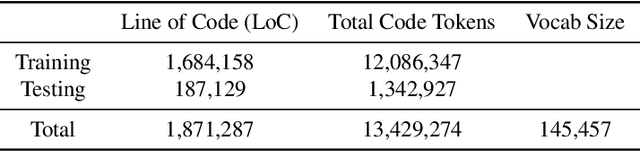

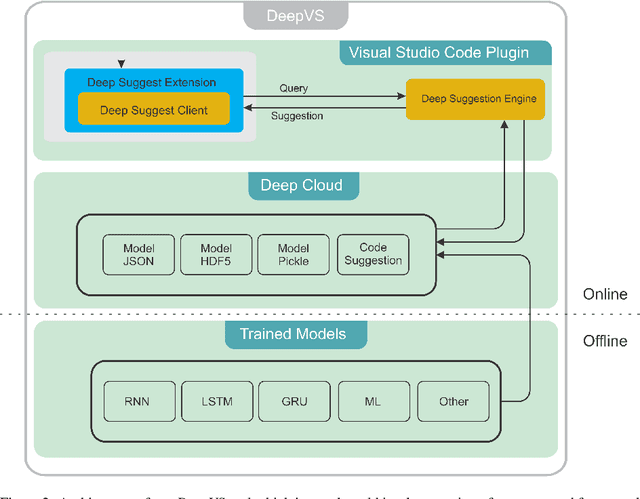

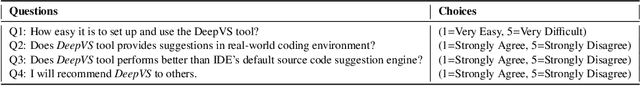

Abstract: Recently, deep learning-based approaches have shown great potential in modeling source code for various software engineering tasks. However, these techniques lack the generalization and robustness needed to use such models in a real-world software development environment. In this work, we propose a novel general framework that combines cloud computing and deep learning in an integrated development environment (IDE) to assist software developers in various source code modeling tasks. Additionally, we present DeepVS, an end-to-end deep learning-based source code suggestion tool that provides a real-world implementation of our proposed framework. DeepVS provides source code suggestions instantly in an IDE by using a pre-trained source code model. Moreover, DeepVS is also capable of suggesting zero-day (unseen) code tokens. The tool illustrates the effectiveness of the proposed framework and shows how it can help bridge the gap between developers and researchers.

Deep Transfer Learning for Source Code Modeling

Oct 12, 2019

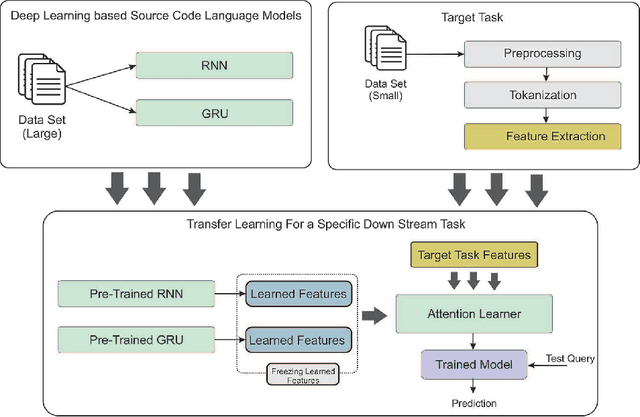

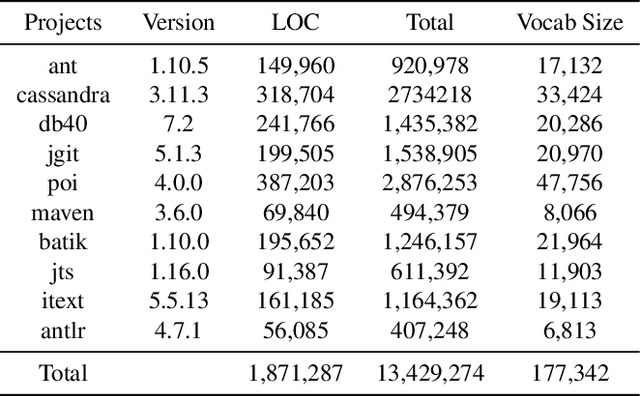

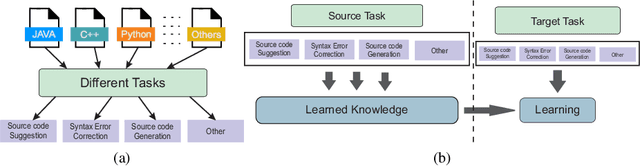

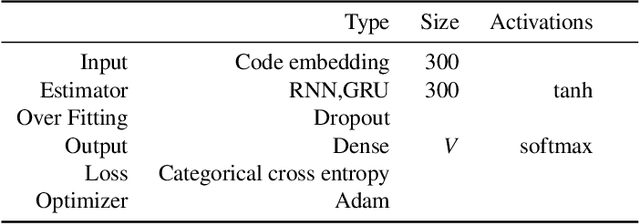

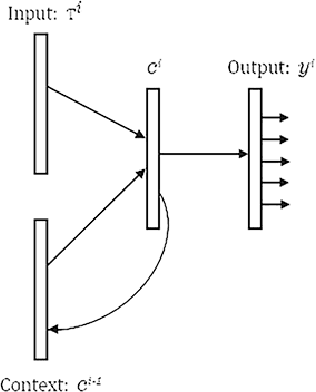

Abstract: In recent years, deep learning models have shown great potential in source code modeling and analysis. Generally, deep learning-based approaches are problem-specific and data-hungry. A challenging issue with these approaches is that they require training from scratch for each different but related problem. In this work, we propose a transfer learning-based approach that significantly improves the performance of deep learning-based source code models. In contrast to traditional learning paradigms, transfer learning can transfer the knowledge learned in solving one problem to another related problem. First, we present two recurrent neural network-based models, RNN and GRU, for the purpose of transfer learning in the domain of source code modeling. Next, via transfer learning, these pre-trained (RNN and GRU) models are used as feature extractors. Then, the extracted features are combined in an attention learner for different downstream tasks. The attention learner leverages the learned knowledge of the pre-trained models and fine-tunes them for a specific downstream task. We evaluate the performance of the proposed approach through extensive experiments on the source code suggestion task. The results indicate that the proposed approach outperforms the state-of-the-art models in terms of accuracy, precision, recall, and F-measure without training the models from scratch.
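One way to picture how features from several pre-trained extractors can be combined by attention is the sketch below. It is a generic attention-pooling example, not the paper's attention learner: the feature vectors stand in for outputs of frozen pre-trained models, and the `query` vector, `softmax`, and `attention_pool` names are assumptions introduced here. In a real setup the query would be the trainable part that is fine-tuned for the downstream task.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(features, query):
    """Weight each feature vector by softmax(query . feature) and sum them.

    features: list of equal-length vectors, one per (frozen) extractor.
    query:    vector of the same length; the only trainable part in this sketch.
    """
    scores = [sum(q * f for q, f in zip(query, feat)) for feat in features]
    weights = softmax(scores)
    dim = len(features[0])
    pooled = [
        sum(w * feat[d] for w, feat in zip(weights, features))
        for d in range(dim)
    ]
    return pooled, weights


# Hypothetical features from two frozen extractors (e.g. an RNN and a GRU).
rnn_features = [1.0, 0.0]
gru_features = [0.0, 1.0]

# A zero query attends to both extractors equally.
pooled_equal, weights_equal = attention_pool([rnn_features, gru_features], [0.0, 0.0])

# A query aligned with the first extractor shifts almost all weight onto it.
pooled_first, weights_first = attention_pool([rnn_features, gru_features], [10.0, 0.0])
```

The extractors themselves stay fixed; only the attention parameters change, which is what lets a downstream task reuse the pre-trained knowledge without training from scratch.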

CodeGRU: Context-aware Deep Learning with Gated Recurrent Unit for Source Code Modeling

Mar 03, 2019

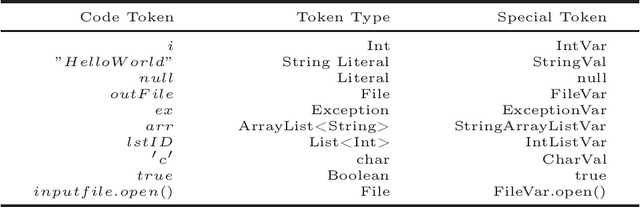

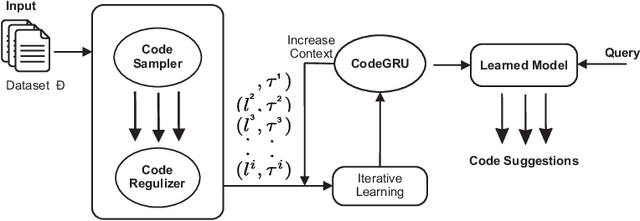

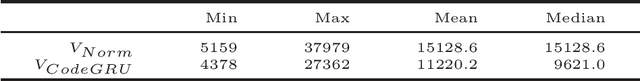

Abstract: Recently, many NLP-based deep learning models have been applied to model source code for source code suggestion and recommendation tasks. A major limitation of these approaches is that they treat source code as simple tokens of text and ignore its contextual, syntactic, and structural dependencies. In this work, we present CodeGRU, a Gated Recurrent Unit based source code language model that is capable of capturing contextual, syntactic, and structural dependencies for modeling source code. CodeGRU introduces several new components. The Code Sampler is first proposed for selecting noise-free code samples and transforming obfuscated code to its proper syntax, which helps to capture syntactic and structural dependencies. The Code Regularize is next introduced to encode source code, which helps capture the contextual dependencies of the source code. Finally, we propose a novel method that can learn variable-size context for modeling source code. We evaluated CodeGRU on a real-world dataset, and the results show that CodeGRU can effectively capture contextual, syntactic, and structural dependencies that previous works fail to capture. We also discuss and visualize two use cases of CodeGRU for source code modeling tasks: (1) source code suggestion and (2) source code generation.
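For readers unfamiliar with the Gated Recurrent Unit underlying such a language model, the standard GRU update is summarized below. This is the textbook formulation, not necessarily the exact variant used in CodeGRU; the symbols ($x_t$ for the embedded code token at step $t$, $h_t$ for the hidden state, $W$, $U$, $b$ for learned parameters) are notation assumed here. Some references swap the roles of $z_t$ and $1 - z_t$ in the last GRU equation.

```latex
\begin{aligned}
z_t &= \sigma\left(W_z x_t + U_z h_{t-1} + b_z\right) && \text{(update gate)} \\
r_t &= \sigma\left(W_r x_t + U_r h_{t-1} + b_r\right) && \text{(reset gate)} \\
\tilde{h}_t &= \tanh\left(W_h x_t + U_h \left(r_t \odot h_{t-1}\right) + b_h\right) && \text{(candidate state)} \\
h_t &= \left(1 - z_t\right) \odot h_{t-1} + z_t \odot \tilde{h}_t && \text{(new hidden state)} \\
P\left(x_{t+1} \mid x_1, \dots, x_t\right) &= \operatorname{softmax}\left(W_o h_t + b_o\right) && \text{(next-token distribution)}
\end{aligned}
```

The update gate $z_t$ controls how much of the previous context is carried forward, which is what allows a GRU-based model to retain dependencies across a span of code tokens.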
