Yan-Martin Tamm

Comparative Analysis of Pretrained Audio Representations in Music Recommender Systems

Sep 13, 2024

Abstract: Over the years, Music Information Retrieval (MIR) has proposed various models pretrained on large amounts of music data. Transfer learning showcases the proven effectiveness of pretrained backend models with a broad spectrum of downstream tasks, including auto-tagging and genre classification. However, MIR papers generally do not explore the efficiency of pretrained models for Music Recommender Systems (MRS). In addition, the Recommender Systems community tends to favour traditional end-to-end neural network learning over these models. Our research addresses this gap and evaluates the applicability of six pretrained backend models (MusicFM, Music2Vec, MERT, EncodecMAE, Jukebox, and MusiCNN) in the context of MRS. We assess their performance using three recommendation models: K-nearest neighbours (KNN), shallow neural network, and BERT4Rec. Our findings suggest that pretrained audio representations exhibit significant performance variability between traditional MIR tasks and MRS, indicating that valuable aspects of musical information captured by backend models may differ depending on the task. This study establishes a foundation for further exploration of pretrained audio representations to enhance music recommendation systems.
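To make the KNN setting concrete, the sketch below shows one simple way a nearest-neighbour recommender could consume pretrained audio embeddings. It is an illustration under stated assumptions, not the paper's implementation: the abstract does not describe how the KNN model is built, so the user profile (mean of listened-track embeddings), the cosine similarity measure, the function names, and the toy two-dimensional vectors are all hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def recommend(track_embeddings, history, k=2):
    """Rank unheard tracks by similarity to the mean embedding of the user's history.

    Assumption: the user profile is the average of listened-track embeddings.
    The source abstract does not specify this; it is one common baseline.
    """
    dim = len(next(iter(track_embeddings.values())))
    profile = [
        sum(track_embeddings[t][i] for t in history) / len(history)
        for i in range(dim)
    ]
    candidates = [t for t in track_embeddings if t not in history]
    candidates.sort(key=lambda t: cosine(profile, track_embeddings[t]), reverse=True)
    return candidates[:k]


# Toy stand-ins for embeddings that a pretrained audio model would produce.
track_embeddings = {
    "a": [1.0, 0.0],
    "b": [0.9, 0.1],
    "c": [0.0, 1.0],
    "d": [0.8, 0.3],
}

top = recommend(track_embeddings, history=["a"], k=2)
print(top)
```

In a real setup the vectors would come from one of the pretrained models named in the abstract and would have hundreds of dimensions; only the ranking logic is shown here.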

Quality Metrics in Recommender Systems: Do We Calculate Metrics Consistently?

Jun 26, 2022

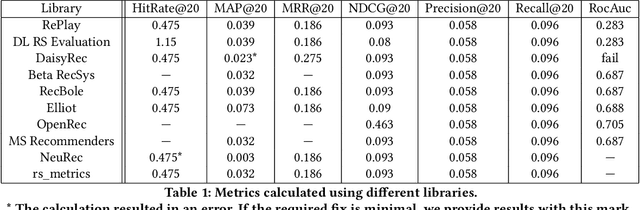

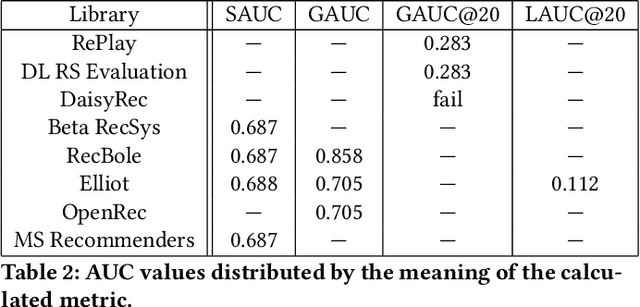

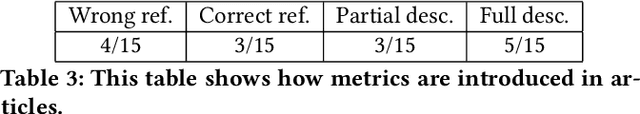

Abstract: Offline evaluation is a popular approach to determine the best algorithm in terms of the chosen quality metric. However, if the chosen metric calculates something unexpected, this miscommunication can lead to poor decisions and wrong conclusions. In this paper, we thoroughly investigate quality metrics used for recommender systems evaluation. We look at the practical aspect of implementations found in modern RecSys libraries and at the theoretical aspect of definitions in academic papers. We find that Precision is the only metric universally understood among papers and libraries, while other metrics may have different interpretations. Metrics implemented in different libraries sometimes have the same name but measure different things, which leads to different results given the same input. When defining metrics in an academic paper, authors sometimes omit explicit formulations or give references that do not contain explanations either. In 47% of cases, we cannot easily know how the metric is defined because the definition is not clear or absent. These findings highlight yet another difficulty in recommender system evaluation and call for a more detailed description of evaluation protocols.
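As an illustration of how one metric name can hide two different computations, the sketch below implements two commonly seen definitions of Recall@k that differ only in the denominator. The abstract does not say which metrics or libraries diverge in this way, so the choice of Recall@k, the function names, and the toy data are assumptions made for this example.

```python
def recall_at_k(recommended, relevant, k):
    """Hits in the top-k divided by the total number of relevant items."""
    hits = len(set(recommended[:k]) & set(relevant))
    return hits / len(relevant) if relevant else 0.0


def recall_at_k_capped(recommended, relevant, k):
    """Hits in the top-k divided by min(k, number of relevant items).

    With this denominator a perfect top-k list always scores 1.0,
    even when the user has more than k relevant items.
    """
    hits = len(set(recommended[:k]) & set(relevant))
    denominator = min(k, len(relevant))
    return hits / denominator if denominator else 0.0


# Same input for both variants: one hit ("a") in the top 2, four relevant items.
recommended = ["a", "b", "c", "d"]
relevant = {"a", "c", "e", "f"}
k = 2

plain = recall_at_k(recommended, relevant, k)          # 1 / 4
capped = recall_at_k_capped(recommended, relevant, k)  # 1 / 2
print(plain, capped)
```

Both functions could reasonably be labelled "Recall@2", yet they report 0.25 and 0.5 for identical input, which is why an evaluation protocol needs to state the formula explicitly.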
