Y. Yang

CH-Go: Online Go System Based on Chunk Data Storage

Mar 22, 2023

Abstract: The training and running of an online Go system require the support of effective data management systems to deal with vast data, such as the initial Go game records, the feature data set obtained by representation learning, the experience data set of self-play, the randomly sampled Monte Carlo tree, and so on. Previous work has rarely mentioned this problem, but the ability and efficiency of data management systems determine the accuracy and speed of the Go system. To tackle this issue, we propose an online Go game system based on the chunk data storage method (CH-Go), which processes the format of 160k Go game data released by Kiseido Go Server (KGS) and designs a Go encoder with 11 planes, a parallel processor, and a generator for better memory performance. Specifically, we store the data in chunks, take the chunk size of 1024 as a batch, and save the features and labels of each chunk as binary files. Then a small set of data is randomly sampled each time for the neural network training and is accessed batch by batch through the yield method. The training part of the prototype includes three modules: a supervised learning module, a reinforcement learning module, and an online module. Firstly, we apply Zobrist-guided hash coding to speed up the Go board construction. Then we train a supervised learning policy network on the 160k Go game data released by KGS to initialize the self-play that generates experience data. Finally, we conduct reinforcement learning based on the REINFORCE algorithm. Experiments show that the training accuracy of CH-Go in the sampled 150 games is 99.14%, and the accuracy in the test set is as high as 98.82%. Under the condition of limited local computing power and time, we have achieved a better level of intelligence. Given the current situation that classical systems such as GOLAXY are not free and open, CH-Go has realized and maintained complete Internet openness.
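The chunked storage and yield-based access described in the abstract can be illustrated with a minimal Python sketch. This is not the CH-Go implementation: the function names `save_chunks` and `batch_generator`, the file naming scheme, and the use of `pickle` as the binary format are all assumptions made for illustration. Only the chunk size of 1024 and the idea of sampling a subset of chunks and yielding them batch by batch come from the abstract.

```python
import pickle
import random
import tempfile
from pathlib import Path

CHUNK_SIZE = 1024  # chunk size stated in the abstract


def save_chunks(features, labels, out_dir, chunk_size=CHUNK_SIZE):
    """Split parallel feature/label lists into chunks and write each chunk
    as a pair of binary files. Returns the number of chunks written."""
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    n_chunks = 0
    for start in range(0, len(features), chunk_size):
        stop = start + chunk_size
        # Hypothetical file names; the real on-disk layout is not given.
        with open(out_dir / f"features_{n_chunks}.bin", "wb") as f:
            pickle.dump(features[start:stop], f)
        with open(out_dir / f"labels_{n_chunks}.bin", "wb") as f:
            pickle.dump(labels[start:stop], f)
        n_chunks += 1
    return n_chunks


def batch_generator(out_dir, n_chunks, n_sampled, seed=None):
    """Randomly pick n_sampled chunks and yield (features, labels) one chunk
    at a time, so only a single chunk is held in memory at once."""
    out_dir = Path(out_dir)
    rng = random.Random(seed)
    for idx in rng.sample(range(n_chunks), n_sampled):
        with open(out_dir / f"features_{idx}.bin", "rb") as f:
            feats = pickle.load(f)
        with open(out_dir / f"labels_{idx}.bin", "rb") as f:
            labs = pickle.load(f)
        yield feats, labs


if __name__ == "__main__":
    # Toy data standing in for encoded board positions and move labels.
    toy_features = list(range(2500))
    toy_labels = [x % 361 for x in toy_features]
    with tempfile.TemporaryDirectory() as tmp:
        total = save_chunks(toy_features, toy_labels, tmp)
        for feats, labs in batch_generator(tmp, total, n_sampled=2, seed=0):
            print(len(feats), len(labs))
```

Because the generator loads one chunk per `yield`, peak memory is bounded by the chunk size rather than by the size of the full data set, which is the memory benefit the abstract attributes to this design.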

AI-assisted Optimization of the ECCE Tracking System at the Electron Ion Collider

May 20, 2022

Abstract: The Electron-Ion Collider (EIC) is a cutting-edge accelerator facility that will study the nature of the "glue" that binds the building blocks of the visible matter in the universe. The proposed experiment will be realized at Brookhaven National Laboratory in approximately 10 years from now, with detector design and R&D currently ongoing. Notably, EIC is one of the first large-scale facilities to leverage Artificial Intelligence (AI) already starting from the design and R&D phases. The EIC Comprehensive Chromodynamics Experiment (ECCE) is a consortium that proposed a detector design based on a 1.5T solenoid. The EIC detector proposal review concluded that the ECCE design will serve as the reference design for an EIC detector. Herein we describe a comprehensive optimization of the ECCE tracker using AI. The work required a complex parametrization of the simulated detector system. Our approach dealt with an optimization problem in a multidimensional design space driven by multiple objectives that encode the detector performance, while satisfying several mechanical constraints. We describe our strategy and show results obtained for the ECCE tracking system. The AI-assisted design is agnostic to the simulation framework and can be extended to other sub-detectors or to a system of sub-detectors to further optimize the performance of the EIC detector.
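In an optimization driven by multiple objectives, candidate designs are typically compared by Pareto dominance rather than by a single score. The sketch below shows that comparison only. The abstract does not name the optimizer used for ECCE, so the functions `dominates` and `pareto_front`, the convention that every objective is minimized, and the toy objective values are assumptions for illustration.

```python
def dominates(a, b):
    """True if design a is no worse than b on every objective and strictly
    better on at least one (all objectives are assumed to be minimized)."""
    return (all(x <= y for x, y in zip(a, b))
            and any(x < y for x, y in zip(a, b)))


def pareto_front(points):
    """Return the designs that are not dominated by any other design."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


if __name__ == "__main__":
    # Each tuple is a hypothetical pair of objective values for one design.
    designs = [(1, 5), (2, 2), (3, 3), (5, 1), (4, 4)]
    print(pareto_front(designs))
```

Mechanical constraints of the kind mentioned in the abstract would be applied by discarding infeasible designs before this filter is run.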

GBLinks: GNN-Based Beam Selection and Link Activation for Ultra-dense D2D mmWave Networks

Jul 28, 2021

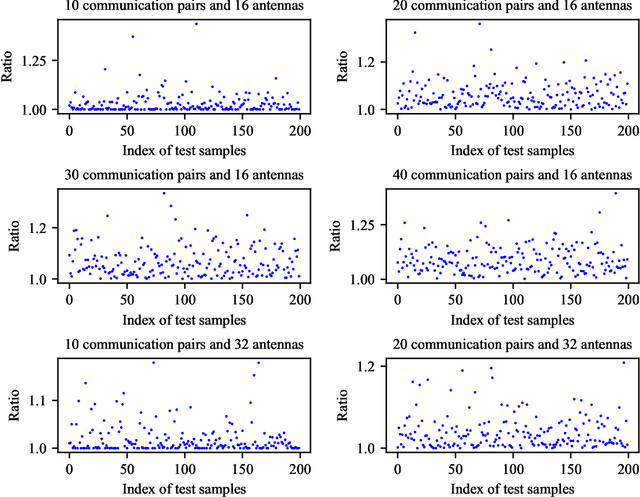

Abstract: In this paper, we consider the problem of joint beam selection and link activation across a set of communication pairs in ultra-dense device-to-device (D2D) mmWave communication networks, where interference between communication pairs is controlled by deactivating a subset of the pairs. The resulting optimization problem is formulated as an integer programming problem that is nonconvex and NP-hard. Consequently, the globally optimal solution, and even a locally optimal solution, cannot generally be obtained. To overcome this challenge, this paper designs a deep learning architecture based on a graph neural network to perform the joint beam selection and link activation, taking the network topology information into account. Meanwhile, we present an unsupervised Lagrangian dual learning framework to train the parameters of the GBLinks model. Numerical results show that the proposed GBLinks model converges to a stable point, in terms of the sum rate, as the number of iterations increases. Furthermore, the GBLinks model reaches a near-optimal solution when compared with the exhaustive search scheme in small-scale ultra-dense D2D mmWave communication networks, and it outperforms GreedyNoSched and the SCA-based method. The results also show that the GBLinks model generalizes to varying scales and densities of ultra-dense D2D mmWave communication networks.

A Robust Deep Unfolded Network for Sparse Signal Recovery from Noisy Binary Measurements

Oct 15, 2020

Abstract: We propose a novel deep neural network, coined DeepFPC-$\ell_2$, for solving the 1-bit compressed sensing problem. The network is designed by unfolding the iterations of the fixed-point continuation (FPC) algorithm with one-sided $\ell_2$-norm (FPC-$\ell_2$). The DeepFPC-$\ell_2$ method shows higher signal reconstruction accuracy and convergence speed than the traditional FPC-$\ell_2$ algorithm. Furthermore, we compare its robustness to noise with that of the previously proposed DeepFPC network (which stemmed from unfolding the FPC-$\ell_1$ algorithm) for different signal-to-noise ratio (SNR) and sign-flipped ratio (flip ratio) scenarios. We show that the proposed network has better noise immunity than the previous DeepFPC method. This result indicates that the robustness of a deep-unfolded neural network is related to that of the algorithm it stems from.
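The idea of unfolding an iterative algorithm into network layers can be sketched as follows. This is a simplified illustration, not the DeepFPC-$\ell_2$ architecture: it assumes each layer performs a gradient step on a one-sided $\ell_2$ penalty, a soft-thresholding step, and a renormalization to the unit sphere, with the per-layer step sizes and thresholds playing the role of trainable parameters. The function names and the exact update rule are assumptions, and no training loop is shown.

```python
import math


def soft_threshold(v, t):
    """Elementwise shrinkage: sign(x) * max(|x| - t, 0)."""
    return [math.copysign(max(abs(x) - t, 0.0), x) for x in v]


def one_sided_grad(A, y, x):
    """Gradient of 0.5 * sum_i min(y_i * <a_i, x>, 0)^2 with respect to x.
    Only measurements whose sign disagrees with y contribute."""
    g = [0.0] * len(x)
    for a_i, y_i in zip(A, y):
        r = y_i * sum(a * xj for a, xj in zip(a_i, x))
        if r < 0:
            for j, a in enumerate(a_i):
                g[j] += r * y_i * a
    return g


def unfolded_forward(A, y, x0, step_sizes, thresholds):
    """Run one 'layer' per (step size, threshold) pair. In an unfolded
    network these per-layer values would be learned from data."""
    x = list(x0)
    for tau, theta in zip(step_sizes, thresholds):
        g = one_sided_grad(A, y, x)
        x = soft_threshold([xj - tau * gj for xj, gj in zip(x, g)], theta)
        norm = math.sqrt(sum(xj * xj for xj in x))
        if norm > 0:
            x = [xj / norm for xj in x]  # 1-bit data fixes direction only
    return x


if __name__ == "__main__":
    A = [[1.0, 0.0], [0.0, 1.0]]   # toy measurement matrix
    y = [1, -1]                    # observed signs
    x_hat = unfolded_forward(A, y, [-0.6, 0.8], [2.0, 2.0], [0.1, 0.1])
    print(x_hat)
```

A fixed number of layers replaces the open-ended iteration count of the classical algorithm, which is why an unfolded network can trade convergence speed against depth.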
