Xukai Xie

Exploiting Operation Importance for Differentiable Neural Architecture Search

Nov 24, 2019

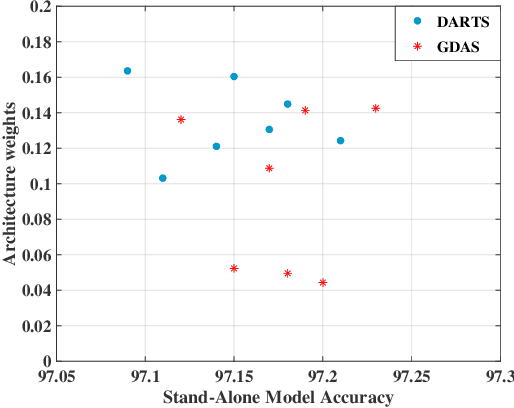

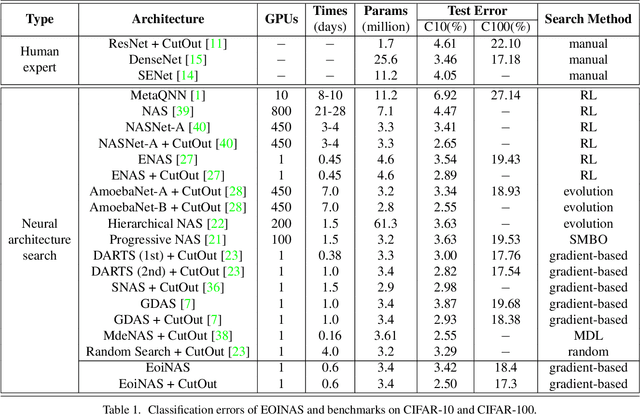

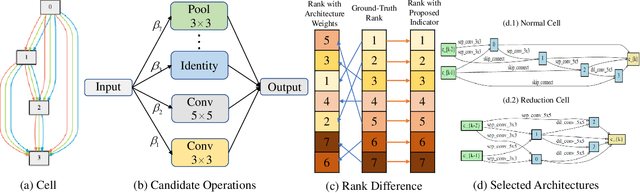

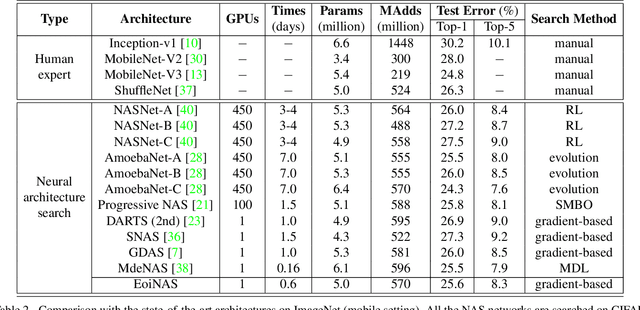

Abstract:Recently, differentiable neural architecture search methods significantly reduce the search cost by constructing a super network and relax the architecture representation by assigning architecture weights to the candidate operations. All the existing methods determine the importance of each operation directly by architecture weights. However, architecture weights cannot accurately reflect the importance of each operation; that is, the operation with the highest weight might not related to the best performance. To alleviate this deficiency, we propose a simple yet effective solution to neural architecture search, termed as exploiting operation importance for effective neural architecture search (EoiNAS), in which a new indicator is proposed to fully exploit the operation importance and guide the model search. Based on this new indicator, we propose a gradual operation pruning strategy to further improve the search efficiency and accuracy. Experimental results have demonstrated the effectiveness of the proposed method. Specifically, we achieve an error rate of 2.50\% on CIFAR-10, which significantly outperforms state-of-the-art methods. When transferred to ImageNet, it achieves the top-1 error of 25.6\%, comparable to the state-of-the-art performance under the mobile setting.

HGC: Hierarchical Group Convolution for Highly Efficient Neural Network

Jun 09, 2019

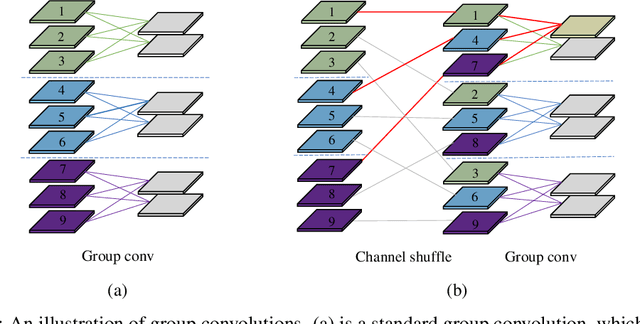

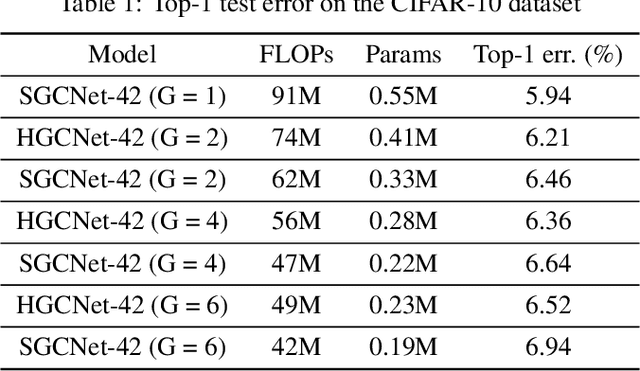

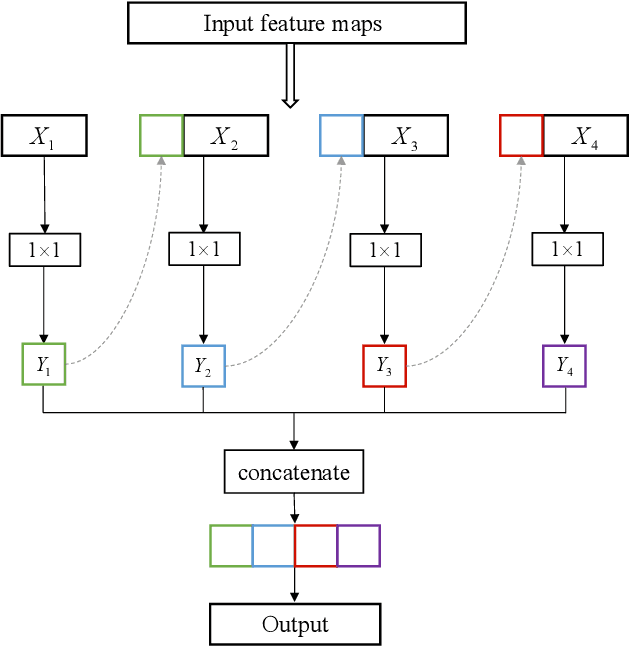

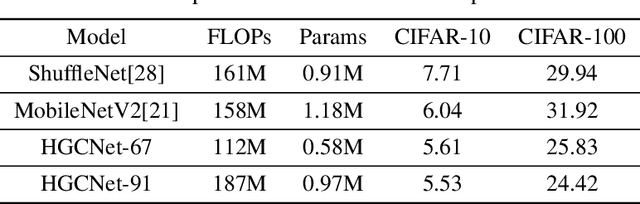

Abstract:Group convolution works well with many deep convolutional neural networks (CNNs) that can effectively compress the model by reducing the number of parameters and computational cost. Using this operation, feature maps of different group cannot communicate, which restricts their representation capability. To address this issue, in this work, we propose a novel operation named Hierarchical Group Convolution (HGC) for creating computationally efficient neural networks. Different from standard group convolution which blocks the inter-group information exchange and induces the severe performance degradation, HGC can hierarchically fuse the feature maps from each group and leverage the inter-group information effectively. Taking advantage of the proposed method, we introduce a family of compact networks called HGCNets. Compared to networks using standard group convolution, HGCNets have a huge improvement in accuracy at the same model size and complexity level. Extensive experimental results on the CIFAR dataset demonstrate that HGCNets obtain significant reduction of parameters and computational cost to achieve comparable performance over the prior CNN architectures designed for mobile devices such as MobileNet and ShuffleNet.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge