Xiaomin Liu

CBA: Communication-Bound-Aware Cross-Domain Resource Assignment for Pipeline-Parallel Distributed LLM Training in Dynamic Multi-DC Optical Networks

Dec 23, 2025

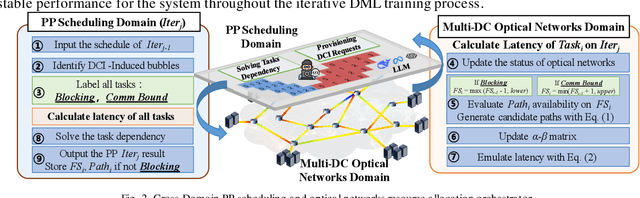

Abstract: We propose a communication-bound-aware (CBA) cross-domain resource assignment framework for pipeline-parallel distributed LLM training over multi-datacenter (multi-DC) optical networks. Compared with baselines, it reduces iteration time by 31.25% and reduces request blocking by 13.20%.
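The abstract does not give an iteration-time model, so the following is only a minimal sketch, under stated assumptions, of what "communication-bound" means for a pipeline spread across datacenters. It assumes a synchronous GPipe-style pipeline where each micro-batch passes through every stage's compute and every inter-DC hop in order, with fixed per-micro-batch times and propagation delay ignored. The names `Pipeline`, `iteration_time`, and `is_communication_bound` are hypothetical and not taken from the paper. Under these assumptions, the last of `m` micro-batches finishes after the sum of all stage and hop times plus `(m - 1)` times the slowest one, so once an inter-DC hop is the slowest element, assigning it more optical capacity shortens the iteration while extra compute does not.

```python
from dataclasses import dataclass


@dataclass
class Pipeline:
    compute_s: list[float]        # per-micro-batch compute time of each stage (s)
    activation_gbit: list[float]  # data sent from stage i to stage i+1 per micro-batch (Gbit)
    link_gbps: list[float]        # capacity assigned to the inter-DC hop i -> i+1 (Gb/s)


def transfer_times(p: Pipeline) -> list[float]:
    """Serialization time of one micro-batch on each inter-DC hop (propagation delay ignored)."""
    return [size / rate for size, rate in zip(p.activation_gbit, p.link_gbps)]


def iteration_time(p: Pipeline, micro_batches: int) -> float:
    """Makespan of identical micro-batches through a tandem line of stages and hops.

    With fixed service times and unlimited buffers, the last micro-batch finishes after
    sum(service) + (m - 1) * max(service); the slowest element sets the pace.
    """
    service = p.compute_s + transfer_times(p)
    return sum(service) + (micro_batches - 1) * max(service)


def is_communication_bound(p: Pipeline) -> bool:
    """True if some inter-DC hop is slower than every compute stage."""
    return max(transfer_times(p), default=0.0) > max(p.compute_s)


if __name__ == "__main__":
    slow = Pipeline([0.1, 0.1, 0.1], [8.0, 8.0], [100.0, 40.0])
    fast = Pipeline([0.1, 0.1, 0.1], [8.0, 8.0], [100.0, 100.0])
    for name, p in (("40G hop", slow), ("100G hop", fast)):
        print(name, round(iteration_time(p, 8), 3), is_communication_bound(p))
```

With three 0.1 s stages, 8 Gbit per hop, and hops of 100 and 40 Gb/s, the 40 Gb/s hop (0.2 s per micro-batch) dominates and 8 micro-batches take about 1.98 s; raising that hop to 100 Gb/s brings the estimate down to about 1.16 s, at which point compute is the bottleneck.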

Building a digital twin of EDFA: a grey-box modeling approach

Jul 13, 2023

Abstract: To enable intelligent and self-driving optical networks, high-accuracy physical-layer models are required. The dynamic wavelength-dependent gain of erbium-doped fiber amplifiers (EDFAs) with non-constant pump power remains a crucial modeling problem, as it determines the optical signal-to-noise ratio (OSNR) as well as the magnitude of fiber nonlinearities. Black-box data-driven models have been widely studied, but they require large amounts of training data and generalize poorly. In this paper, we derive the EDFA gain spectrum as a simple univariable linear function and, based on it, propose a grey-box EDFA gain modeling scheme. Experimental results show that for both automatic gain control (AGC) and automatic power control (APC) EDFAs, our model built with 8 data samples outperforms a neural network (NN) based model built with 900 data samples, which means the data required for modeling can be reduced by at least two orders of magnitude. Moreover, because it is based on the underlying physics of EDFAs, the proposed model demonstrates superior generalizability to unseen scenarios in the experiments. The results indicate that building a customized digital twin of each EDFA in an optical network becomes feasible, which is especially essential for next-generation multi-band network operations.
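One way to read "a simple univariable linear function" (our assumption, not the paper's stated formulation): in the standard two-level, average-inversion EDFA model, the gain in dB at every wavelength is an affine function of a single scalar, the average inversion level, so the gain at any channel is also affine in the gain at one reference channel. The sketch below fits that per-channel affine relation by least squares from a handful of measured spectra. The class name `GreyBoxGainModel` and the use of a reference channel's gain as the single variable are hypothetical choices for illustration.

```python
def fit_affine(x: list[float], y: list[float]) -> tuple[float, float]:
    """Ordinary least squares for y ~ a + b * x with a single regressor."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b = sxy / sxx  # requires the reference gain to vary across samples
    return my - b * mx, b


class GreyBoxGainModel:
    """Hypothetical grey-box model: G_k = a_k + b_k * G_ref for every channel k (all in dB)."""

    def __init__(self, ref_channel: int):
        self.ref = ref_channel
        self.coeffs: list[tuple[float, float]] = []

    def fit(self, spectra: list[list[float]]) -> "GreyBoxGainModel":
        # spectra[i][k] is the measured gain (dB) of channel k in measurement i.
        x = [s[self.ref] for s in spectra]
        n_channels = len(spectra[0])
        self.coeffs = [fit_affine(x, [s[k] for s in spectra]) for k in range(n_channels)]
        return self

    def predict(self, ref_gain_db: float) -> list[float]:
        """Full gain spectrum (dB) predicted from the gain of the reference channel."""
        return [a + b * ref_gain_db for a, b in self.coeffs]


if __name__ == "__main__":
    # Synthetic spectra G_k = L * ((alpha_k + g_k) * n - alpha_k); all coefficients are made up.
    alpha, g_star, length = [1.0, 1.5, 2.0], [3.0, 3.0, 2.5], 10.0

    def spectrum(n: float) -> list[float]:
        return [length * ((a + g) * n - a) for a, g in zip(alpha, g_star)]

    model = GreyBoxGainModel(ref_channel=1).fit([spectrum(n) for n in (0.5, 0.6, 0.7, 0.8)])
    print(model.predict(spectrum(0.65)[1]), spectrum(0.65))
```

Because the synthetic data follow the affine form exactly, four samples are enough to recover every channel's coefficients; with real measurements, noise and effects outside the two-level model would leave a residual error.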

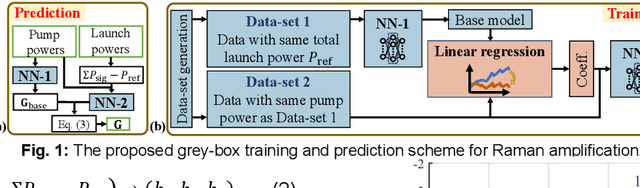

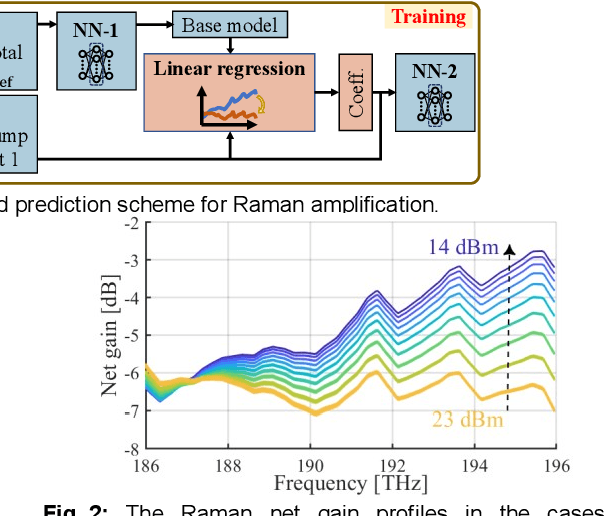

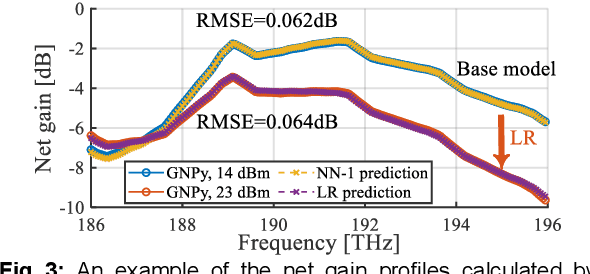

A Grey-box Launch-profile Aware Model for C+L Band Raman Amplification

Jun 24, 2022

Abstract: Based on the physical features of Raman amplification, we propose a three-step modelling scheme that combines neural networks (NN) and linear regression. Simulations demonstrate higher accuracy, lower data requirements, and lower computational complexity compared with a pure NN-based method.

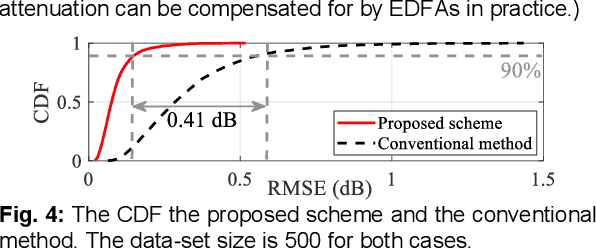

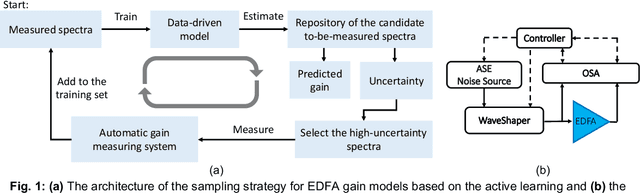

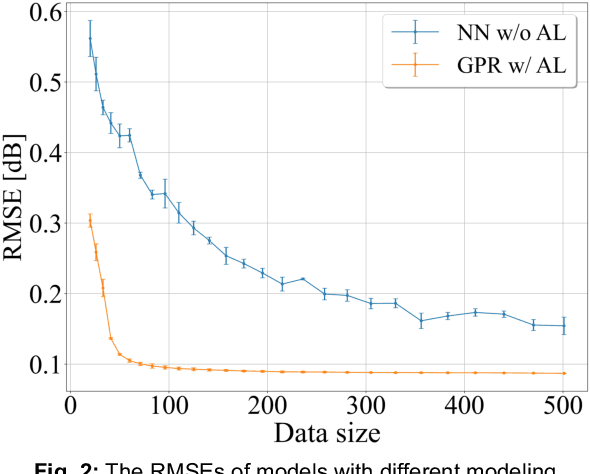

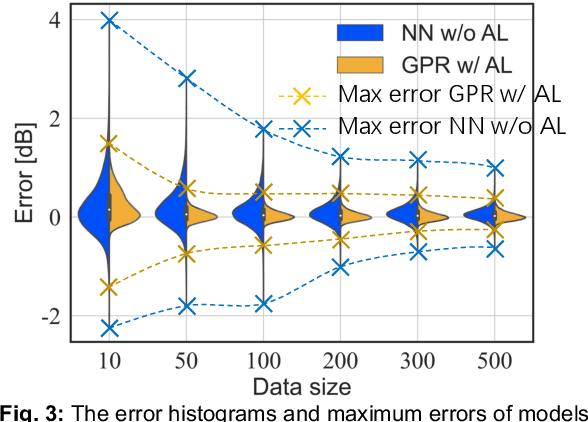

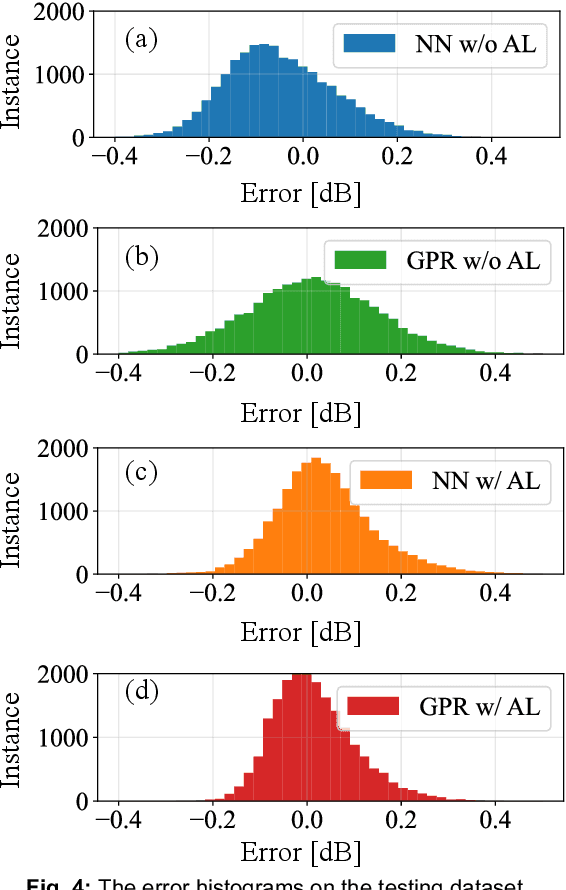

Physics-informed EDFA Gain Model Based on Active Learning

Jun 13, 2022

Abstract: We propose a physics-informed EDFA gain model based on active learning. Experimental results show that, compared with the conventional method, the proposed modelling method reaches a higher optimal accuracy and needs ~90% less training data to achieve the same performance.

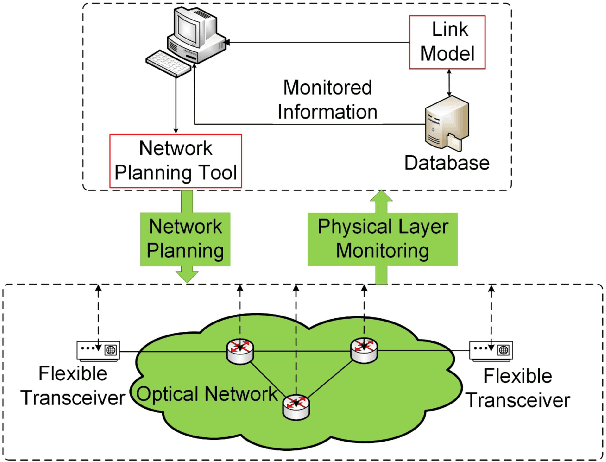

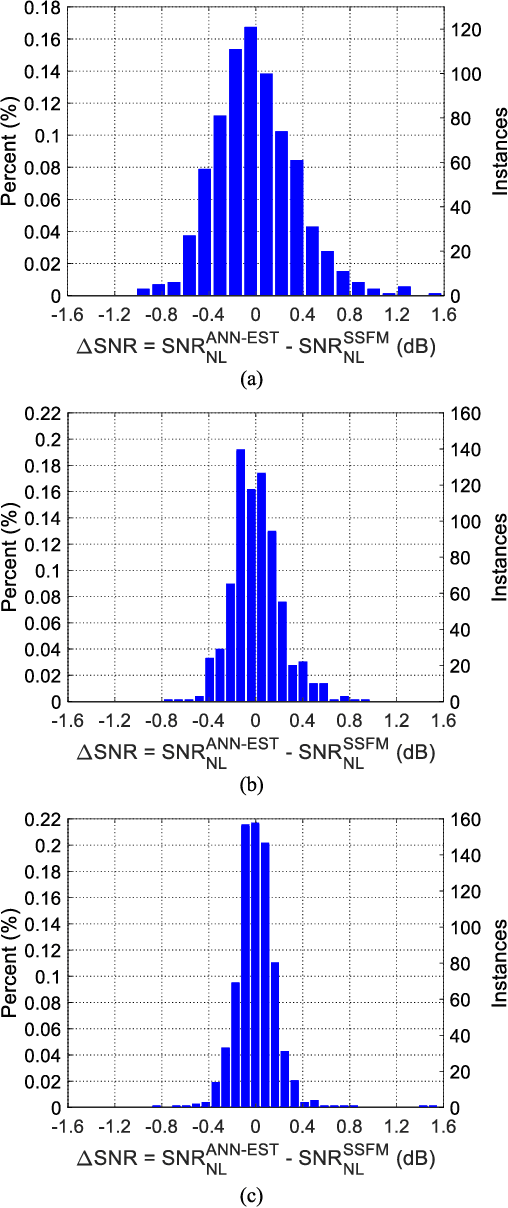

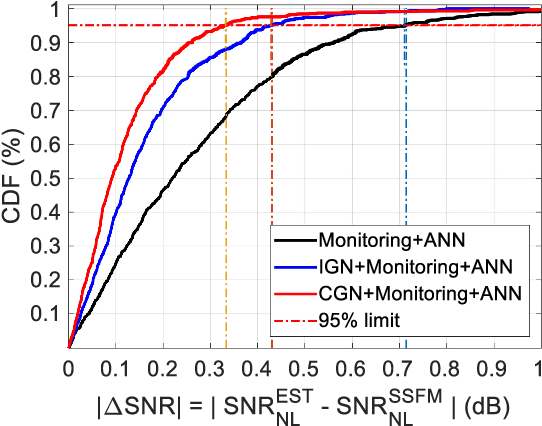

Application of Machine Learning in Fiber Nonlinearity Modeling and Monitoring for Elastic Optical Networks

Nov 23, 2018

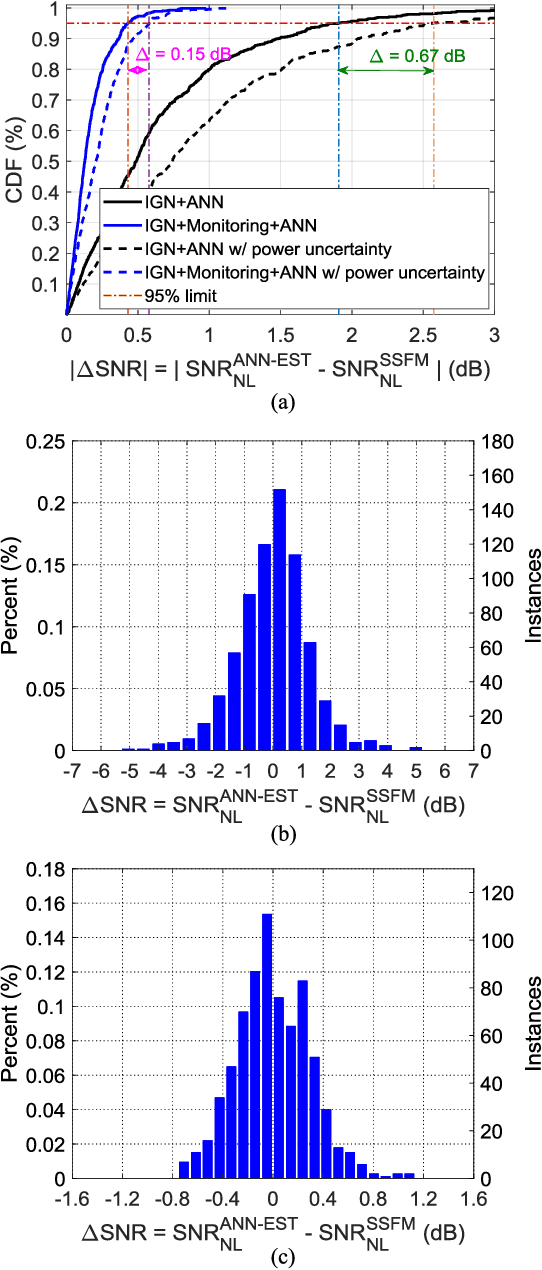

Abstract: Fiber nonlinear interference (NLI) modeling and monitoring are key building blocks for elastic optical networks (EONs). In the past, they were normally developed and investigated separately. Moreover, the accuracy of previously proposed methods still needs to be improved for heterogeneous, dynamic optical networks. In this paper, we present the application of machine learning (ML) to NLI modeling and monitoring. In particular, we first propose using ML approaches to calibrate the errors of current fiber nonlinearity models. The Gaussian-noise (GN) model is used as an illustrative example, and a significant improvement is demonstrated with the aid of an artificial neural network (ANN). Further, we propose using ML to combine the modeling and monitoring schemes for a better estimation of NLI variance. Extensive simulations with 1603 links are conducted to evaluate and analyze the performance of various schemes, and the superior performance of the ML-aided combination of modeling and monitoring is demonstrated.
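As a minimal illustration of the calibration idea (learn the error of an analytical NLI model from data and add it back as a correction), the sketch below wraps any base model, such as a GN-model implementation supplied by the caller, and learns its residual in dB. The paper uses an ANN; here a k-nearest-neighbour regressor is swapped in only to keep the example dependency-free. The link feature vectors, the class `CalibratedNLIModel`, and the toy base model in the usage are hypothetical, and the proposed combination of modeling and monitoring is not covered.

```python
import math
from typing import Callable, Sequence

Features = Sequence[float]


class CalibratedNLIModel:
    """Base NLI model plus a learned residual correction (k-NN stand-in for an ANN)."""

    def __init__(self, base_model: Callable[[Features], float], k: int = 3):
        self.base = base_model  # e.g. a GN-model NLI estimate in dB, supplied by the caller
        self.k = k
        self.train_x: list[list[float]] = []
        self.residuals: list[float] = []

    def fit(self, features: Sequence[Features], measured_db: Sequence[float]) -> "CalibratedNLIModel":
        # Residual = measured NLI minus the analytical prediction, both in dB.
        self.train_x = [list(f) for f in features]
        self.residuals = [m - self.base(f) for f, m in zip(features, measured_db)]
        return self

    def predict(self, f: Features) -> float:
        # Average the residuals of the k closest training links (Euclidean distance).
        ranked = sorted(zip(self.train_x, self.residuals), key=lambda xr: math.dist(xr[0], f))
        nearest = ranked[: self.k]
        correction = sum(r for _, r in nearest) / len(nearest)
        return self.base(f) + correction


if __name__ == "__main__":
    def toy_gn(f: Features) -> float:
        # Hypothetical base model that underestimates NLI by a constant 1.5 dB.
        return 2.0 * f[0]

    feats = [(1.0,), (2.0,), (3.0,), (4.0,)]
    measured = [2.0 * x + 1.5 for (x,) in feats]
    model = CalibratedNLIModel(toy_gn).fit(feats, measured)
    print(model.predict((2.5,)))  # 6.5: base 5.0 plus the learned 1.5 dB correction
```

Keeping the analytical model as the backbone and learning only its residual means the learned part has a smaller, smoother target than learning NLI from scratch, which is the general motivation for calibration over a pure black-box model.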
